Sorachio-1B: Conversational AI Assistant

Overview

Sorachio-1B is a fine-tuned conversational AI model built on Google's Gemma 3, optimized for multilingual dialogue and assistant-style tasks. This fine-tuning enhances the model's conversational tone and develops a distinctive persona for more engaging and natural interactions.

The model uses QLoRA (Quantized Low-Rank Adaptation) for efficient training with limited computational resources while preserving strong conversational abilities across multiple languages.

Model Details

- Base Model: google/gemma-3-1b-it

- Fine-tuning Method: QLoRA (4-bit quantization + LoRA)

- Model Size: 1B parameters

- Training Infrastructure: Google Colab (T4 GPU)

- Languages Supported: Multilingual (leveraging Gemma's native multilingual capabilities)

Conversational Enhancement

The fine-tuning process develops a distinctive conversational personality with several key characteristics:

Persona Development:

- Friendly and approachable tone that makes users comfortable

- Culturally adaptive responses, especially in Indonesian contexts

- Professional yet casual, balancing helpfulness with relaxed interaction

- Emotionally aware understanding of conversational nuances

Communication Style:

- Natural speech patterns with colloquial expressions

- Contextually appropriate formality adjustment

- Empathetic responses with genuine interest in helping

- Consistent personality maintained across topics and languages

Training Configuration

Dataset

- Size: ~500,000 tokens of high-quality multi-turn conversational data

- Content: Several thousand conversation examples covering various topics and interaction patterns

- Focus: Multilingual conversations curated to reinforce consistent tone and personality traits

QLoRA Setup

QLoRA combines 4-bit quantization with Low-Rank Adaptation, reducing memory requirements from ~18GB to ~9GB:

- Precision: 4-bit quantization (NF4 type) with double quantization

- Compute Type: Float16, which the T4 GPU supports natively (unlike bfloat16)

- LoRA Rank: 8 with Alpha 16

- Target Modules: All attention and MLP layers (q_proj, k_proj, v_proj, o_proj, gate_proj, up_proj, down_proj)

- Trainable Parameters: 6,522,880 (0.65% of total)
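As a sanity check on the trainable-parameter figure, the sketch below recomputes it using the LoRA rule: each adapted linear layer with `in` inputs and `out` outputs gains r·(in + out) parameters from its two low-rank matrices. The layer dimensions are assumptions taken from the public google/gemma-3-1b-it configuration (hidden size 1152, MLP size 6912, 26 layers, 4 query heads and 1 key/value head of dimension 256), not from this card.

```python
# Assumed google/gemma-3-1b-it dimensions (from its public config, not this card)
HIDDEN = 1152
INTERMEDIATE = 6912
NUM_LAYERS = 26
NUM_HEADS = 4
NUM_KV_HEADS = 1
HEAD_DIM = 256

LORA_R = 8
LORA_ALPHA = 16


def lora_params(in_features: int, out_features: int, r: int) -> int:
    """LoRA adds A (r x in) and B (out x r) beside a frozen in->out linear layer."""
    return r * (in_features + out_features)


# (in_features, out_features) for each targeted projection in one decoder layer
TARGETS = {
    "q_proj": (HIDDEN, NUM_HEADS * HEAD_DIM),
    "k_proj": (HIDDEN, NUM_KV_HEADS * HEAD_DIM),
    "v_proj": (HIDDEN, NUM_KV_HEADS * HEAD_DIM),
    "o_proj": (NUM_HEADS * HEAD_DIM, HIDDEN),
    "gate_proj": (HIDDEN, INTERMEDIATE),
    "up_proj": (HIDDEN, INTERMEDIATE),
    "down_proj": (INTERMEDIATE, HIDDEN),
}

per_layer = sum(lora_params(i, o, LORA_R) for i, o in TARGETS.values())
trainable = per_layer * NUM_LAYERS
scaling = LORA_ALPHA / LORA_R  # the LoRA update B @ A is multiplied by alpha / r

print(f"per layer: {per_layer:,}, total: {trainable:,}, scaling: {scaling}")
```

Under these assumed dimensions, the total comes to 6,522,880, matching the figure above. This suggests that every targeted projection in all 26 layers received an adapter, and that alpha 16 with rank 8 gives a scaling factor of 2.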

Training Parameters

- Epochs: 3

- Batch Size: 1 per device with 8-step gradient accumulation (effective: 8)

- Learning Rate: 2e-4 with cosine scheduler and 0.1 warmup ratio

- Optimizer: Paged AdamW 8-bit with 0.01 weight decay

- Dropout: 0.05
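The batch and scheduler settings can be made concrete with a small pure-Python sketch. It assumes the Hugging Face Trainer's behaviour for a cosine scheduler: warmup lasts ceil(warmup_ratio × total_steps) steps, the rate rises linearly to the peak, then follows a half cosine down to zero. It also assumes a single GPU. The 525 total optimizer steps come from the training results reported for this run.

```python
import math

PER_DEVICE_BATCH = 1
GRAD_ACCUM_STEPS = 8
PEAK_LR = 2e-4
WARMUP_RATIO = 0.1
TOTAL_STEPS = 525  # optimizer steps over 3 epochs, per the training results

# Each optimizer step accumulates gradients over this many sequences (one GPU assumed)
effective_batch = PER_DEVICE_BATCH * GRAD_ACCUM_STEPS
warmup_steps = math.ceil(WARMUP_RATIO * TOTAL_STEPS)


def learning_rate(step: int) -> float:
    """Linear warmup, then cosine decay to zero (assumed Trainer-style schedule)."""
    if step < warmup_steps:
        return PEAK_LR * step / max(1, warmup_steps)
    progress = (step - warmup_steps) / max(1, TOTAL_STEPS - warmup_steps)
    return PEAK_LR * max(0.0, 0.5 * (1.0 + math.cos(math.pi * progress)))


print(f"effective batch: {effective_batch}, warmup steps: {warmup_steps}")
for step in (0, 26, warmup_steps, 289, TOTAL_STEPS):
    print(step, f"{learning_rate(step):.2e}")
```

Under these assumptions, the peak rate of 2e-4 is reached at step 53, halves to 1e-4 at step 289 (the midpoint of the decay), and reaches zero at step 525.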

Training Results

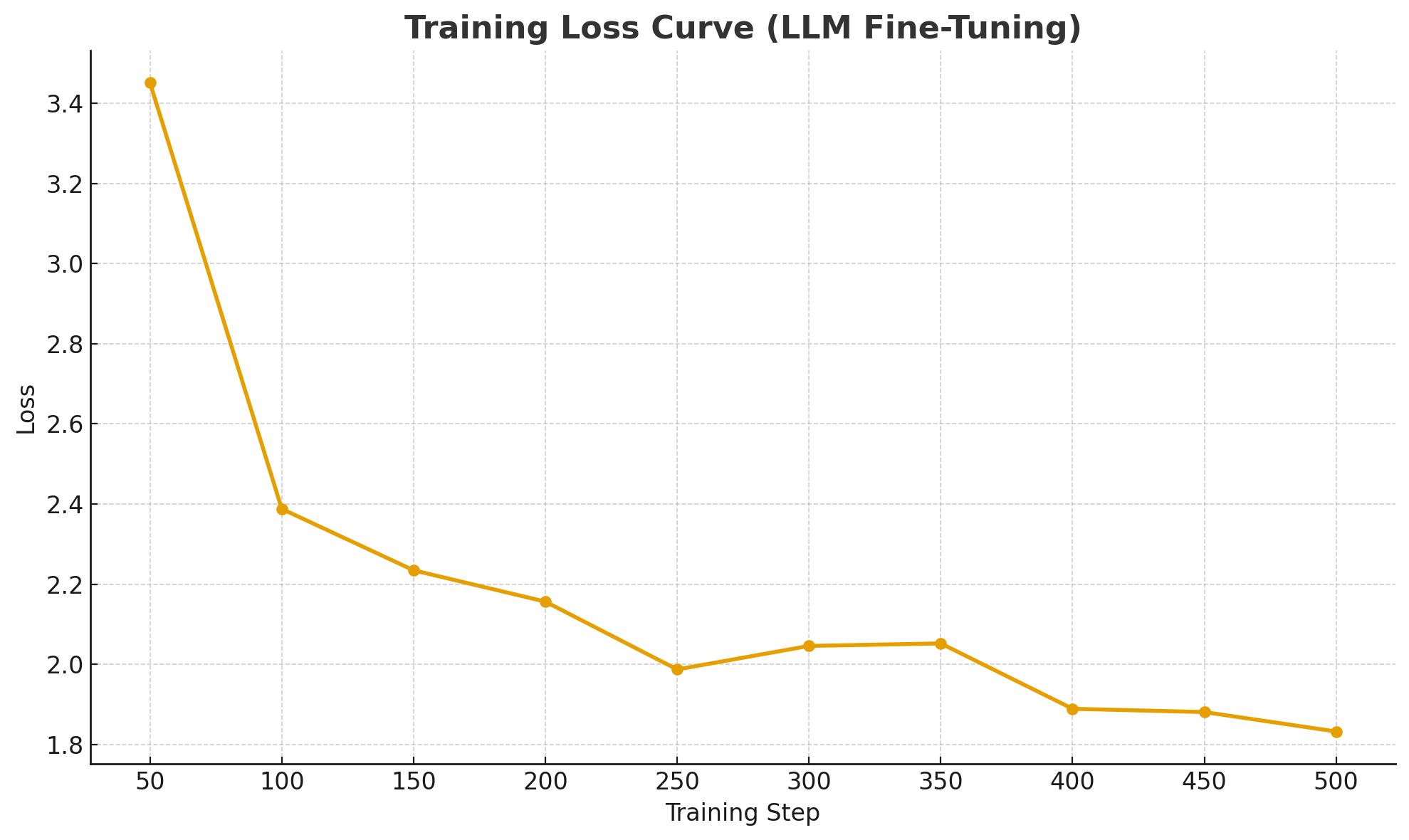

Training loss fell from 3.4508 at step 50 to 1.8328 at step 500, the last logged value; the full run was 525 steps over 3 epochs:

| Step | Training Loss |
|---|---|
| 50 | 3.4508 |
| 100 | 2.3880 |
| 150 | 2.2349 |
| 200 | 2.1567 |
| 250 | 1.9876 |
| 300 | 2.0462 |
| 350 | 2.0525 |
| 400 | 1.8895 |
| 450 | 1.8813 |
| 500 | 1.8328 |

Training Efficiency:

- Total Time: 2,863.7 seconds (about 47 minutes 44 seconds)

- Training Speed: 1.467 samples/second, 0.183 steps/second

- Average Training Loss: 2.179

- Final Training Loss: 1.8328

- Total FLOPs: 7.56 × 10^15
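The efficiency figures are related by simple arithmetic. The sketch below assumes a single GPU on which each of the 525 optimizer steps consumes 1 × 8 = 8 sequences. The derived per-epoch sequence count is an estimate under that assumption, not a number reported by the training run.

```python
RUNTIME_S = 2863.7
TOTAL_STEPS = 525
EPOCHS = 3
SEQS_PER_STEP = 1 * 8  # per-device batch x gradient accumulation (assumed 1 GPU)

total_seqs = TOTAL_STEPS * SEQS_PER_STEP
steps_per_s = TOTAL_STEPS / RUNTIME_S
samples_per_s = total_seqs / RUNTIME_S
seqs_per_epoch = total_seqs // EPOCHS
minutes, seconds = divmod(RUNTIME_S, 60)

print(f"{steps_per_s:.3f} steps/s, {samples_per_s:.3f} samples/s")
print(f"~{seqs_per_epoch} sequences per epoch")
print(f"runtime: {int(minutes)} min {seconds:.1f} s")
```

The computed rates round to the logged 0.183 steps/second and 1.467 samples/second, which supports reading the runtime as 2,863.7 seconds of training.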

The loss decreased almost monotonically (with a slight uptick between steps 300 and 350), reaching 1.8328 by step 500. This indicates successful adaptation to the conversational dataset and an improvement over earlier training runs.

Usage

Quick Start

```python
from transformers import AutoTokenizer, AutoModelForCausalLM
import torch

# Load model and tokenizer
model_id = "izzulgod/sorachio-1b"
tokenizer = AutoTokenizer.from_pretrained(model_id)
model = AutoModelForCausalLM.from_pretrained(
    model_id,
    device_map="auto",
    torch_dtype=torch.float16,
    attn_implementation="eager",
).eval()

# Prepare conversation ("Perkenalkan dirimu" is Indonesian for "Introduce yourself")
messages = [{"role": "user", "content": "Perkenalkan dirimu"}]

# Apply the chat template and append the prompt for the assistant's turn
input_ids = tokenizer.apply_chat_template(
    messages, tokenize=True, add_generation_prompt=True, return_tensors="pt"
).to(model.device)

# Generate a sampled response
with torch.no_grad():
    outputs = model.generate(
        input_ids=input_ids,
        # Single unpadded sequence, so every prompt token is marked as real
        attention_mask=(input_ids != tokenizer.pad_token_id).long(),
        max_new_tokens=256,
        do_sample=True,
        top_p=0.9,
        temperature=0.6,
        pad_token_id=tokenizer.eos_token_id,
    )

# Decode only the newly generated tokens, skipping the prompt
response = tokenizer.decode(outputs[0][input_ids.shape[-1]:], skip_special_tokens=True)
print(response)
```
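The toy pure-Python sketch below illustrates what `temperature=0.6` and `top_p=0.9` do in the `generate` call. It applies temperature scaling followed by nucleus (top-p) filtering over a made-up four-token vocabulary. It follows the textbook rule: keep the smallest set of most likely tokens whose probability reaches `top_p`, then renormalize. It is not Transformers' own implementation, which differs in details such as tie handling. It also ignores any top-k filter that the model's default generation config may apply.

```python
import math
import random
from typing import Dict, List


def top_p_distribution(logits: List[float], temperature: float, top_p: float) -> Dict[int, float]:
    """Temperature-scale logits, softmax them, then keep the top-p nucleus."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for a numerically stable softmax
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    probs = [e / total for e in exps]

    # Walk tokens from most to least likely until the kept mass reaches top_p
    kept, mass = [], 0.0
    for idx in sorted(range(len(probs)), key=probs.__getitem__, reverse=True):
        kept.append(idx)
        mass += probs[idx]
        if mass >= top_p:
            break
    # Renormalize the surviving tokens so their probabilities sum to 1
    return {idx: probs[idx] / mass for idx in kept}


def sample(dist: Dict[int, float], rng: random.Random) -> int:
    tokens = list(dist)
    return rng.choices(tokens, weights=[dist[t] for t in tokens], k=1)[0]


toy_logits = [2.0, 1.0, 0.0, -1.0]  # hypothetical scores for tokens 0..3
sharp = top_p_distribution(toy_logits, temperature=0.6, top_p=0.9)
flat = top_p_distribution(toy_logits, temperature=1.0, top_p=0.9)
print(sorted(sharp), sorted(flat))  # the lower temperature leaves fewer candidates
print(sample(sharp, random.Random(0)))
```

With these toy scores, a temperature of 0.6 concentrates probability so that only two tokens survive the 0.9 nucleus, while a temperature of 1.0 leaves three. This is why lower temperatures give more focused, less varied replies.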

Sample Output

Halo! Saya Sorachio, asisten AI yang dibuat untuk membantu kamu dengan berbagai hal — mulai dari menjawab pertanyaan, menulis, sampai mengobrol santai. Aku senang bisa bertemu denganmu! 😊
(English: "Hi! I'm Sorachio, an AI assistant made to help you with all kinds of things, from answering questions and writing to casual chatting. I'm happy to meet you! 😊")

Model Capabilities

Core Features

- Multilingual Support: English, Indonesian, and other Gemma-supported languages with cross-lingual understanding

- Multi-turn Dialogue: Context retention across extended conversations with natural dialogue flow

- Persona Consistency: Maintains friendly, culturally-aware character across all interactions

- Safety: Inherits safety features from the base Gemma model
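Multi-turn context works by re-sending the accumulated message list on every turn. The sketch below keeps that history and trims the oldest user/assistant pairs from what is sent, so the prompt stays bounded. It assumes that turns alternate between user and assistant, as Gemma chat templates generally expect. `generate_reply` is a hypothetical callable; in practice it would wrap the `apply_chat_template` and `generate` calls from the Quick Start. `max_messages` is an illustrative knob, not a model setting.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


class Conversation:
    """Stores the full chat history and sends a trimmed window to the model."""

    def __init__(self, generate_reply: Callable[[List[Message]], str], max_messages: int = 21) -> None:
        self.generate_reply = generate_reply  # hypothetical: messages -> reply text
        self.max_messages = max_messages
        self.messages: List[Message] = []

    def _window(self, messages: List[Message]) -> List[Message]:
        # Drop whole user/assistant pairs from the front so the window still
        # starts with a user turn and roles keep alternating.
        excess = len(messages) - self.max_messages
        if excess > 0:
            messages = messages[excess + excess % 2:]
        return messages

    def ask(self, text: str) -> str:
        pending = self.messages + [{"role": "user", "content": text}]
        reply = self.generate_reply(self._window(pending))
        # Commit both turns only after a reply succeeds, preserving alternation
        self.messages = pending + [{"role": "assistant", "content": reply}]
        return reply


# Usage with a stand-in model that echoes the latest user message
def echo_model(messages: List[Message]) -> str:
    return f"You said: {messages[-1]['content']}"


chat = Conversation(echo_model, max_messages=3)
for text in ["Hi!", "How are you?", "What's your name?"]:
    print(chat.ask(text))
```

The full history stays available for display or logging, while only the most recent window is passed to the model. How large that window can be is ultimately bounded by the base model's context length.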

Enhanced Characteristics

- Emotional Intelligence: Appropriate responses to different emotional contexts

- Cultural Adaptation: Communication style adapts to cultural expectations

- Conversational Memory: References earlier parts of the conversation effectively

- Professional Boundaries: Helpful assistant role while remaining personable

Technical Requirements

Hardware

- GPU: NVIDIA T4 (the Google Colab free tier is sufficient)

- Memory: ~9GB of GPU memory for QLoRA fine-tuning with 4-bit quantization

- Storage: ~3GB for model checkpoints
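A rough, weights-only estimate helps put these figures in context. The sketch multiplies an assumed nominal 1.0 billion parameters by the bits each precision stores. The NF4 overhead follows the QLoRA paper's block sizes: one 8-bit constant per 64 weights, and one 32-bit second-level constant per 256 of those constants. The estimate deliberately ignores activations, the KV cache, LoRA optimizer state, and layers such as the embeddings that typically stay in 16-bit. This is why real usage, including the ~9GB quoted above, is much higher.

```python
NUM_PARAMS = 1.0e9  # nominal "1B"; the exact count is an assumption


def weight_gb(num_params: float, bits_per_param: float) -> float:
    """Memory for the weights alone, in decimal gigabytes."""
    return num_params * bits_per_param / 8 / 1e9


# NF4 stores 4-bit codes plus one 8-bit absmax per 64-weight block, and (with
# double quantization) one 32-bit constant per 256 of those blocks.
NF4_DOUBLE_QUANT_BITS = 4 + 8 / 64 + 32 / (64 * 256)

for name, bits in [("float32", 32), ("float16", 16), ("nf4 + double quant", NF4_DOUBLE_QUANT_BITS)]:
    print(f"{name:>20}: {weight_gb(NUM_PARAMS, bits):.2f} GB")
```

Loading in float16, as the Quick Start does, needs roughly 2 GB for the weights under this assumption, which fits comfortably on a 16 GB T4.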

Dependencies

transformers>=4.50.0

peft>=0.10.0

bitsandbytes>=0.43.0

torch>=2.0.0

Limitations

- Context Window: Limited to the base model's context length (32K tokens for Gemma 3 1B)

- Domain Focus: Optimized primarily for conversational tasks

- Performance Variation: May vary across different languages

- Resource Requirements: GPU recommended for optimal inference speed

License

This model follows the licensing terms of the base Gemma model. Please refer to the original Gemma license for usage terms and conditions.
