
license: cc-by-4.0
task_categories:
- image-to-3d
language:
- en

ROVR Open Dataset

Introduction

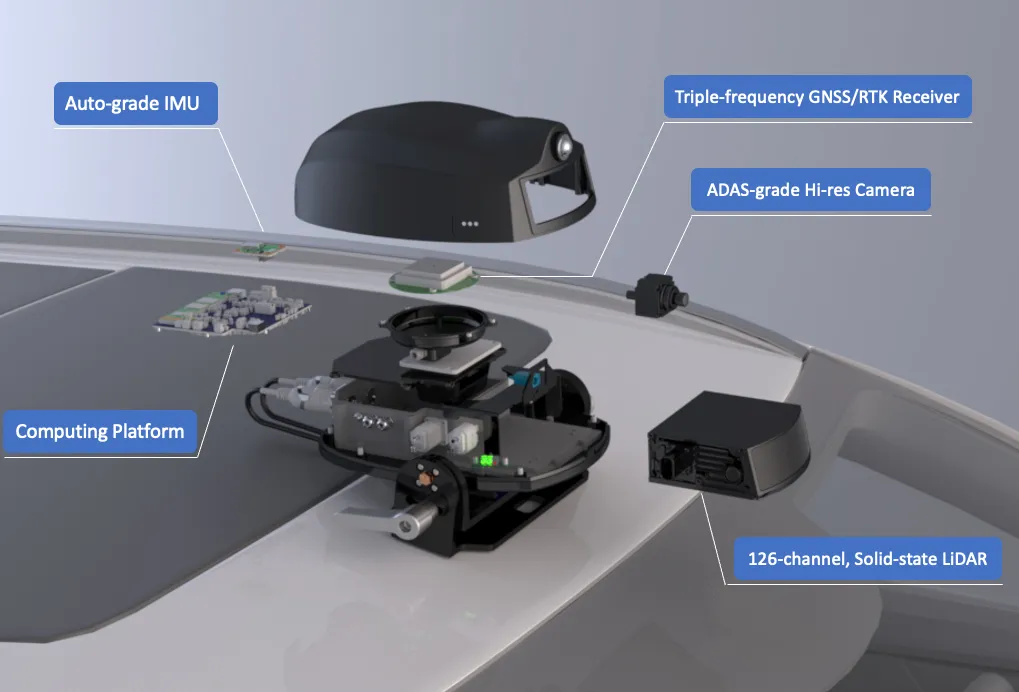

Welcome to the ROVR Open Dataset repository! This dataset supports autonomous driving and robotics research with real-world data captured by ADAS cameras and LiDAR sensors. The data spans more than 50 countries and over 20 million kilometers of driving, making it well suited to training and developing algorithms for depth estimation, object detection, and semantic segmentation.

Key Features

- Global Coverage: 50+ countries with diverse driving environments and road scenarios.

- High-Resolution Data: Data from high-precision ADAS cameras and LiDAR sensors.

- Real-World Scenarios: More than 20 million kilometers of real-world driving data covering varied environments and conditions.

- Comprehensive Data Types: Includes LiDAR point clouds and camera data supporting monocular depth estimation, object detection, and semantic segmentation.

Dataset Overview

The ROVR Open Dataset includes several data types to support different autonomous driving tasks:

- LiDAR Point Clouds: Dense 3D point clouds for precise depth estimation and scene analysis.

- Monocular Depth Estimation: High-precision depth information to aid autonomous driving perception.

- Object Detection: Supports real-time detection of vehicles, pedestrians, and obstacles.

- Semantic Segmentation: Pixel-level segmentation of roads, obstacles, and other features.

[Figure: example visualizations for LiDAR Point Clouds, Monocular Depth Estimation, Object Detection, and Semantic Segmentation]
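The card does not document its calibration format or how LiDAR points become depth targets. As a rough illustration of a common approach, the sketch below applies a LiDAR-to-camera rigid transform and a pinhole projection, keeping the nearest return when several points fall on the same pixel. The rotation R, translation t, intrinsics (fx, fy, cx, cy), and function names are all hypothetical, and the camera frame is assumed to have x pointing right, y down, and z forward.

```python
from typing import Dict, Iterable, Sequence, Tuple

Point = Tuple[float, float, float]
Pixel = Tuple[int, int]


def lidar_to_camera(p: Point, R: Sequence[Sequence[float]], t: Sequence[float]) -> Point:
    """Map a LiDAR point into the camera frame: p_cam = R @ p + t (hypothetical calibration)."""
    x, y, z = p
    return (
        R[0][0] * x + R[0][1] * y + R[0][2] * z + t[0],
        R[1][0] * x + R[1][1] * y + R[1][2] * z + t[1],
        R[2][0] * x + R[2][1] * y + R[2][2] * z + t[2],
    )


def project_to_sparse_depth(
    points: Iterable[Point],
    R: Sequence[Sequence[float]],
    t: Sequence[float],
    fx: float, fy: float, cx: float, cy: float,
    width: int, height: int,
) -> Dict[Pixel, float]:
    """Project points with a pinhole model; return {(u, v): depth} for visible pixels."""
    depth: Dict[Pixel, float] = {}
    for p in points:
        xc, yc, zc = lidar_to_camera(p, R, t)
        if zc <= 0:
            continue  # point is behind the camera
        u = int(round(fx * xc / zc + cx))
        v = int(round(fy * yc / zc + cy))
        if 0 <= u < width and 0 <= v < height:
            # Several points can land on one pixel; keep the nearest (smallest depth).
            if (u, v) not in depth or zc < depth[(u, v)]:
                depth[(u, v)] = zc
    return depth


# Toy example: identity extrinsics, i.e. points already expressed in the camera frame.
R_identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
t_zero = [0.0, 0.0, 0.0]
points = [(0.0, 0.0, 10.0), (1.0, 0.0, 10.0), (0.0, 0.0, -5.0), (0.0, 0.0, 5.0)]
depth = project_to_sparse_depth(points, R_identity, t_zero,
                                fx=1000.0, fy=1000.0, cx=960.0, cy=540.0,
                                width=1920, height=1080)
print(depth)  # {(960, 540): 5.0, (1060, 540): 10.0}
```

The result is sparse: only pixels hit by a LiDAR return receive a depth value, which is why depth evaluation against LiDAR ground truth is usually restricted to those pixels.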

ROVR Open Dataset Overview

Dataset Volume

The first batch of the ROVR Open Dataset includes 1,363 clips, each containing 30 seconds of data, collected from diverse global environments to support autonomous driving research.

Scene Coverage

The dataset spans three scene types, each with varied conditions to ensure comprehensive representation:

- Highway: High-speed road scenarios.

- Urban: City environments with complex traffic patterns.

- Rural: Less dense, open-road settings.

Conditions: Each scene type includes Day, Night, and Rainy conditions, so every scene type is covered under varied lighting and weather.
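Because every scene type is paired with every condition, the data can be viewed as a 3 x 3 grid of scene/condition strata. The sketch below enumerates that grid and counts clips per stratum, which may help when checking coverage or building stratified subsets. The per-clip metadata fields (clip_id, scene, condition) are hypothetical; the card does not say how these labels are stored.

```python
from collections import Counter
from itertools import product
from typing import Dict, Iterable, List, Mapping, Tuple

# Scene types and conditions as listed in this card.
SCENES = ("Highway", "Urban", "Rural")
CONDITIONS = ("Day", "Night", "Rainy")

# All (scene, condition) strata: 3 x 3 = 9 combinations.
STRATA: List[Tuple[str, str]] = list(product(SCENES, CONDITIONS))


def count_by_stratum(clips: Iterable[Mapping[str, str]]) -> Dict[Tuple[str, str], int]:
    """Count clips per stratum; assumes hypothetical 'scene' and 'condition' keys."""
    counts = Counter((clip["scene"], clip["condition"]) for clip in clips)
    # Include empty strata so coverage gaps are visible.
    return {stratum: counts.get(stratum, 0) for stratum in STRATA}


def missing_strata(clips: Iterable[Mapping[str, str]]) -> List[Tuple[str, str]]:
    """Return strata that have no clips in the given collection."""
    return [s for s, n in count_by_stratum(clips).items() if n == 0]


# Toy metadata for illustration only (not real ROVR clip records).
example_clips = [
    {"clip_id": "clip_a", "scene": "Urban", "condition": "Night"},
    {"clip_id": "clip_b", "scene": "Urban", "condition": "Night"},
    {"clip_id": "clip_c", "scene": "Highway", "condition": "Rainy"},
]
print(len(STRATA))                                          # 9
print(count_by_stratum(example_clips)[("Urban", "Night")])  # 2
print(len(missing_strata(example_clips)))                   # 7
```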

Dataset Split

The dataset is divided as follows:

- Training Set: 1,296 clips

- Test Set: 67 clips
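The split sizes and clip length allow a quick sanity check of the first batch. The sketch below uses only figures stated in this card (1,296 training clips, 67 test clips, 30 seconds per clip); it does not estimate frame or point-cloud counts, since the card gives no frame or sweep rate.

```python
# Figures stated in this card for the first batch.
CLIP_SECONDS = 30
TRAIN_CLIPS = 1_296
TEST_CLIPS = 67

total_clips = TRAIN_CLIPS + TEST_CLIPS
test_fraction = TEST_CLIPS / total_clips


def hours(clips: int, seconds_per_clip: int = CLIP_SECONDS) -> float:
    """Total recording time in hours for a number of fixed-length clips."""
    return clips * seconds_per_clip / 3600


print(total_clips)                      # 1363, matching the stated batch size
print(f"{test_fraction:.1%}")           # 4.9% of clips are in the test set
print(f"{hours(total_clips):.2f} h")    # 11.36 h of data in total
print(f"{hours(TRAIN_CLIPS):.2f} h train, {hours(TEST_CLIPS):.2f} h test")  # 10.80 h train, 0.56 h test
```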

Annotations

- Current: Ground truth annotations are provided for monocular depth estimation.

- Planned: Annotations for object detection and semantic segmentation will be included in future releases.

Future Releases

A second batch of data is planned for open-source release to further expand the dataset’s scope and utility.

Challenges

We will be hosting global challenges to advance autonomous driving research. These challenges will focus on the following areas:

- Monocular Depth Estimation (Coming Soon): Depth estimation from camera images, with LiDAR ground truth.

- Fusion Detection & Segmentation (Coming Soon): Real-time vehicle and obstacle detection using camera and LiDAR data, along with pixel-level segmentation.
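The card does not yet specify the evaluation protocol for the depth estimation challenge. As an assumption, the sketch below computes three metrics widely used in monocular depth benchmarks (absolute relative error, RMSE, and the δ < 1.25 accuracy), restricted to pixels with a valid ground-truth value, since LiDAR-derived depth is sparse. Inputs are flattened per-pixel lists, and treating None or non-positive values as "no ground truth" is a hypothetical convention, not the challenge's official rule.

```python
import math
from typing import Dict, Optional, Sequence


def depth_metrics(pred: Sequence[float], gt: Sequence[Optional[float]]) -> Dict[str, float]:
    """AbsRel, RMSE and delta<1.25 over pixels with valid ground truth.

    Pixels whose GT is None or <= 0 are treated as having no LiDAR return
    (hypothetical convention); non-positive predictions are also skipped to
    avoid division by zero.
    """
    pairs = [(p, g) for p, g in zip(pred, gt) if g is not None and g > 0 and p > 0]
    if not pairs:
        raise ValueError("no pixels with valid ground truth")
    n = len(pairs)
    abs_rel = sum(abs(p - g) / g for p, g in pairs) / n
    rmse = math.sqrt(sum((p - g) ** 2 for p, g in pairs) / n)
    # Fraction of pixels where max(pred/gt, gt/pred) is strictly below 1.25.
    delta1 = sum(1 for p, g in pairs if max(p / g, g / p) < 1.25) / n
    return {"abs_rel": abs_rel, "rmse": rmse, "delta1": delta1}


# Toy values: only the first two pixels have valid ground truth.
pred = [10.0, 20.0, 5.0, 7.0]
gt = [10.0, 25.0, None, 0.0]
metrics = depth_metrics(pred, gt)
print(metrics)
```

In the toy example, the second pixel's ratio is exactly 1.25, so it does not count toward δ < 1.25 and the accuracy is 0.5.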

Licensing

The dataset is available under the following licenses:

- Open-Source License (CC BY-NC-SA 4.0): Free for non-commercial research with attribution; adaptations must be shared under the same license.

- Commercial License (CC BY 4.0): Full access for commercial use, with attribution required.

For detailed licensing information, please see LICENSE.md.

ROVR Network Open Dataset

Getting Started

Explore, download, and contribute to the ROVR Open Dataset to help shape the future of autonomous driving.

Dataset Usage Rules

This dataset and its subsets may be used only after signing the Agreement.

Without permission, you may not forward, publish, or distribute this dataset or its subsets to any organization or individual, in any way or by any means.

Requests to copy or share the dataset should be sent to the official contact email.

Please cite our paper if the ROVR Open Dataset is useful to your research.

Full Dataset Access

All users can obtain and use this dataset and its subsets only after signing the Agreement and sending it to the official contact email.

For students, research groups, or research institutions requesting the data, the Agreement must be signed by both the Responsible Party and the Point of Contact (POC):

- Responsible Party: The supervisor of the research group or a representative of the research institution with authority to sign the Agreement. Must be a permanent member of the institution's staff.

- Point of Contact (POC): The individual with detailed knowledge of how the dataset will be used. In some cases, the Responsible Party and the POC may be the same person.

- The homepage of the Responsible Party (or of their research group, laboratory, department, etc.) must be provided to verify their role.

Please send a scanned copy of the signed Agreement to the official contact email: 📧 [email protected]

Please CC the Responsible Party when sending the email.

Email format for ROVR Open Dataset application:

- Subject: ROVR Open Dataset Application

- CC: The email address of the Responsible Party

- Attachment: ROVR_Open_Dataset_Agreement.pdf (scanned copy)
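For applicants who prefer to prepare the message programmatically, the sketch below uses Python's standard `email.message.EmailMessage` to assemble an email in the format above: the required subject, the Responsible Party in CC, and the scanned Agreement as a PDF attachment. All addresses are hypothetical placeholders to be replaced with the official contact email and your own addresses, and the placeholder bytes stand in for the real scan. Actually sending the message (for example with `smtplib`) is left out.

```python
from email.message import EmailMessage


def build_application_email(sender: str, contact: str, responsible_party: str,
                            agreement_pdf: bytes) -> EmailMessage:
    """Assemble an application email following the format described in this card."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = contact                 # the official contact email
    msg["Cc"] = responsible_party       # the card asks applicants to CC the Responsible Party
    msg["Subject"] = "ROVR Open Dataset Application"
    msg.set_content("Please find attached the signed ROVR Open Dataset Agreement (scanned copy).")
    # Attach the scanned Agreement as a PDF under the filename given in the card.
    msg.add_attachment(agreement_pdf, maintype="application", subtype="pdf",
                       filename="ROVR_Open_Dataset_Agreement.pdf")
    return msg


# Hypothetical addresses and placeholder bytes; in practice read the real scan, e.g.
# pathlib.Path("ROVR_Open_Dataset_Agreement.pdf").read_bytes().
msg = build_application_email(
    sender="student@example.edu",
    contact="contact@example.org",
    responsible_party="supervisor@example.edu",
    agreement_pdf=b"%PDF-1.4 placeholder",
)
print(msg["Subject"])  # ROVR Open Dataset Application
```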

Citation

If you use the ROVR Open Dataset or related resources, please cite us as:

@article{guo2025rovr,
  title={ROVR-Open-Dataset: A Large-Scale Depth Dataset for Autonomous Driving},
  author={Guo, Xianda and Zhang, Ruijun and Duan, Yiqun and Wang, Ruilin and Zhou, Keyuan and Zheng, Wenzhao and Huang, Wenke and Xu, Gangwei and Horton, Mike and Si, Yuan and Zhao, Hao and Chen, Long},
  journal={arXiv preprint arXiv:2508.13977},
  year={2025}
}

Join the Community

Contribute to the development of autonomous driving technologies by joining the ROVR community:

Collaborators

We are proud to collaborate with the following partners, with more to be added in the future:


Support & Documentation

For more information on how to get started, explore our documentation or contact us for support: