pythia-1.4b Fine-tuned on sft-hh-data

This model is a fine-tuned version of EleutherAI/pythia-1.4b on the activeDap/sft-hh-data dataset.

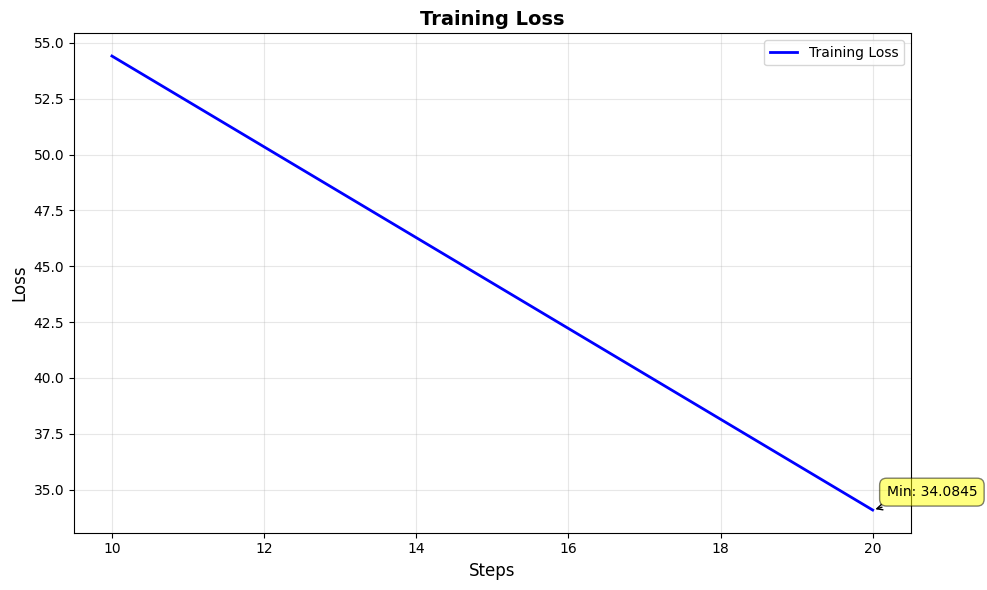

Training Results

Training Statistics

| Metric | Value |
|---|---|
| Total Steps | 20 |
| Final Training Loss | 34.0845 |
| Min Training Loss | 34.0845 |
| Training Runtime | 6.19 s |
| Throughput | 197.71 samples/s |
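As a rough sanity check, the statistics can be related to each other with a little arithmetic. The sketch below makes two assumptions: the reported throughput was averaged over the full runtime, and every optimizer step used the total batch size of 64 from the Training Configuration table. The sample count it prints is therefore only an estimate.

```python
import math

# Values copied from the Training Statistics and Training Configuration tables
runtime_s = 6.19
samples_per_s = 197.71
total_batch_size = 64  # 16 per device x 1 accumulation step x 4 GPUs

# Assumption: throughput was measured over the whole runtime
approx_samples = samples_per_s * runtime_s

# Assumption: each optimizer step consumes one full (or final partial) batch
approx_steps = math.ceil(approx_samples / total_batch_size)

print(f"~{approx_samples:.0f} samples seen, ~{approx_steps} optimizer steps")
```

The estimate of about 20 optimizer steps is consistent with the reported Total Steps for a single epoch.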

Training Configuration

| Parameter | Value |
|---|---|
| Base Model | EleutherAI/pythia-1.4b |
| Dataset | activeDap/sft-hh-data |
| Number of Epochs | 1 |
| Per-Device Batch Size | 16 |
| Gradient Accumulation Steps | 1 |
| Total Batch Size | 64 (16 per device × 1 accumulation step × 4 GPUs) |
| Learning Rate | 2e-05 |
| LR Scheduler | cosine |
| Warmup Ratio | 0.1 |
| Max Sequence Length | 512 tokens |
| Optimizer | adamw_torch_fused |
| Mixed Precision | BF16 |
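To show how the batch-size and scheduler settings combine over this short run, here is a minimal pure-Python sketch. It assumes the Transformers convention of `ceil(total_steps * warmup_ratio)` warmup steps, followed by a half-cosine decay to zero. That is the shape produced by `get_cosine_schedule_with_warmup` with default settings. The helper name `cosine_lr_with_warmup` is hypothetical and is not part of any library.

```python
import math

# Values from the Training Configuration / Training Statistics tables
PER_DEVICE_BATCH = 16
GRAD_ACCUM_STEPS = 1
NUM_GPUS = 4
PEAK_LR = 2e-5
TOTAL_STEPS = 20
WARMUP_RATIO = 0.1

total_batch_size = PER_DEVICE_BATCH * GRAD_ACCUM_STEPS * NUM_GPUS  # 64


def cosine_lr_with_warmup(step: int, peak_lr: float, total_steps: int, warmup_steps: int) -> float:
    """Learning rate at a given optimizer step (hypothetical helper)."""
    if step < warmup_steps:
        # Linear warmup from 0 toward the peak learning rate
        return peak_lr * step / max(1, warmup_steps)
    # Half-cosine decay from the peak down to 0 over the remaining steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return peak_lr * max(0.0, 0.5 * (1.0 + math.cos(math.pi * progress)))


# Assumption: warmup steps are rounded up, as in Transformers' TrainingArguments
warmup_steps = math.ceil(TOTAL_STEPS * WARMUP_RATIO)
schedule = [cosine_lr_with_warmup(s, PEAK_LR, TOTAL_STEPS, warmup_steps) for s in range(TOTAL_STEPS)]

print(f"total batch size: {total_batch_size}, warmup steps: {warmup_steps}")
for step, lr in enumerate(schedule):
    print(f"step {step:2d}: lr = {lr:.2e}")
```

With only 20 steps, warmup covers just the first two updates, so most of the run is spent in the cosine decay phase.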

Usage

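```python
from transformers import AutoModelForCausalLM, AutoTokenizer

model_name = "activeDap/pythia-1.4b_sft-hh-data"
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForCausalLM.from_pretrained(model_name)

# Format input with prompt template
prompt = "What is machine learning?\nAssistant:"
inputs = tokenizer(prompt, return_tensors="pt")

# Generate response; passing pad_token_id avoids the fallback warning
# that generate() emits when no pad token is configured
outputs = model.generate(
    **inputs,
    max_new_tokens=100,
    pad_token_id=tokenizer.eos_token_id,
)

# Decode only the newly generated tokens so the prompt is not echoed back
prompt_length = inputs["input_ids"].shape[1]
response = tokenizer.decode(outputs[0][prompt_length:], skip_special_tokens=True)
print(response)
```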

Training Framework

- Library: Transformers + TRL

- Training Type: Supervised Fine-Tuning (SFT)

- Format: Prompt-completion with Assistant-only loss
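Assistant-only loss means the prompt tokens contribute nothing to the training objective, so only the completion tokens are scored. The minimal sketch below shows the usual label-masking approach. It assumes the inputs are already tokenized to integer IDs and relies on the `-100` ignore index that PyTorch's `CrossEntropyLoss` skips by default. The helper `build_labels` and the toy token IDs are hypothetical, and TRL's actual implementation may differ in detail.

```python
from typing import List, Tuple

IGNORE_INDEX = -100  # default ignore_index of torch.nn.CrossEntropyLoss


def build_labels(
    prompt_ids: List[int], completion_ids: List[int], max_length: int = 512
) -> Tuple[List[int], List[int]]:
    """Concatenate prompt and completion, masking the prompt out of the loss (hypothetical helper)."""
    input_ids = (prompt_ids + completion_ids)[:max_length]
    # Prompt positions get IGNORE_INDEX; completion positions keep their token IDs
    labels = ([IGNORE_INDEX] * len(prompt_ids) + completion_ids)[:max_length]
    return input_ids, labels


# Toy token IDs standing in for "...\nAssistant:" (prompt) and the reply (completion)
prompt_ids = [101, 102, 103, 104]
completion_ids = [201, 202, 203]

input_ids, labels = build_labels(prompt_ids, completion_ids)
print(input_ids)  # [101, 102, 103, 104, 201, 202, 203]
print(labels)     # [-100, -100, -100, -100, 201, 202, 203]
```

Labels stay aligned with `input_ids` here because Transformers causal-LM models shift labels by one position internally when computing the loss. The default `max_length` of 512 mirrors the Max Sequence Length in the configuration table.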

Citation

If you use this model, please cite the original base model and dataset:

```bibtex
@misc{ultrafeedback2023,
  title={UltraFeedback: Boosting Language Models with High-quality Feedback},
  author={Ganqu Cui and Lifan Yuan and Ning Ding and others},
  year={2023},
  eprint={2310.01377},
  archivePrefix={arXiv}
}
```
