Leesplank Noot EuroLLM-1.7B

Dutch text simplification model achieving SARI 66.44 ± 0.32 (beam search, 3 × 1,000 samples) and 27.5 tokens/second with greedy decoding

Model Details

- Model: UWV/leesplank-noot-eurollm-1.7b

- Base: EuroLLM-1.7B (utter-project/EuroLLM-1.7B)

- Task: Dutch text simplification for government communication

- Performance: SARI 66.44 ± 0.32 with beam search; 27.5 tokens/second with greedy decoding (SARI 66.69 in a 100-sample comparison)

- License: Apache 2.0

This model fine-tunes EuroLLM-1.7B on 1.89M Dutch Wikipedia simplifications to produce accessible text for citizens with reading difficulties, compliant with EU accessibility guidelines and the AI Act.

Quick Start

from transformers import pipeline

# Load the fine-tuned EuroLLM model as a chat-style text-generation pipeline
simplifier = pipeline(
    "text-generation",
    model="UWV/leesplank-noot-eurollm-1.7b",
    torch_dtype="auto",
    device_map="auto",
)

# Dutch text to simplify (a definition of the pitch drop experiment)
text = "Een pekdruppelexperiment is een langetermijnexperiment dat het vloeien van een stuk pek meet over vele jaren. Pek is een verzamelnaam voor een aantal vloeistoffen met een zeer hoge viscositeit, zoals teer en bitumen, die er bij kamertemperatuur uitzien als een vaste stof, maar in feite zeer dik vloeibaar zijn en uiteindelijk druppels vormen."

# EuroLLM: user message only, no system prompt
messages = [
    {"role": "user", "content": f"Vereenvoudig: {text}"},
]

# Generate simplified text
output = simplifier(
    messages,
    max_new_tokens=150,
    return_full_text=False,  # return only the newly generated text
    do_sample=False,  # greedy decoding for consistency and speed
    eos_token_id=simplifier.tokenizer.eos_token_id,
)

# Print results
print(f"Original: {text}")
print(f"\nSimplified: {output[0]['generated_text']}")

Architecture & Training

Model Architecture

- Parameters: 1.7B total

- Layers: 24 transformer blocks

- Hidden Size: 2048

- Attention Heads: 16 (with 8 KV heads using GQA)

- MLP Dimension: 5632

- Context Length: 1024 tokens during fine-tuning (training max_seq_length); the base model supports up to 4096

- Precision: bfloat16 (no quantization)

- Activation: SwiGLU

- Position Encoding: RoPE (θ=10000)

- Vocabulary Size: 128,000
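As a sanity check on the numbers above, the total parameter count can be reconstructed from the listed dimensions. This back-of-the-envelope sketch assumes a Llama-style decoder without bias terms, two RMSNorm weight vectors per block plus a final norm, and untied input/output embeddings; these assumptions are ours, not read from the model config.

```python
# Back-of-the-envelope parameter count for the architecture listed above.
# Assumptions (not from the model card): no bias terms, two RMSNorm weight
# vectors per block plus a final norm, untied input embedding and LM head.
VOCAB = 128_000
HIDDEN = 2048
LAYERS = 24
HEADS = 16
KV_HEADS = 8
MLP = 5632

HEAD_DIM = HIDDEN // HEADS  # 128


def count_parameters() -> int:
    attention = (
        HIDDEN * HIDDEN                      # query projection
        + 2 * HIDDEN * KV_HEADS * HEAD_DIM   # key and value projections (GQA)
        + HIDDEN * HIDDEN                    # output projection
    )
    swiglu_mlp = 3 * HIDDEN * MLP            # gate, up and down projections
    norms = 2 * HIDDEN                       # pre-attention and pre-MLP RMSNorm
    per_block = attention + swiglu_mlp + norms
    embeddings = 2 * VOCAB * HIDDEN          # input embedding + untied LM head
    final_norm = HIDDEN
    return LAYERS * per_block + embeddings + final_norm


total = count_parameters()
print(f"{total:,} parameters (~{total / 1e9:.2f}B)")
```

Under these assumptions the sum comes to about 1.66B, in line with the 1.7B label; with tied embeddings it would drop to roughly 1.39B.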

Training Configuration


learning_rate: 7e-5
epochs: 2
warmup_steps: 200
scheduler: cosine
max_gradient_norm: 1.0
optimizer: AdamW (β₁=0.9, β₂=0.999, ε=1e-8)
neftune_noise_alpha: 5
per_device_train_batch_size: 32
gradient_accumulation_steps: 2
packing_enabled: true  # ~5x efficiency gain
eval_steps: 100
save_steps: 200
logging_steps: 10
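The `scheduler: cosine` and `warmup_steps: 200` settings describe a linear warmup followed by a cosine decay. The sketch below reproduces that shape in plain Python. It assumes the decay spans all 2,960 optimizer steps of this run and ends at zero, as the Hugging Face `get_cosine_schedule_with_warmup` helper does with its default half cycle; the card itself only says "cosine".

```python
import math

PEAK_LR = 7e-5
WARMUP_STEPS = 200
TOTAL_STEPS = 2960  # Total Training Steps reported for this run (assumed decay horizon)


def learning_rate(step: int) -> float:
    """Linear warmup to PEAK_LR, then half-cosine decay to 0 at TOTAL_STEPS."""
    if step < WARMUP_STEPS:
        return PEAK_LR * step / WARMUP_STEPS
    progress = (step - WARMUP_STEPS) / (TOTAL_STEPS - WARMUP_STEPS)
    return PEAK_LR * max(0.0, 0.5 * (1.0 + math.cos(math.pi * progress)))


for step in (0, 100, 200, 1580, 2960):
    print(step, f"{learning_rate(step):.2e}")
```

Halfway through the decay phase (step 1580) the rate is back at half the peak, and it reaches zero on the final step.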

Hardware & Distributed Training

- GPUs: 4× AMD MI300X (192GB VRAM each, 768GB total)

- FSDP: Full sharding with activation checkpointing

- Effective Batch Size: 256 (32 × 4 GPUs × 2 gradient accumulation)

- Packing: Enabled for efficient sequence batching

- Total Training Steps: 2,960

- Training Time: 6 hours 47 minutes (2.0 epochs, 2,960 steps)

- Peak Memory: 71GB per GPU
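The effective batch size and the per-step cost follow directly from the figures above. A small arithmetic sketch, with every input copied from this section:

```python
# Arithmetic check of the distributed-training figures listed above.
per_device_batch = 32
num_gpus = 4
grad_accum = 2
total_steps = 2960
epochs = 2
wall_clock_seconds = 6 * 3600 + 47 * 60  # 6 hours 47 minutes

effective_batch = per_device_batch * num_gpus * grad_accum
steps_per_epoch = total_steps // epochs
packed_sequences_per_epoch = steps_per_epoch * effective_batch
seconds_per_step = wall_clock_seconds / total_steps

print(f"effective batch size: {effective_batch}")
print(f"steps per epoch: {steps_per_epoch}")
print(f"packed sequences per epoch: {packed_sequences_per_epoch:,}")
print(f"seconds per optimizer step: {seconds_per_step:.2f}")
```

Because packing is enabled, each of the 256 sequences in a batch can hold several training pairs, which is why the 378,880 packed sequences per epoch are far fewer than the 1,886,765 pairs in the training split.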

Training Progress

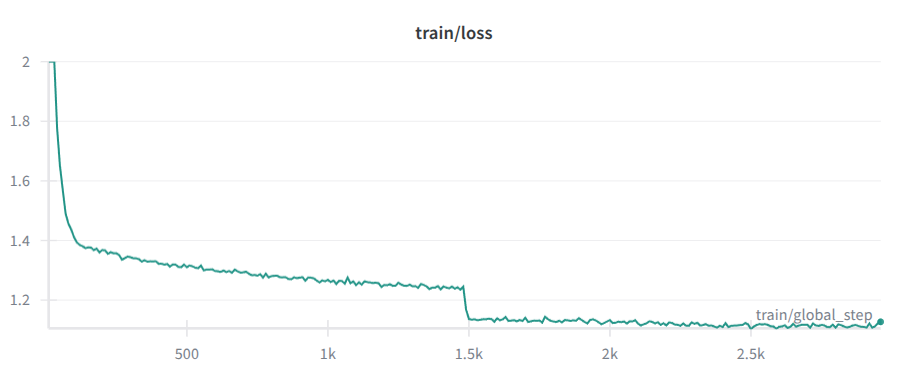

The following charts show the training dynamics over 2,960 steps (2 epochs with sequence packing):

Training loss showing rapid initial convergence from 2.0 to ~1.3 within 500 steps, stabilizing at 1.127


Evaluation loss measured every 100 steps, final value: 1.041


Key observations:

- Rapid convergence in the first 500 steps, most likely because training starts from the pretrained EuroLLM-1.7B weights

- Smooth cosine learning rate schedule with 200 warmup steps

- Consistent improvement throughout both epochs

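- Stable training with no signs of overfitting: the final evaluation loss (1.041) stays below the final training loss (1.127). Part of this gap is expected, since NEFTune noise (neftune_noise_alpha: 5) perturbs the embeddings only during training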
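The configuration sets `neftune_noise_alpha: 5`. NEFTune adds uniform noise to the input embeddings at training time, scaled by α/√(L·d) for sequence length L and embedding dimension d. The sketch below illustrates that rule on a plain nested-list embedding matrix; it demonstrates the technique and is not the training code used for this model.

```python
import math
import random


def add_neftune_noise(embeddings, alpha=5.0, rng=None):
    """Return a noisy copy of a [seq_len][dim] embedding matrix (NEFTune rule).

    Each entry gets independent noise drawn from Uniform(-s, s) with
    s = alpha / sqrt(seq_len * dim). Applied at training time only.
    """
    rng = rng or random.Random()
    seq_len, dim = len(embeddings), len(embeddings[0])
    scale = alpha / math.sqrt(seq_len * dim)
    return [[x + rng.uniform(-scale, scale) for x in row] for row in embeddings]


# Toy example: 4 tokens with 8-dimensional embeddings (the real model uses d = 2048).
clean = [[0.0] * 8 for _ in range(4)]
noisy = add_neftune_noise(clean, alpha=5.0, rng=random.Random(0))
max_shift = max(abs(x) for row in noisy for x in row)
print(f"noise bound: {5.0 / math.sqrt(4 * 8):.4f}, largest shift: {max_shift:.4f}")
```

For a full 1,024-token packed sequence with d = 2048, the same alpha gives a per-entry bound of about 0.0035.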

Dataset Engineering

The source dataset (UWV/Leesplank_NL_wikipedia_simplifications_preprocessed) is pre-sorted by Levenshtein (edit) distance between original and simplified text, grouping similar simplification patterns together.
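For readers unfamiliar with the ordering, the sketch below computes a standard Levenshtein distance and sorts (original, simplified) pairs by it. Whether the dataset was sorted on characters or tokens, and in which direction, is not specified here; the character level, the ascending order and the toy pairs are assumptions for illustration.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions and substitutions."""
    if len(a) < len(b):
        a, b = b, a  # keep the shorter string in the inner loop
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            current.append(min(
                previous[j] + 1,                       # deletion
                current[j - 1] + 1,                    # insertion
                previous[j - 1] + (char_a != char_b),  # substitution
            ))
        previous = current
    return previous[-1]


# Hypothetical toy pairs (original, simplified); not taken from the dataset.
pairs = [
    ("Het experiment duurde vele jaren.", "Het experiment duurde lang."),
    ("De kat zat op de mat.", "De kat zat op de mat."),
    ("Pek is zeer viskeus.", "Pek is heel dik."),
]
pairs.sort(key=lambda pair: levenshtein(pair[0], pair[1]))
for original, simplified in pairs:
    print(levenshtein(original, simplified), "|", original, "->", simplified)
```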

Our training strategy combines two approaches to leverage this structure:

- 70% Ordered Samples: Preserves the Levenshtein distance ordering, allowing the model to learn progressively from similar simplification patterns

- 30% Shuffled Samples: Breaks up these clusters to improve generalization across diverse simplification types
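The card does not describe the mixing procedure beyond the 70/30 split. One plausible reading, sketched below as a hypothetical helper (`mix_ordered_and_shuffled` is our name, not part of any released code), keeps 70% of positions in their Levenshtein order and randomly permutes the items at the remaining 30% of positions.

```python
import random


def mix_ordered_and_shuffled(samples, shuffled_fraction=0.3, seed=42):
    """Hypothetical 70/30 mix: shuffle a random 30% of positions among themselves.

    Items at the other positions keep their original (Levenshtein-sorted) order.
    """
    rng = random.Random(seed)
    mixed = list(samples)
    n_shuffled = round(len(mixed) * shuffled_fraction)
    positions = sorted(rng.sample(range(len(mixed)), n_shuffled))
    items = [mixed[p] for p in positions]
    rng.shuffle(items)
    for position, item in zip(positions, items):
        mixed[position] = item
    return mixed


ordered = list(range(20))  # stand-in for 20 samples already sorted by edit distance
print(mix_ordered_and_shuffled(ordered))
```

An equally valid reading would place a contiguous ordered block before a shuffled block; the positional variant above was chosen only because it keeps the ordered samples spread across the whole epoch.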

Each sample receives one of 20 instruction variants:

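SIMPLIFICATION_PROMPTS = [
    "Vereenvoudig deze tekst:",  # "Simplify this text:"
    "Kun je deze tekst makkelijker maken?",  # "Can you make this text easier?"
    "Herschrijf dit in eenvoudigere taal:",  # "Rewrite this in simpler language:"
    # ... 17 more variations
]

# 20% of samples get the short prompt "Vereenvoudig:" ("Simplify:") for length diversity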
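A minimal sketch of how a training sample could be turned into a user message under this scheme. It assumes the 20% short-prompt rule is applied first and the remaining samples draw uniformly from the variant list; only the three variants shown above are included, since the other 17 are not listed. The helper name `build_user_message` is hypothetical, and the single space between instruction and text mirrors the Quick Start prompt.

```python
import random

# Only the three variants shown above; the remaining 17 are not listed in this card.
SIMPLIFICATION_PROMPTS = [
    "Vereenvoudig deze tekst:",
    "Kun je deze tekst makkelijker maken?",
    "Herschrijf dit in eenvoudigere taal:",
]
SHORT_PROMPT = "Vereenvoudig:"


def build_user_message(text, rng, short_prompt_rate=0.2):
    """Hypothetical helper: pick an instruction and wrap it in a user-only chat turn."""
    if rng.random() < short_prompt_rate:
        instruction = SHORT_PROMPT
    else:
        instruction = rng.choice(SIMPLIFICATION_PROMPTS)
    # No system message, matching how the model was trained.
    return [{"role": "user", "content": f"{instruction} {text}"}]


rng = random.Random(0)
messages = [build_user_message("Pek is zeer viskeus.", rng) for _ in range(10_000)]
short_share = sum(m[0]["content"].startswith("Vereenvoudig: ") for m in messages) / len(messages)
print(f"share with short prompt: {short_share:.3f}")
```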

Dataset statistics:

- Training: 1,886,765 paragraph pairs

- Validation: 1,024 samples (held-out)

- Median Length: 312 tokens (99.4% < 1024)

Performance Metrics

Primary Benchmark (3 runs × 1,000 samples, beam search)

| Metric | EuroLLM-Leesplank |
|---|---|
| SARI Mean (± SD across runs) | 66.44 ± 0.32 |
| 95% CI | [65.66, 67.23] |
| Speed (tok/s) | 19.04 |
| Time/sample | 2.31s |
| Median SARI | 66.08 |
| Interquartile Range (Q1-Q3) | 57.97-73.71 |
| Per-sample Std Dev (range across runs) | 12.56-12.92 |
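The reported interval is consistent with a Student-t 95% confidence interval over the three run means, treating 0.32 as the standard deviation across runs. The sketch below reproduces it under that assumption. The critical value t(0.975, df = 2) ≈ 4.303 is hard-coded to avoid a SciPy dependency, and the small mismatch in the last digit of the lower bound comes from starting from the rounded mean and standard deviation.

```python
import math

mean_sari = 66.44        # mean SARI over runs (table above)
sd_across_runs = 0.32    # assumed: standard deviation of the 3 run means
n_runs = 3
t_critical = 4.303       # two-sided 95% Student t value for n_runs - 1 = 2 degrees of freedom

half_width = t_critical * sd_across_runs / math.sqrt(n_runs)
lower, upper = mean_sari - half_width, mean_sari + half_width
print(f"95% CI: [{lower:.2f}, {upper:.2f}]")
```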

Decoding Strategy Comparison (100 samples)

| Strategy | SARI (mean ± SD) | Speed (tok/s) | Recommendation |
|---|---|---|---|
| Greedy | 66.69 ± 11.48 | 27.50 | ✓ Production |
| Beam (n=5) | 66.81 ± 12.56 | 19.04 | Research only |

Key Finding: Beam search gives a negligible quality gain (+0.12 SARI) at the cost of a 31% drop in throughput (19.04 vs. 27.50 tok/s). Use greedy decoding in production.
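The trade-off can be checked with a few lines of arithmetic on the values from the comparison table:

```python
greedy_sari, greedy_tps = 66.69, 27.50
beam_sari, beam_tps = 66.81, 19.04

sari_gain = beam_sari - greedy_sari
throughput_drop = 1 - beam_tps / greedy_tps  # fraction of tokens/s lost with beam search
time_increase = greedy_tps / beam_tps - 1    # extra wall-clock time per generated token

print(f"SARI gain from beam search: +{sari_gain:.2f}")
print(f"throughput drop: {throughput_drop:.1%}")
print(f"extra time per token: {time_increase:.1%}")
```

The 31% figure is the throughput drop; expressed as wall-clock time, beam search takes roughly 44% longer per generated token.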

Usage Instructions

1. Never Use System Prompts

This model was trained without system prompts. Including one catastrophically degrades performance:

# WRONG - SARI drops to ~28
messages = [
    {"role": "system", "content": "Je bent een assistent..."},
    {"role": "user", "content": "Vereenvoudig: ..."},
]

# CORRECT - SARI 66.44
messages = [
    {"role": "user", "content": "Vereenvoudig: ..."},
]
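When messages come from an upstream chat application that may add its own system turn, a small guard can enforce this rule before the model is called. The helper below is a hypothetical sketch (`drop_system_messages` is our name); it removes system turns and leaves user and assistant turns untouched.

```python
def drop_system_messages(messages):
    """Return a copy of a chat message list without any system turns."""
    return [message for message in messages if message.get("role") != "system"]


messages = [
    {"role": "system", "content": "Je bent een assistent..."},
    {"role": "user", "content": "Vereenvoudig: ..."},
]
print(drop_system_messages(messages))
# [{'role': 'user', 'content': 'Vereenvoudig: ...'}]
```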

2. Optimal Generation Settings

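from transformers import AutoTokenizer

# The tokenizer is needed here for its EOS token id
tokenizer = AutoTokenizer.from_pretrained("UWV/leesplank-noot-eurollm-1.7b")

generation_config = {
    "max_new_tokens": 256,  # Sufficient for paragraph simplification
    "do_sample": False,  # Greedy decoding for speed
    "temperature": 1.0,  # Ignored with do_sample=False
    "top_p": 1.0,  # Ignored with do_sample=False
    "repetition_penalty": 1.0,  # No penalty needed
    "pad_token_id": tokenizer.eos_token_id,  # Reuse EOS as the padding token
}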

Limitations & Biases

Scope Limitations

- Language: Dutch only; the base model is multilingual, but this fine-tune was trained and evaluated on Dutch text only

- Domain: Optimized for Wikipedia/government text, may underperform on medical/legal documents

- Granularity: Paragraph-level simplification only (not word or sentence level)

- Context: 1024 token practical limit (theoretical 4096)
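Because the model works at paragraph level with a practical 1,024-token limit, longer documents are best simplified one paragraph at a time. The sketch below assumes paragraphs are separated by blank lines and accepts any callable with the chat interface of a transformers text-generation pipeline (for example, the pipeline created in the Quick Start). `simplify_document` is a hypothetical helper, and the stand-in generator exists only so the snippet runs without downloading the model.

```python
import re


def simplify_document(document, generate, max_new_tokens=256):
    """Simplify a multi-paragraph document one paragraph at a time.

    `generate` is any callable with the chat interface of a transformers
    text-generation pipeline: it takes a message list plus keyword arguments
    and returns [{"generated_text": ...}] when return_full_text=False.
    """
    paragraphs = [p.strip() for p in re.split(r"\n\s*\n", document) if p.strip()]
    simplified = []
    for paragraph in paragraphs:
        messages = [{"role": "user", "content": f"Vereenvoudig: {paragraph}"}]
        output = generate(
            messages,
            max_new_tokens=max_new_tokens,
            return_full_text=False,
            do_sample=False,
        )
        simplified.append(output[0]["generated_text"].strip())
    return "\n\n".join(simplified)


# Stand-in generator for a dry run; replace it with the real pipeline in practice.
def fake_generate(messages, **kwargs):
    return [{"generated_text": messages[0]["content"].upper()}]


print(simplify_document("Eerste alinea.\n\nTweede alinea.", fake_generate))
```

Paragraphs that on their own exceed the 1,024-token limit would still need further splitting, which this sketch does not attempt.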

Known Biases

- Simplification Style: Tends toward Wikipedia Simple Dutch conventions

- Explanation Preference: Often adds explanatory clauses for complex terms

- Length Bias: May expand text when explaining concepts (simplification ≠ shortening)
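To monitor this length bias in deployment, a simple word-count ratio between output and input can flag unusually expanded or shortened outputs. The thresholds and the example sentences below are illustrative assumptions, not values tuned for or produced by this model.

```python
def length_ratio(original: str, simplified: str) -> float:
    """Ratio of whitespace-separated word counts (simplified / original)."""
    original_words = len(original.split())
    if original_words == 0:
        raise ValueError("original text is empty")
    return len(simplified.split()) / original_words


def flag_length(original: str, simplified: str, low=0.5, high=1.5) -> str:
    """Label an output as 'shortened', 'expanded' or 'ok' using illustrative thresholds."""
    ratio = length_ratio(original, simplified)
    if ratio < low:
        return "shortened"
    if ratio > high:
        return "expanded"
    return "ok"


# Hypothetical example pair, written for illustration.
original = "Pek is zeer viskeus."
simplified = "Pek is een stof die heel dik en stroperig is, een beetje zoals teer."
print(f"{length_ratio(original, simplified):.2f}", flag_length(original, simplified))
```

Since simplification often means adding an explanation, an "expanded" flag is a prompt for review rather than an error in itself.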

Deployment Recommendations

Hardware Requirements

- Minimum: 8GB VRAM (e.g. RTX 3060)

- Recommended: 16GB VRAM or more (e.g. RTX 4080, A10, A40)

- CPU Inference: Possible but ~20x slower
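A rough estimate of inference memory explains why 8GB is enough for single requests. The sketch assumes bfloat16 weights (2 bytes per parameter), about 1.66B parameters, and a key/value cache for 24 layers × 8 KV heads × 128-dimensional heads (2048 hidden size / 16 heads). Activations, framework overhead and CUDA context are ignored, so treat the result as a lower bound.

```python
BYTES_PER_VALUE = 2             # bfloat16
N_PARAMETERS = 1.66e9           # approximate total parameter count
LAYERS, KV_HEADS, HEAD_DIM = 24, 8, 128


def inference_memory_gb(context_tokens: int, batch_size: int = 1) -> float:
    """Weights plus KV cache in GB (1e9 bytes); activations and overhead ignored."""
    weights = N_PARAMETERS * BYTES_PER_VALUE
    # Keys and values: 2 tensors per layer, each KV_HEADS * HEAD_DIM values per token.
    kv_per_token = 2 * LAYERS * KV_HEADS * HEAD_DIM * BYTES_PER_VALUE
    kv_cache = kv_per_token * context_tokens * batch_size
    return (weights + kv_cache) / 1e9


for context in (1024, 4096):
    print(f"{context} tokens: ~{inference_memory_gb(context):.2f} GB")
```

The KV-cache term grows linearly with batch size and context length, so batched serving is where the extra headroom of a 16GB card pays off.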

Citation

@software{leesplank_noot_2025,
  author = {UWV InnovatieHub},
  title = {Leesplank Noot: Dutch Text Simplification},
  year = {2025},
  publisher = {HuggingFace},
  url = {https://huggingface.co/UWV/leesplank-noot-eurollm-1.7b}
}
