BrainExplore: Large-Scale Discovery of Interpretable Visual Representations in the Human Brain

Abstract

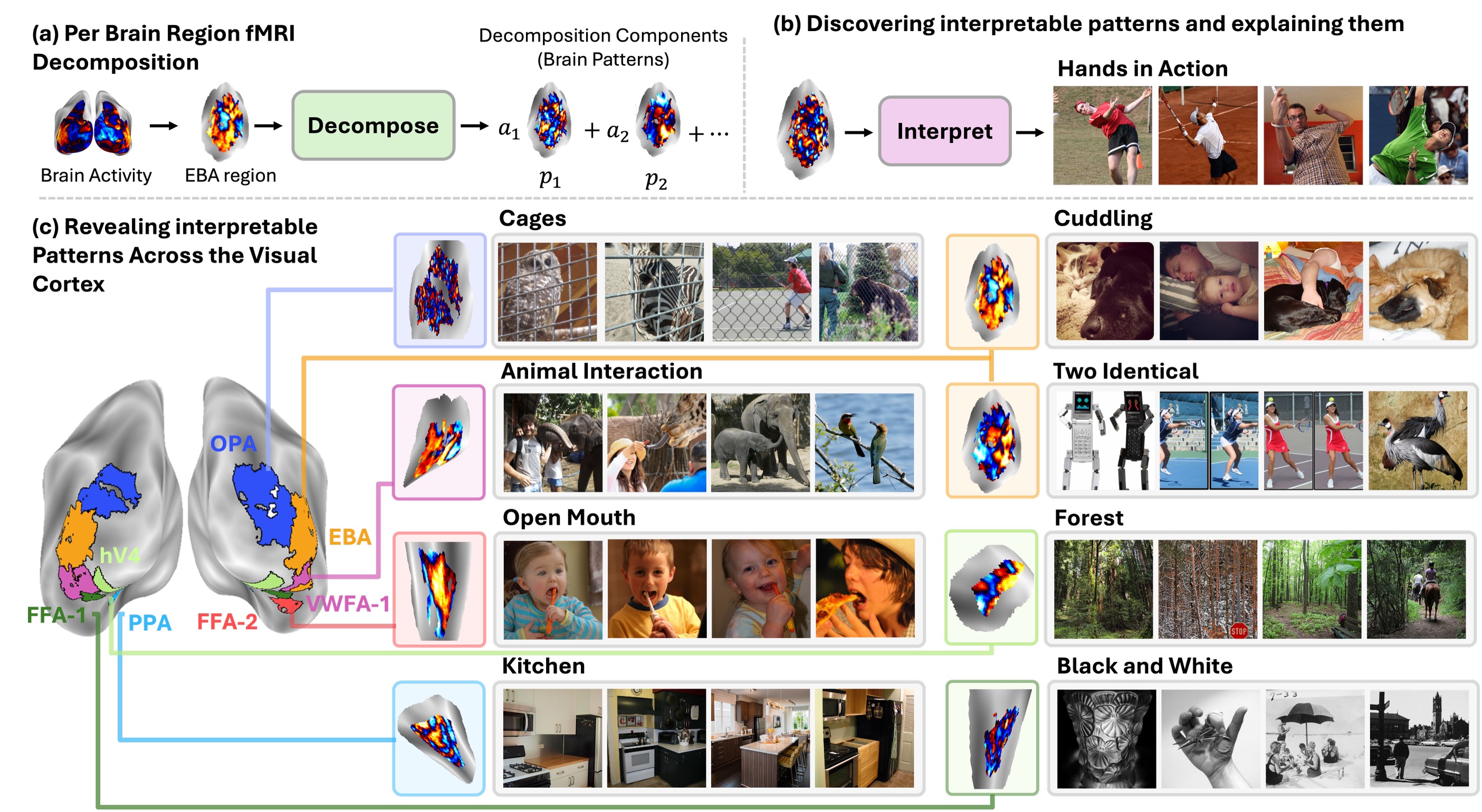

An automated framework uses unsupervised decomposition and natural language descriptions to identify and explain visual representations in human brain fMRI data.

Understanding how the human brain represents visual concepts, and in which brain regions these representations are encoded, remains a long-standing challenge. Decades of work have advanced our understanding of visual representations, yet brain signals remain large and complex, and the space of possible visual concepts is vast. As a result, most studies remain small-scale, rely on manual inspection, focus on specific regions and properties, and rarely include systematic validation. We present a large-scale, automated framework for discovering and explaining visual representations across the human cortex. Our method comprises two main stages. First, we discover candidate interpretable patterns in fMRI activity through unsupervised, data-driven decomposition methods. Next, we explain each pattern by identifying the set of natural images that most strongly elicit it and generating a natural-language description of their shared visual meaning. To scale this process, we introduce an automated pipeline that tests multiple candidate explanations, assigns quantitative reliability scores, and selects the most consistent description for each voxel pattern. Our framework reveals thousands of interpretable patterns spanning many distinct visual concepts, including previously unreported fine-grained representations.
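The abstract does not specify which decomposition or scoring rule is used, so the following is only a minimal NumPy sketch of the two stages under stated assumptions. PCA (computed via SVD) stands in for the unsupervised decomposition of an image-by-voxel response matrix; the "top images" for a pattern are those with the highest component scores; and each candidate description is scored with a hypothetical reliability measure (how much more often its concept appears among the top images than across all images), assuming per-image concept labels are available. The function names (`decompose`, `top_images`, `reliability`, `best_description`, `run_demo`) and the synthetic data are illustrative, not the paper's implementation.

```python
import numpy as np


def decompose(responses, n_components):
    """PCA via SVD, used here as a stand-in unsupervised decomposition.

    responses: (n_images, n_voxels) fMRI response matrix.
    Returns per-image component scores (n_images, n_components) and
    voxel patterns (n_components, n_voxels).
    """
    centered = responses - responses.mean(axis=0, keepdims=True)
    u, s, vt = np.linalg.svd(centered, full_matrices=False)
    image_scores = u[:, :n_components] * s[:n_components]
    voxel_patterns = vt[:n_components].copy()
    # SVD signs are arbitrary: flip each component so that its most extreme
    # image score is positive, which makes "top images" well defined.
    cols = np.arange(n_components)
    peak = np.argmax(np.abs(image_scores), axis=0)
    signs = np.sign(image_scores[peak, cols])
    signs[signs == 0] = 1.0
    return image_scores * signs, voxel_patterns * signs[:, None]


def top_images(image_scores, component, k):
    """Indices of the k images that most strongly drive one component."""
    return np.argsort(-image_scores[:, component])[:k]


def reliability(labels, top_idx):
    """Hypothetical score: concept hit rate in the top images minus its base rate."""
    return float(labels[top_idx].mean() - labels.mean())


def best_description(candidates, top_idx):
    """Pick the candidate description whose concept best matches the top images.

    candidates: dict mapping description -> boolean label per image
    (hypothetical annotations, assumed to be available for every image).
    """
    scores = {name: reliability(labels, top_idx) for name, labels in candidates.items()}
    return max(scores, key=scores.get), scores


def run_demo(seed=0):
    """Synthetic check: one planted voxel pattern is driven only by 'face' images."""
    rng = np.random.default_rng(seed)
    n_images, n_voxels = 200, 50
    is_face = np.zeros(n_images, dtype=bool)
    is_face[rng.choice(n_images, size=40, replace=False)] = True
    is_animal = rng.random(n_images) < 0.3  # concept unrelated to the signal
    face_pattern = rng.normal(size=n_voxels)
    responses = 5.0 * np.outer(is_face, face_pattern) + rng.normal(size=(n_images, n_voxels))

    image_scores, voxel_patterns = decompose(responses, n_components=5)
    top_idx = top_images(image_scores, component=0, k=20)
    best, scores = best_description({"faces": is_face, "animals": is_animal}, top_idx)
    return best, scores, voxel_patterns, face_pattern


best, scores, voxel_patterns, face_pattern = run_demo()
print(best, scores)
```

In this synthetic demo, the first component recovers the planted voxel pattern and "faces" outscores the unrelated "animals" description. The paper's pipeline instead generates and tests natural-language descriptions; the fixed candidate dictionary here only stands in for that step.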

Community

Dive into BrainExplore!

https://huggingface.co/spaces/mcosarinsky/BrainExplore-demo

Our demo lets you discover hundreds of visual concepts hidden in different brain regions and compare how they emerge under multiple decomposition strategies. An intuitive window into the visual cortex.
