add everything but lm eval harness

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .gitattributes +12 -0

- Dockerfile +33 -0

- LICENSE +21 -0

- README.md +328 -3

- data/cached_fineweb100B.py +16 -0

- data/cached_fineweb10B.py +16 -0

- data/cached_finewebedu10B.py +16 -0

- data/fineweb.py +126 -0

- data/requirements.txt +2 -0

- eval.sh +1 -0

- eval_grace.slurm +46 -0

- eval_grace_test.slurm +46 -0

- eval_test.sh +1 -0

- hellaswag.py +285 -0

- img/algo_optimizer.png +3 -0

- img/dofa.jpg +0 -0

- img/fig_optimizer.png +3 -0

- img/fig_tuned_nanogpt.png +3 -0

- img/nanogpt_speedrun51.png +3 -0

- img/nanogpt_speedrun52.png +0 -0

- img/nanogpt_speedrun53.png +3 -0

- img/nanogpt_speedrun54.png +0 -0

- logs/000_c2e7a920-6eca-4f21-8a3c-6022d81a4f29.txt +0 -0

- logs/000_c2e7a920-6eca-4f21-8a3c-6022d81a4f29/hellaswag.json +3 -0

- logs/000_c2e7a920-6eca-4f21-8a3c-6022d81a4f29/hellaswag.yaml +5 -0

- logs/000_c2e7a920-6eca-4f21-8a3c-6022d81a4f29/latest_model.pt +3 -0

- logs/000_c2e7a920-6eca-4f21-8a3c-6022d81a4f29/state_step057344.pt +3 -0

- modded-nanogpt-eval.16715025 +15 -0

- modded-nanogpt-eval.16715025.err +0 -0

- modded-nanogpt-train.16700835 +911 -0

- modded-nanogpt-train.16700835.err +0 -0

- pyproject.toml +19 -0

- records/010425_SoftCap/31d6c427-f1f7-4d8a-91be-a67b5dcd13fd.txt +0 -0

- records/010425_SoftCap/README.md +32 -0

- records/010425_SoftCap/curves_010425.png +3 -0

- records/011325_Fp8LmHead/README.md +1 -0

- records/011325_Fp8LmHead/c51969c2-d04c-40a7-bcea-c092c3c2d11a.txt +0 -0

- records/011625_Sub3Min/1d3bd93b-a69e-4118-aeb8-8184239d7566.txt +0 -0

- records/011625_Sub3Min/README.md +138 -0

- records/011625_Sub3Min/attn-entropy.png +0 -0

- records/011625_Sub3Min/attn-scales-pattern.gif +0 -0

- records/011625_Sub3Min/learned-attn-scales.png +0 -0

- records/011625_Sub3Min/long-short-swa.png +3 -0

- records/011825_GPT2Medium/241dd7a7-3d76-4dce-85a4-7df60387f32a.txt +0 -0

- records/011825_GPT2Medium/main.log +241 -0

- records/012625_BatchSize/0bdd5ee9-ac28-4202-bdf1-c906b102b0ec.txt +0 -0

- records/012625_BatchSize/README.md +38 -0

- records/012625_BatchSize/ablations.png +3 -0

- records/012625_BatchSize/c44090cc-1b99-4c95-8624-38fb4b5834f9.txt +0 -0

- records/012625_BatchSize/val_losses.png +0 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,15 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

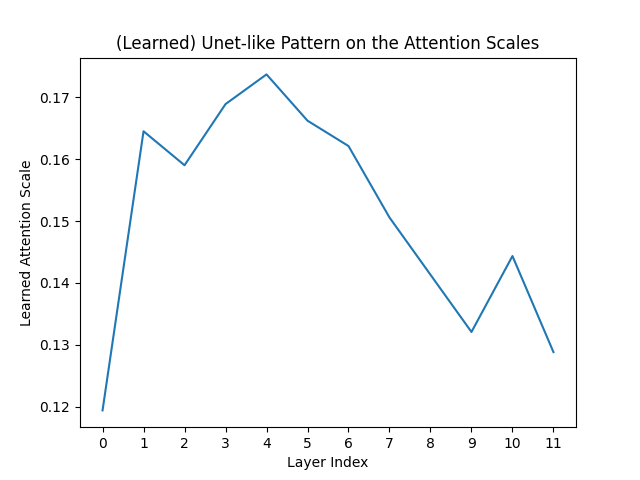

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

img/algo_optimizer.png filter=lfs diff=lfs merge=lfs -text

|

| 37 |

+

img/fig_optimizer.png filter=lfs diff=lfs merge=lfs -text

|

| 38 |

+

img/fig_tuned_nanogpt.png filter=lfs diff=lfs merge=lfs -text

|

| 39 |

+

img/nanogpt_speedrun51.png filter=lfs diff=lfs merge=lfs -text

|

| 40 |

+

img/nanogpt_speedrun53.png filter=lfs diff=lfs merge=lfs -text

|

| 41 |

+

logs/000_c2e7a920-6eca-4f21-8a3c-6022d81a4f29/hellaswag.json filter=lfs diff=lfs merge=lfs -text

|

| 42 |

+

records/010425_SoftCap/curves_010425.png filter=lfs diff=lfs merge=lfs -text

|

| 43 |

+

records/011625_Sub3Min/long-short-swa.png filter=lfs diff=lfs merge=lfs -text

|

| 44 |

+

records/012625_BatchSize/ablations.png filter=lfs diff=lfs merge=lfs -text

|

| 45 |

+

records/102924_Optimizers/nanogpt_speedrun81w.png filter=lfs diff=lfs merge=lfs -text

|

| 46 |

+

records/102924_Optimizers/nanogpt_speedrun82w.png filter=lfs diff=lfs merge=lfs -text

|

| 47 |

+

records/110624_ShortcutsTweaks/nanogpt_speedrun111.png filter=lfs diff=lfs merge=lfs -text

|

Dockerfile

ADDED

|

@@ -0,0 +1,33 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

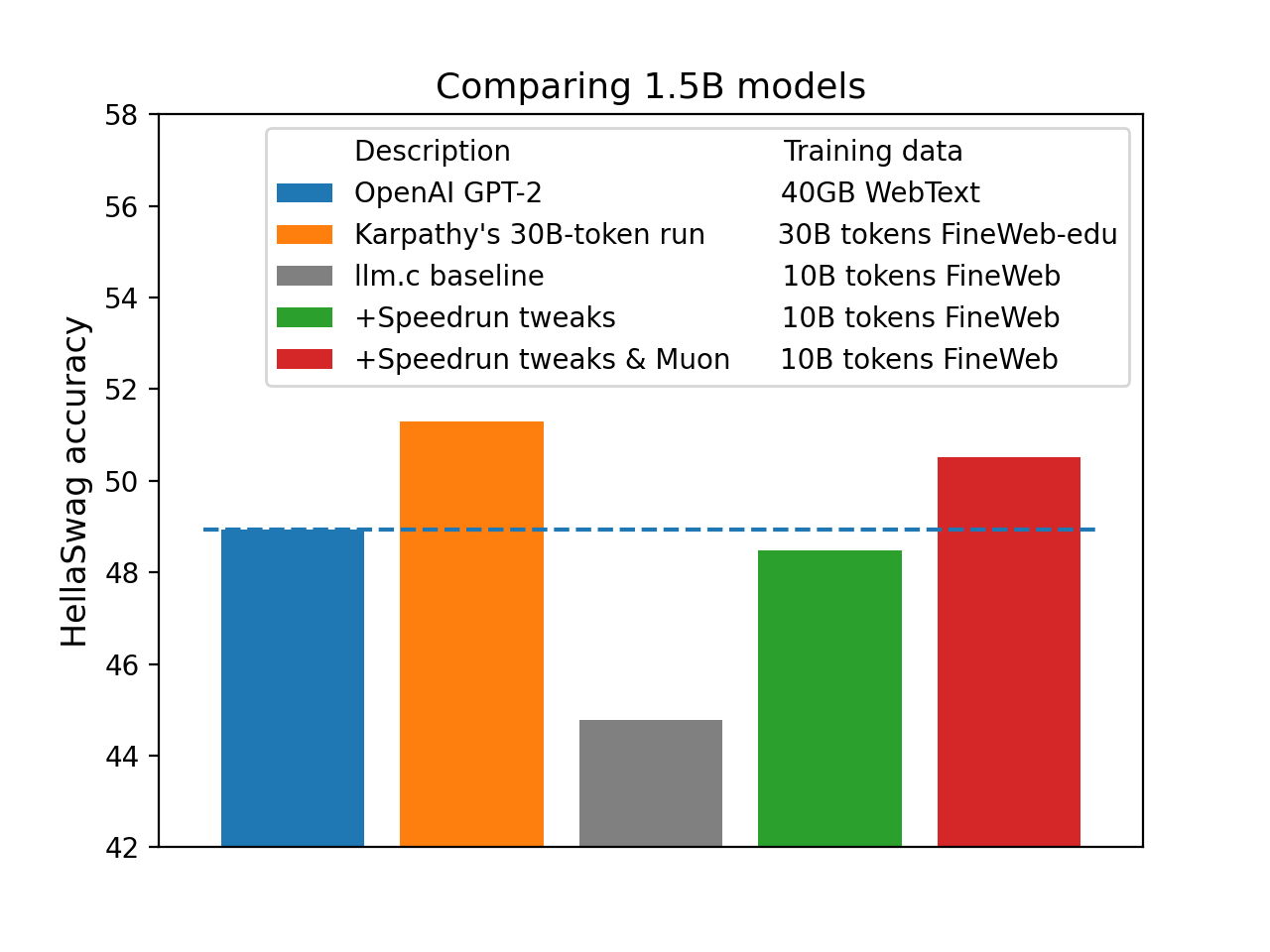

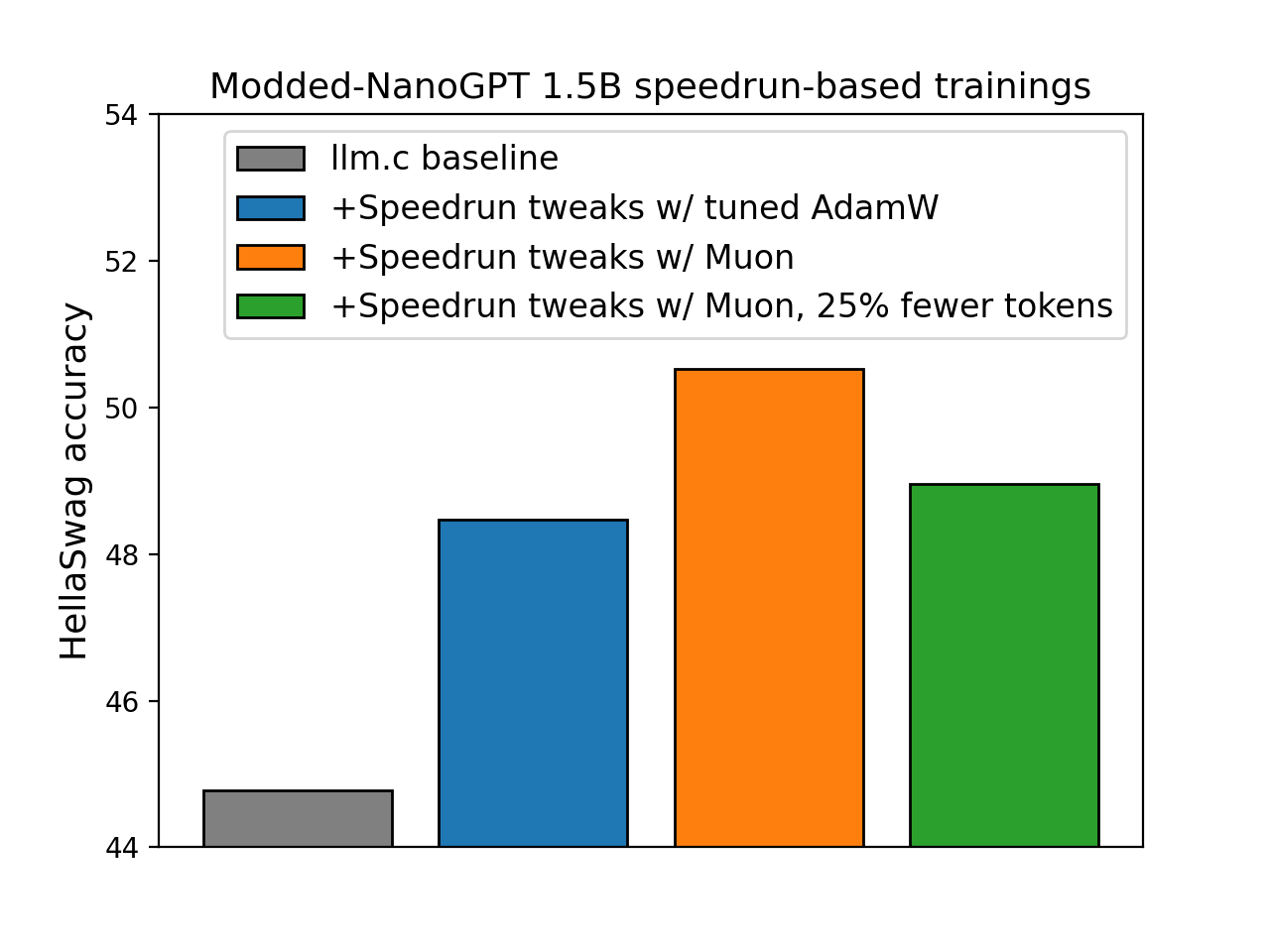

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

FROM nvidia/cuda:12.6.2-cudnn-devel-ubuntu24.04

|

| 2 |

+

|

| 3 |

+

ENV DEBIAN_FRONTEND=noninteractive

|

| 4 |

+

ENV PYTHON_VERSION=3.12.7

|

| 5 |

+

ENV PATH=/usr/local/bin:$PATH

|

| 6 |

+

|

| 7 |

+

RUN apt update && apt install -y --no-install-recommends build-essential libssl-dev zlib1g-dev \

|

| 8 |

+

libbz2-dev libreadline-dev libsqlite3-dev curl git libncursesw5-dev xz-utils tk-dev libxml2-dev \

|

| 9 |

+

libxmlsec1-dev libffi-dev liblzma-dev \

|

| 10 |

+

&& apt clean && rm -rf /var/lib/apt/lists/*

|

| 11 |

+

|

| 12 |

+

RUN curl -O https://www.python.org/ftp/python/${PYTHON_VERSION}/Python-${PYTHON_VERSION}.tgz && \

|

| 13 |

+

tar -xzf Python-${PYTHON_VERSION}.tgz && \

|

| 14 |

+

cd Python-${PYTHON_VERSION} && \

|

| 15 |

+

./configure --enable-optimizations && \

|

| 16 |

+

make -j$(nproc) && \

|

| 17 |

+

make altinstall && \

|

| 18 |

+

cd .. && \

|

| 19 |

+

rm -rf Python-${PYTHON_VERSION} Python-${PYTHON_VERSION}.tgz

|

| 20 |

+

|

| 21 |

+

RUN ln -s /usr/local/bin/python3.12 /usr/local/bin/python && \

|

| 22 |

+

ln -s /usr/local/bin/pip3.12 /usr/local/bin/pip

|

| 23 |

+

|

| 24 |

+

COPY requirements.txt /modded-nanogpt/requirements.txt

|

| 25 |

+

WORKDIR /modded-nanogpt

|

| 26 |

+

|

| 27 |

+

RUN python -m pip install --upgrade pip && \

|

| 28 |

+

pip install -r requirements.txt

|

| 29 |

+

|

| 30 |

+

RUN pip install --pre torch --index-url https://download.pytorch.org/whl/nightly/cu126 --upgrade

|

| 31 |

+

|

| 32 |

+

CMD ["bash"]

|

| 33 |

+

ENTRYPOINT []

|

LICENSE

ADDED

|

@@ -0,0 +1,21 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

MIT License

|

| 2 |

+

|

| 3 |

+

Copyright (c) 2024 Keller Jordan

|

| 4 |

+

|

| 5 |

+

Permission is hereby granted, free of charge, to any person obtaining a copy

|

| 6 |

+

of this software and associated documentation files (the "Software"), to deal

|

| 7 |

+

in the Software without restriction, including without limitation the rights

|

| 8 |

+

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

| 9 |

+

copies of the Software, and to permit persons to whom the Software is

|

| 10 |

+

furnished to do so, subject to the following conditions:

|

| 11 |

+

|

| 12 |

+

The above copyright notice and this permission notice shall be included in all

|

| 13 |

+

copies or substantial portions of the Software.

|

| 14 |

+

|

| 15 |

+

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

| 16 |

+

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

| 17 |

+

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

| 18 |

+

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

| 19 |

+

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

| 20 |

+

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

| 21 |

+

SOFTWARE.

|

README.md

CHANGED

|

@@ -1,3 +1,328 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Modded-NanoGPT

|

| 2 |

+

|

| 3 |

+

This repository hosts the *NanoGPT speedrun*, in which we (collaboratively|competitively) search for the fastest algorithm to use 8 NVIDIA H100 GPUs to train a language model that attains 3.28 cross-entropy loss on the [FineWeb](https://huggingface.co/datasets/HuggingFaceFW/fineweb) validation set.

|

| 4 |

+

|

| 5 |

+

The target (3.28 validation loss on FineWeb) follows Andrej Karpathy's [GPT-2 replication in llm.c, which attains that loss after running for 45 minutes](https://github.com/karpathy/llm.c/discussions/481#:~:text=By%20the%20end%20of%20the%20optimization%20we%27ll%20get%20to%20about%203.29).

|

| 6 |

+

The speedrun code also descends from llm.c's [PyTorch trainer](https://github.com/karpathy/llm.c/blob/master/train_gpt2.py), which itself descends from NanoGPT, hence the name of the repo.

|

| 7 |

+

Thanks to the efforts of many contributors, this repo now contains a training algorithm which attains the target performance in:

|

| 8 |

+

* 3 minutes on 8xH100 (the llm.c GPT-2 replication needed 45)

|

| 9 |

+

* 0.73B tokens (the llm.c GPT-2 replication needed 10B)

|

| 10 |

+

|

| 11 |

+

This improvement in training speed has been brought about by the following techniques:

|

| 12 |

+

* Modernized architecture: Rotary embeddings, QK-Norm, and ReLU²

|

| 13 |

+

* The Muon optimizer [[writeup](https://kellerjordan.github.io/posts/muon/)] [[repo](https://github.com/KellerJordan/Muon)]

|

| 14 |

+

* Untie head from embedding, use FP8 matmul for head, and softcap logits (the latter following Gemma 2)

|

| 15 |

+

* Initialization of projection and classification layers to zero (muP-like)

|

| 16 |

+

* Skip connections from embedding to every block as well as between blocks in U-net pattern

|

| 17 |

+

* Extra embeddings which are mixed into the values in attention layers (inspired by Zhou et al. 2024)

|

| 18 |

+

* FlexAttention with long-short sliding window attention pattern (inspired by Gemma 2) and window size warmup

|

| 19 |

+

|

| 20 |

+

As well as many systems optimizations.

|

| 21 |

+

|

| 22 |

+

Contributors list (growing with each new record): [@bozavlado](https://x.com/bozavlado); [@brendanh0gan](https://x.com/brendanh0gan);

|

| 23 |

+

[@fernbear.bsky.social](https://bsky.app/profile/fernbear.bsky.social); [@Grad62304977](https://x.com/Grad62304977);

|

| 24 |

+

[@jxbz](https://x.com/jxbz); [@kellerjordan0](https://x.com/kellerjordan0);

|

| 25 |

+

[@KoszarskyB](https://x.com/KoszarskyB); [@leloykun](https://x.com/@leloykun);

|

| 26 |

+

[@YouJiacheng](https://x.com/YouJiacheng); [@jadenj3o](https://x.com/jadenj3o);

|

| 27 |

+

[@KonstantinWilleke](https://github.com/KonstantinWilleke), [@alexrgilbert](https://github.com/alexrgilbert), [@adricarda](https://github.com/adricarda),

|

| 28 |

+

[@tuttyfrutyee](https://github.com/tuttyfrutyee), [@vdlad](https://github.com/vdlad);

|

| 29 |

+

[@ryanyang0](https://x.com/ryanyang0)

|

| 30 |

+

|

| 31 |

+

|

| 32 |

+

---

|

| 33 |

+

|

| 34 |

+

## Running the current record

|

| 35 |

+

|

| 36 |

+

To run the current record, run the following commands.

|

| 37 |

+

```bash

|

| 38 |

+

git clone https://github.com/KellerJordan/modded-nanogpt.git && cd modded-nanogpt

|

| 39 |

+

pip install -r requirements.txt

|

| 40 |

+

pip install --pre torch --index-url https://download.pytorch.org/whl/nightly/cu126 --upgrade

|

| 41 |

+

# downloads only the first 800M training tokens to save time

|

| 42 |

+

python data/cached_fineweb10B.py 8

|

| 43 |

+

./run.sh

|

| 44 |

+

```

|

| 45 |

+

|

| 46 |

+

**Note: torch.compile will add around 5 minutes of latency the first time you run the code.**

|

| 47 |

+

|

| 48 |

+

## Alternative: Running with Docker (recommended for precise timing)

|

| 49 |

+

|

| 50 |

+

For cases where CUDA or NCCL versions aren't compatible with your current system setup, Docker can be a helpful alternative.

|

| 51 |

+

This approach standardizes versions for CUDA, NCCL, CUDNN, and Python, reducing dependency issues and simplifying setup.

|

| 52 |

+

Note: an NVIDIA driver must already be installed on the system (useful if only the NVIDIA driver and Docker are available).

|

| 53 |

+

|

| 54 |

+

```bash

|

| 55 |

+

git clone https://github.com/KellerJordan/modded-nanogpt.git && cd modded-nanogpt

|

| 56 |

+

sudo docker build -t modded-nanogpt .

|

| 57 |

+

sudo docker run -it --rm --gpus all -v $(pwd):/modded-nanogpt modded-nanogpt python data/cached_fineweb10B.py 8

|

| 58 |

+

sudo docker run -it --rm --gpus all -v $(pwd):/modded-nanogpt modded-nanogpt sh run.sh

|

| 59 |

+

```

|

| 60 |

+

|

| 61 |

+

To get an interactive docker, you can use

|

| 62 |

+

```bash

|

| 63 |

+

sudo docker run -it --rm --gpus all -v $(pwd):/modded-nanogpt modded-nanogpt bash

|

| 64 |

+

```

|

| 65 |

+

|

| 66 |

+

---

|

| 67 |

+

|

| 68 |

+

## World record history

|

| 69 |

+

|

| 70 |

+

The following is the historical progression of world speed records for the following competitive task:

|

| 71 |

+

|

| 72 |

+

> *Train a neural network to ≤3.28 validation loss on FineWeb using 8x NVIDIA H100s.*

|

| 73 |

+

|

| 74 |

+

Note: The 3.28 target was selected to match [Andrej Karpathy's GPT-2 (small) reproduction](https://github.com/karpathy/llm.c/discussions/481).

|

| 75 |

+

|

| 76 |

+

| # | Record time | Description | Date | Log | Contributors |

|

| 77 |

+

| - | - | - | - | - | - |

|

| 78 |

+

1 | 45 minutes | [llm.c baseline](https://github.com/karpathy/llm.c/discussions/481) | 05/28/24 | [log](records/101324_llmc/main.log) | @karpathy, llm.c contributors

|

| 79 |

+

2 | 31.4 minutes | [Tuned learning rate & rotary embeddings](https://x.com/kellerjordan0/status/1798863559243513937) | 06/06/24 | [log](records/060624_AdamW/f66d43d7-e449-4029-8adf-e8537bab49ea.log) | @kellerjordan0

|

| 80 |

+

3 | 24.9 minutes | [Introduced the Muon optimizer](https://x.com/kellerjordan0/status/1842300916864844014) | 10/04/24 | none | @kellerjordan0, @jxbz

|

| 81 |

+

4 | 22.3 minutes | [Muon improvements](https://x.com/kellerjordan0/status/1844820919061287009) | 10/11/24 | [log](records/101024_Muon/eb5659d0-fb6a-49e5-a311-f1f89412f726.txt) | @kellerjordan0, @bozavlado

|

| 82 |

+

5 | 15.2 minutes | [Pad embeddings, ReLU², zero-init projections, QK-norm](https://x.com/kellerjordan0/status/1845865698532450646) | 10/14/24 | [log](records/101424_ModernArch/dabaaddd-237c-4ec9-939d-6608a9ed5e27.txt) | @Grad62304977, @kellerjordan0

|

| 83 |

+

6 | 13.1 minutes | [Distributed the overhead of Muon](https://x.com/kellerjordan0/status/1847291684016783746) | 10/18/24 | [log](records/101724_DistributedMuon/22d24867-eb5a-4fcc-ae2c-263d0277dfd1.txt) | @kellerjordan0

|

| 84 |

+

7 | 12.0 minutes | [Upgraded PyTorch 2.5.0](https://x.com/kellerjordan0/status/1847358578686152764) | 10/18/24 | [log](records/101824_PyTorch25/d4bfb25f-688d-4da5-8743-33926fad4842.txt) | @kellerjordan0

|

| 85 |

+

8 | 10.8 minutes | [Untied embedding and head](https://x.com/kellerjordan0/status/1853188916704387239) | 11/03/24 | [log](records/110324_UntieEmbed/d6b50d71-f419-4d26-bb39-a60d55ae7a04.txt) | @Grad62304977, @kellerjordan0

|

| 86 |

+

9 | 8.2 minutes | [Value and embedding skip connections, momentum warmup, logit softcap](https://x.com/kellerjordan0/status/1854296101303800108) | 11/06/24 | [log](records/110624_ShortcutsTweaks/dd7304a6-cc43-4d5e-adb8-c070111464a1.txt) | @Grad62304977, @kellerjordan0

|

| 87 |

+

10 | 7.8 minutes | [Bfloat16 activations](https://x.com/kellerjordan0/status/1855267054774865980) | 11/08/24 | [log](records/110824_CastBf16/a833bed8-2fa8-4cfe-af05-58c1cc48bc30.txt) | @kellerjordan0

|

| 88 |

+

11 | 7.2 minutes | [U-net pattern skip connections & double lr](https://x.com/kellerjordan0/status/1856053121103093922) | 11/10/24 | [log](records/111024_UNetDoubleLr/c87bb826-797b-4f37-98c7-d3a5dad2de74.txt) | @brendanh0gan

|

| 89 |

+

12 | 5.03 minutes | [1024-ctx dense causal attention → 64K-ctx FlexAttention](https://x.com/kellerjordan0/status/1859331370268623321) | 11/19/24 | [log](records/111924_FlexAttention/8384493d-dba9-4991-b16b-8696953f5e6d.txt) | @KoszarskyB

|

| 90 |

+

13 | 4.66 minutes | [Attention window warmup](https://x.com/hi_tysam/status/1860851011797053450) | 11/24/24 | [log](records/112424_WindowWarmup/cf9e4571-c5fc-4323-abf3-a98d862ec6c8.txt) | @fernbear.bsky.social

|

| 91 |

+

14 | 4.41 minutes | [Value Embeddings](https://x.com/KoszarskyB/status/1864746625572257852) | 12/04/24 | [log](records/120424_ValueEmbed) | @KoszarskyB

|

| 92 |

+

15 | 3.95 minutes | [U-net pattern value embeddings, assorted code optimizations](https://x.com/YouJiacheng/status/1865761473886347747) | 12/08/24 | [log](records/120824_UNetValueEmbedsTweaks) | @leloykun, @YouJiacheng

|

| 93 |

+

16 | 3.80 minutes | [Split value embeddings, block sliding window, separate block mask](https://x.com/YouJiacheng/status/1866734331559071981) | 12/10/24 | [log](records/121024_MFUTweaks) | @YouJiacheng

|

| 94 |

+

17 | 3.57 minutes | [Sparsify value embeddings, improve rotary embeddings, drop an attn layer](https://x.com/YouJiacheng/status/1868938024731787640) | 12/17/24 | [log](records/121724_SparsifyEmbeds) | @YouJiacheng

|

| 95 |

+

18 | 3.4 minutes | [Lower logit softcap from 30 to 15](https://x.com/kellerjordan0/status/1876048851158880624) | 01/04/25 | [log](records/010425_SoftCap/31d6c427-f1f7-4d8a-91be-a67b5dcd13fd.txt) | @KoszarskyB

|

| 96 |

+

19 | 3.142 minutes | [FP8 head, offset logits, lr decay to 0.1 instead of 0.0](https://x.com/YouJiacheng/status/1878827972519772241) | 01/13/25 | [log](records/011325_Fp8LmHead/c51969c2-d04c-40a7-bcea-c092c3c2d11a.txt) | @YouJiacheng

|

| 97 |

+

20 | 2.992 minutes | [Merged QKV weights, long-short attention, attention scale, lower Adam epsilon, batched Muon](https://x.com/leloykun/status/1880301753213809016) | 01/16/25 | [log](records/011625_Sub3Min/1d3bd93b-a69e-4118-aeb8-8184239d7566.txt) | @leloykun, @fernbear.bsky.social, @YouJiacheng, @brendanh0gan, @scottjmaddox, @Grad62304977

|

| 98 |

+

21 | 2.933 minutes | [Reduced batch size](https://x.com/leloykun/status/1885640350368420160) | 01/26/25 | [log](records/012625_BatchSize/c44090cc-1b99-4c95-8624-38fb4b5834f9.txt) | @leloykun

|

| 99 |

+

21 | 2.997 minutes | 21st record with new timing | 02/01/25 | [log](records/020125_RuleTweak/eff63a8c-2f7e-4fc5-97ce-7f600dae0bc7.txt) | not a new record, just re-timing #21 with the [updated rules](#timing-change-after-record-21)

|

| 100 |

+

21 | 3.014 minutes | 21st record with latest torch | 05/24/25 | [log](records/052425_StableTorch/89d9f224-3b01-4581-966e-358d692335e0.txt) | not a new record, just re-timing #21 with latest torch

|

| 101 |

+

22 | 2.990 minutes | [Faster gradient all-reduce](https://x.com/KonstantinWille/status/1927137223238909969) | 05/24/25 | [log](records/052425_FasterReduce/23f40b75-06fb-4c3f-87a8-743524769a35.txt) | @KonstantinWilleke, @alexrgilbert, @adricarda, @tuttyfrutyee, @vdlad; The Enigma project

|

| 102 |

+

23 | 2.979 minutes | [Overlap computation and gradient communication](https://x.com/kellerjordan0/status/1927460573098262616) | 05/25/25 | [log](records/052525_EvenFasterReduce/6ae86d05-5cb2-4e40-a512-63246fd08e45.txt) | @ryanyang0

|

| 103 |

+

24 | 2.966 minutes | Replace gradient all_reduce with reduce_scatter | 05/30/25 | [log](records/053025_noallreduce/8054c239-3a18-499e-b0c8-dbd27cb4b3ab.txt) | @vagrawal

|

| 104 |

+

25 | 2.896 minutes | Upgrade PyTorch to 2.9.0.dev20250713+cu126 | 07/13/25 | [log](records/071325_UpgradeTorch190/692f80e0-5e64-4819-97d4-0dc83b7106b9.txt ) | @kellerjordan0

|

| 105 |

+

26 | 2.863 minutes | Align training batch starts with EoS, increase cooldown frac to .45 | 07/13/25 | [log](records/071225_BosAlign/c1fd8a38-bb9f-45c4-8af0-d37f70c993f3.txt) | @ClassicLarry

|

| 106 |

+

|

| 107 |

+

## Rules

|

| 108 |

+

|

| 109 |

+

The only rules are that new records must:

|

| 110 |

+

|

| 111 |

+

1. Not modify the train or validation data pipelines. (You can change the batch size, sequence length, attention structure etc.; just don't change the underlying streams of tokens.)

|

| 112 |

+

2. Attain ≤3.28 mean val loss. (Due to inter-run variance, submissions must provide enough run logs to attain a statistical significance level of p<0.01 that their mean val loss is ≤3.28. Example code to compute p-value can be found [here](records/010425_SoftCap#softer-softcap). For submissions which improve speed by optimizing the systems performance, without touching the ML, this requirement is waived.)

|

| 113 |

+

3. Not use any extra `torch._inductor.config` or `torch.compile` flags. (These can save a few seconds, but they can also make compilation take >30min. This rule was introduced after the 21st record.)

|

| 114 |

+

|

| 115 |

+

> Note: `torch._inductor.config.coordinate_descent_tuning` is allowed for GPT-2 Medium track (a.k.a. 2.92 track).

|

| 116 |

+

|

| 117 |

+

Other than that, anything and everything is fair game!

|

| 118 |

+

|

| 119 |

+

[further clarifications](https://github.com/KellerJordan/modded-nanogpt/discussions/23?sort=new#discussioncomment-12109560)

|

| 120 |

+

|

| 121 |

+

---

|

| 122 |

+

|

| 123 |

+

### Comment on the target metric

|

| 124 |

+

|

| 125 |

+

The target metric is *cross-entropy loss on the FineWeb val set*. To speak mathematically, the goal of the speedrun is *to obtain a probability model of language which assigns a probability of at least `math.exp(-3.28 * 10485760)` to the first 10,485,760 tokens of the FineWeb valset. Hence, e.g., we allow evaluation at any sequence length, so long as we still have a valid probability model of language.

|

| 126 |

+

|

| 127 |

+

---

|

| 128 |

+

|

| 129 |

+

### Timing change after record 21

|

| 130 |

+

|

| 131 |

+

After the 21st record, we made two changes to the timing. First, there used to be an initial "grace period" of 10 untimed steps to allow kernel warmup. We replaced this with an explicit kernel-warmup section which is untimed and uses dummy data. This results in an extra runtime of 850ms from the 10 extra timed steps.

|

| 132 |

+

Second, we banned the use of `torch._inductor.config.coordinate_descent_tuning`. This saves ~25min of untimed pre-run compilation, but results in an extra runtime of ~3s.

|

| 133 |

+

|

| 134 |

+

<!--Note: The original llm.c baseline is intended to be closer to a replication of GPT-2 than to an optimized LLM training.

|

| 135 |

+

So it's no surprise that there is room to improve; as @karpathy has said, 'llm.c still has a lot of pending optimizations.'

|

| 136 |

+

In addition, many of the techniques used in these records are completely standard, such as rotary embeddings.

|

| 137 |

+

The goal of this benchmark/speedrun is simply to find out which techniques actually work, and maybe come up with some new ones.-->

|

| 138 |

+

<!--The goal of this benchmark is simply to find out all the techniques which actually work, because I'm going crazy reading all these

|

| 139 |

+

LLM training papers

|

| 140 |

+

which claim a huge benefit but then use their own idiosyncratic non-competitive benchmark and therefore no one in the community has any idea if it's legit for months.-->

|

| 141 |

+

<!--[LLM](https://arxiv.org/abs/2305.14342) [training](https://arxiv.org/abs/2402.17764) [papers](https://arxiv.org/abs/2410.01131)-->

|

| 142 |

+

<!--I mean hello??? We're in a completely empirical field; it is insane to not have a benchmark. Ideally everyone uses the same LLM training benchmark,

|

| 143 |

+

and then reviewing LLM training papers becomes as simple as checking if they beat the benchmark. It's not like this would be unprecedented, that's how things

|

| 144 |

+

were in the ImageNet days.

|

| 145 |

+

The only possible 'benefit' I can think of for any empirical field to abandon benchmarks is that it would make it easier to publish false results. Oh, I guess that's why it happened.

|

| 146 |

+

Hilarious to think about how, in the often-commented-upon and ongoing collapse of the peer review system, people blame the *reviewers* --

|

| 147 |

+

yeah, those guys doing free labor who everyone constantly musters all of their intelligence to lie to, it's *their* fault! My bad, you caught me monologuing.-->

|

| 148 |

+

|

| 149 |

+

---

|

| 150 |

+

|

| 151 |

+

### Important note about records 22-25

|

| 152 |

+

|

| 153 |

+

Thanks to the statistical testing of [@agrawal](https://www.github.com/agrawal) (holder of the 24th record), we have learned that records 23, 24, and in all likelihood 22 and 25, actually attain a mean loss of 3.281, which is slightly above the 3.28 target.

|

| 154 |

+

Therefore if we were to completely adhere to the speedrun rules, we would have to deny that these are valid records.

|

| 155 |

+

However, we have decided to leave them in place as valid, because of the following two reasons: (a) the extra loss is most likely my (@kellerjordan0) own fault rather than that of the records, and (b) it is most likely easily addressable.

|

| 156 |

+

|

| 157 |

+

Here's what happened: Records #22 to #25 each change only the systems/implementation of the speedrun.

|

| 158 |

+

Therefore, the requirement to do statistical testing to confirm they hit the target was waived, since in theory they should have hit it automatically, by virtue of the fact that they didn't touch the ML (i.e., they didn't change the architecture, learning rate, etc.).

|

| 159 |

+

|

| 160 |

+

So if these records shouldn't have changed the ML, what explains the regression in val loss?

|

| 161 |

+

We think that most likely, the answer is that this regression was indeed not introduced by any of these records. Instead, it was

|

| 162 |

+

probably caused by my own non-record in which I retimed record #21 with newest torch,

|

| 163 |

+

because in this non-record I also changed the constants used to cast the lm_head to fp8.

|

| 164 |

+

I thought that this change should cause only a (small) strict improvement, but apparently that was not the case.

|

| 165 |

+

|

| 166 |

+

Therefore, it is probable that each of records #22-25 could be easily made fully valid by simply reverting the change I made to those constants.

|

| 167 |

+

Therefore they shall be upheld as valid records.

|

| 168 |

+

|

| 169 |

+

For the future, fortunately record #26 brought the speedrun back into the green in terms of <3.28 loss, so (with high p-value) it should be in a good state now.

|

| 170 |

+

|

| 171 |

+

---

|

| 172 |

+

|

| 173 |

+

### Notable attempts & forks

|

| 174 |

+

|

| 175 |

+

**Notable runs:**

|

| 176 |

+

|

| 177 |

+

* [@alexjc's 01/20/2025 2.77-minute TokenMonster-based record](https://x.com/alexjc/status/1881410039639863622).

|

| 178 |

+

This record is technically outside the rules of the speedrun, since we specified that the train/val tokens must be kept fixed.

|

| 179 |

+

However, it's very interesting, and worth including. The run is not more data-efficient; rather, the speedup comes from the improved tokenizer allowing

|

| 180 |

+

the vocabulary size to be reduced (nearly halved!) while preserving the same bytes-per-token, which saves lots of parameters and FLOPs in the head and embeddings.

|

| 181 |

+

|

| 182 |

+

**Notable forks:**

|

| 183 |

+

* [https://github.com/BlinkDL/modded-nanogpt-rwkv](https://github.com/BlinkDL/modded-nanogpt-rwkv)

|

| 184 |

+

* [https://github.com/nikhilvyas/modded-nanogpt-SOAP](https://github.com/nikhilvyas/modded-nanogpt-SOAP)

|

| 185 |

+

|

| 186 |

+

---

|

| 187 |

+

|

| 188 |

+

## Speedrun track 2: GPT-2 Medium

|

| 189 |

+

|

| 190 |

+

The target loss for this track is lowered from 3.28 to 2.92, as per Andrej Karpathy's 350M-parameter llm.c baseline.

|

| 191 |

+

This baseline generates a model with performance similar to the original GPT-2 Medium, whereas the first track's baseline generates a model on par with GPT-2 Small.

|

| 192 |

+

All other rules remain the same.

|

| 193 |

+

|

| 194 |

+

> Note: `torch._inductor.config.coordinate_descent_tuning` is turned on after the record 6 (*).

|

| 195 |

+

|

| 196 |

+

| # | Record time | Description | Date | Log | Contributors |

|

| 197 |

+

| - | - | - | - | - | - |

|

| 198 |

+

1 | 5.8 hours | [llm.c baseline (350M parameters)](https://github.com/karpathy/llm.c/discussions/481) | 05/28/24 | [log](records/011825_GPT2Medium/main.log) | @karpathy, llm.c contributors

|

| 199 |

+

2 | 29.3 minutes | [Initial record based on scaling up the GPT-2 small track speedrun](https://x.com/kellerjordan0/status/1881959719012847703) | 01/18/25 | [log](records/011825_GPT2Medium/241dd7a7-3d76-4dce-85a4-7df60387f32a.txt) | @kellerjordan0

|

| 200 |

+

3 | 28.1 minutes | [Added standard weight decay](https://x.com/kellerjordan0/status/1888320690543284449) | 02/08/25 | [log](records/020825_GPT2MediumWeightDecay/b01743db-605c-4326-b5b1-d388ee5bebc5.txt) | @kellerjordan0

|

| 201 |

+

4 | 27.7 minutes | [Tuned Muon Newton-Schulz coefficients](https://x.com/leloykun/status/1892793848163946799) | 02/14/25 | [log](records/021425_GPT2MediumOptCoeffs/1baa66b2-bff7-4850-aced-d63885ffb4b6.txt) | @leloykun

|

| 202 |

+

5 | 27.2 minutes | [Increased learning rate cooldown phase duration](records/030625_GPT2MediumLongerCooldown/779c041a-2a37-45d2-a18b-ec0f223c2bb7.txt) | 03/06/25 | [log](records/030625_GPT2MediumLongerCooldown/779c041a-2a37-45d2-a18b-ec0f223c2bb7.txt) | @YouJiacheng

|

| 203 |

+

6 | 25.95 minutes* | [2x MLP wd, qkv norm, all_reduce/opt.step() overlap, optimized skip pattern](https://x.com/YouJiacheng/status/1905861218138804534) | 03/25/25 | [log](records/032525_GPT2MediumArchOptTweaks/train_gpt-20250329.txt) | @YouJiacheng

|

| 204 |

+

7 | 25.29 minutes | [Remove FP8 head; ISRU logits softcap; New sharded mixed precision Muon; merge weights](https://x.com/YouJiacheng/status/1912570883878842527) | 04/16/25 | [log](records/041625_GPT2Medium_Record7/223_3310d0b1-b24d-48ee-899f-d5c2a254a195.txt) | @YouJiacheng

|

| 205 |

+

8 | 24.50 minutes | [Cubic sliding window size schedule, 2× max window size (24.84 minutes)](https://x.com/jadenj3o/status/1914893086276169754) [24.5min repro](https://x.com/YouJiacheng/status/1915667616913645985) | 04/22/25 | [log](records/042225_GPT2Medium_Record8/075_640429f2-e726-4e83-aa27-684626239ffc.txt) | @jadenj3o

|

| 206 |

+

|

| 207 |

+

---

|

| 208 |

+

|

| 209 |

+

### Q: What is the point of NanoGPT speedrunning?

|

| 210 |

+

|

| 211 |

+

A: The officially stated goal of NanoGPT speedrunning is as follows: `gotta go fast`. But for something a little more verbose involving an argument for good benchmarking, here's some kind of manifesto, adorned with a blessing from the master. [https://x.com/karpathy/status/1846790537262571739](https://x.com/karpathy/status/1846790537262571739)

|

| 212 |

+

|

| 213 |

+

### Q: What makes "NanoGPT speedrunning" not just another idiosyncratic benchmark?

|

| 214 |

+

|

| 215 |

+

A: Because it is a *competitive* benchmark. In particular, if you attain a new speed record (using whatever method you want), there is an open invitation for you

|

| 216 |

+

to post that record (on arXiv or X) and thereby vacuum up all the clout for yourself. I will even help you do it by reposting you as much as I can.

|

| 217 |

+

|

| 218 |

+

<!--On the contrary, for example, the benchmark used in the [Sophia](https://arxiv.org/abs/2305.14342) paper does *not* have this property.

|

| 219 |

+

There is no such open invitation for anyone to compete on the benchmark they used. In particular, if, for a random and definitely not weirdly specific example, you happen to find better AdamW hyperparameters for their training setup than

|

| 220 |

+

the ones they used which significantly close the gap between AdamW and their proposed optimizer,

|

| 221 |

+

then there is no clear path for you to publish that result in *any* form.

|

| 222 |

+

You could try posting it on X.com, but then you would be risking being perceived as aggressive/confrontational, which is *not a good look* in this racket.

|

| 223 |

+

So if you're rational, the result probably just dies with you and no one else learns anything

|

| 224 |

+

(unless you're in a frontier lab, in which case you can do a nice internal writeup. Boy I'd love to get my hands on those writeups).-->

|

| 225 |

+

|

| 226 |

+

["Artificial intelligence advances by inventing games and gloating to goad others to play" - Professor Ben Recht](https://www.argmin.net/p/too-much-information)

|

| 227 |

+

|

| 228 |

+

### Q: NanoGPT speedrunning is cool and all, but meh it probably won't scale and is just overfitting to val loss

|

| 229 |

+

|

| 230 |

+

A: This is hard to refute, since "at scale" is an infinite category (what if the methods stop working only for >100T models?), making it impossible to fully prove.

|

| 231 |

+

Also, I would agree that some of the methods used in the speedrun are unlikely to scale, particularly those which *impose additional structure* on the network, such as logit softcapping.

|

| 232 |

+

But if the reader cares about 1.5B models, they might be convinced by this result:

|

| 233 |

+

|

| 234 |

+

*Straightforwardly scaling up the speedrun (10/18/24 version) to 1.5B parameters yields a model with GPT-2 (1.5B)-level HellaSwag performance 2.5x more cheaply than [@karpathy's baseline](https://github.com/karpathy/llm.c/discussions/677) ($233 instead of $576):*

|

| 235 |

+

|

| 236 |

+

|

| 237 |

+

[[reproducible log](https://github.com/KellerJordan/modded-nanogpt/blob/master/records/102024_ScaleUp1B/ad8d7ae5-7b2d-4ee9-bc52-f912e9174d7a.txt)]

|

| 238 |

+

|

| 239 |

+

|

| 240 |

+

---

|

| 241 |

+

|

| 242 |

+

## [Muon optimizer](https://github.com/KellerJordan/Muon)

|

| 243 |

+

|

| 244 |

+

Muon is defined as follows:

|

| 245 |

+

|

| 246 |

+

|

| 247 |

+

|

| 248 |

+

Where NewtonSchulz5 is the following Newton-Schulz iteration [2, 3], which approximately replaces `G` with `U @ V.T` where `U, S, V = G.svd()`.

|

| 249 |

+

```python

|

| 250 |

+

@torch.compile

|

| 251 |

+

def zeroth_power_via_newtonschulz5(G, steps=5, eps=1e-7):

|

| 252 |

+

assert len(G.shape) == 2

|

| 253 |

+

a, b, c = (3.4445, -4.7750, 2.0315)

|

| 254 |

+

X = G.bfloat16() / (G.norm() + eps)

|

| 255 |

+

if G.size(0) > G.size(1):

|

| 256 |

+

X = X.T

|

| 257 |

+

for _ in range(steps):

|

| 258 |

+

A = X @ X.T

|

| 259 |

+

B = b * A + c * A @ A

|

| 260 |

+

X = a * X + B @ X

|

| 261 |

+

if G.size(0) > G.size(1):

|

| 262 |

+

X = X.T

|

| 263 |

+

return X.to(G.dtype)

|

| 264 |

+

```

|

| 265 |

+

|

| 266 |

+

For this training scenario, Muon has the following favorable properties:

|

| 267 |

+

* Lower memory usage than Adam

|

| 268 |

+

* ~1.5x better sample-efficiency

|

| 269 |

+

* <2% wallclock overhead

|

| 270 |

+

|

| 271 |

+

|

| 272 |

+

### Provenance

|

| 273 |

+

|

| 274 |

+

Many of the choices made to generate this optimizer were obtained experimentally by our pursuit of [CIFAR-10 speedrunning](https://github.com/KellerJordan/cifar10-airbench).

|

| 275 |

+

In particular, we experimentally obtained the following practices:

|

| 276 |

+

* Using Nesterov momentum inside the update, with orthogonalization applied after momentum.

|

| 277 |

+

* Using a specifically quintic Newton-Schulz iteration as the method of orthogonalization.

|

| 278 |

+

* Using non-convergent coefficients for the quintic polynomial in order to maximize slope at zero, and thereby minimize the number of necessary Newton-Schulz iterations.

|

| 279 |

+

It turns out that the variance doesn't actually matter that much, so we end up with a quintic that rapidly converges to the range 0.68, 1.13 upon repeated application, rather than converging more slowly to 1.

|

| 280 |

+

* Running the Newton-Schulz iteration in bfloat16 (whereas Shampoo implementations often depend on inverse-pth-roots run in fp32 or fp64).

|

| 281 |

+

|

| 282 |

+

Our use of a Newton-Schulz iteration for orthogonalization traces to [Bernstein & Newhouse (2024)](https://arxiv.org/abs/2409.20325),

|

| 283 |

+

who suggested it as a way to compute Shampoo [5, 6] preconditioners, and theoretically explored Shampoo without preconditioner accumulation.

|

| 284 |

+

In particular, Jeremy Bernstein @jxbz sent us the draft, which caused us to experiment with various Newton-Schulz iterations as the

|

| 285 |

+

orthogonalization method for this optimizer.

|

| 286 |

+

If we had used SVD instead of a Newton-Schulz iteration, this optimizer would have been too slow to be useful.

|

| 287 |

+

Bernstein & Newhouse also pointed out that Shampoo without preconditioner accumulation is equivalent to steepest descent in the spectral norm,

|

| 288 |

+

and therefore Shampoo can be thought of as a way to smooth out spectral steepest descent.

|

| 289 |

+

The proposed optimizer can be thought of as a second way of smoothing spectral steepest descent, with a different set of memory and runtime tradeoffs

|

| 290 |

+

compared to Shampoo.

|

| 291 |

+

|

| 292 |

+

---

|

| 293 |

+

|

| 294 |

+

## Running on fewer GPUs

|

| 295 |

+

|

| 296 |

+

* To run experiments on fewer GPUs, simply modify `run.sh` to have a different `--nproc_per_node`. This should not change the behavior of the training.

|

| 297 |

+

* If you're running out of memory, you may need to reduce the sequence length for FlexAttention (which does change the training. see [here](https://github.com/KellerJordan/modded-nanogpt/pull/38) for a guide)

|

| 298 |

+

|

| 299 |

+

---

|

| 300 |

+

|

| 301 |

+

## References

|

| 302 |

+

|

| 303 |

+

1. [Guilherme Penedo et al. "The fineweb datasets: Decanting the web for the finest text data at scale." arXiv preprint arXiv:2406.17557 (2024).](https://arxiv.org/abs/2406.17557)

|

| 304 |

+

2. Nicholas J. Higham. Functions of Matrices. Society for Industrial and Applied Mathematics (2008). Equation 5.22.

|

| 305 |

+

3. Günther Schulz. Iterative Berechnung der reziproken Matrix. Z. Angew. Math. Mech., 13:57â59 (1933).

|

| 306 |

+

4. [Jeremy Bernstein and Laker Newhouse. "Old Optimizer, New Norm: An Anthology." arxiv preprint arXiv:2409.20325 (2024).](https://arxiv.org/abs/2409.20325)

|

| 307 |

+

5. [Vineet Gupta, Tomer Koren, and Yoram Singer. "Shampoo: Preconditioned stochastic tensor optimization." International Conference on Machine Learning. PMLR, 2018.](https://arxiv.org/abs/1802.09568)

|

| 308 |

+

6. [Rohan Anil et al. "Scalable second order optimization for deep learning." arXiv preprint arXiv:2002.09018 (2020).](https://arxiv.org/abs/2002.09018)

|

| 309 |

+

7. [Alexander Hägele et al. "Scaling Laws and Compute-Optimal Training Beyond Fixed Training Durations." arXiv preprint arXiv:2405.18392 (2024).](https://arxiv.org/abs/2405.18392)

|

| 310 |

+

8. [Zhanchao Zhou et al. "Value Residual Learning For Alleviating Attention Concentration In Transformers." arXiv preprint arXiv:2410.17897 (2024).](https://arxiv.org/abs/2410.17897)

|

| 311 |

+

9. [Team, Gemma, et al. "Gemma 2: Improving open language models at a practical size." arXiv preprint arXiv:2408.00118 (2024).](https://arxiv.org/abs/2408.00118)

|

| 312 |

+

10. [Alec Radford et al. "Language models are unsupervised multitask learners." OpenAI blog 1.8 (2019).](https://cdn.openai.com/better-language-models/language_models_are_unsupervised_multitask_learners.pdf)

|

| 313 |

+

|

| 314 |

+

## Citation

|

| 315 |

+

|

| 316 |

+

```

|

| 317 |

+

@misc{modded_nanogpt_2024,

|

| 318 |

+

author = {Keller Jordan and Jeremy Bernstein and Brendan Rappazzo and

|

| 319 |

+

@fernbear.bsky.social and Boza Vlado and You Jiacheng and

|

| 320 |

+

Franz Cesista and Braden Koszarsky and @Grad62304977},

|

| 321 |

+

title = {modded-nanogpt: Speedrunning the NanoGPT baseline},

|

| 322 |

+

year = {2024},

|

| 323 |

+

url = {https://github.com/KellerJordan/modded-nanogpt}

|

| 324 |

+

}

|

| 325 |

+

```

|

| 326 |

+

|

| 327 |

+

<img src="img/dofa.jpg" alt="itsover_wereback" style="width:100%;">

|

| 328 |

+

|

data/cached_fineweb100B.py

ADDED

|

@@ -0,0 +1,16 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import os

|

| 2 |

+

import sys

|

| 3 |

+

from huggingface_hub import hf_hub_download

|

| 4 |

+

# Download the GPT-2 tokens of Fineweb100B from huggingface. This

|

| 5 |

+

# saves about an hour of startup time compared to regenerating them.

|

| 6 |

+

def get(fname):

|

| 7 |

+

local_dir = os.path.join(os.path.dirname(__file__), 'fineweb100B')

|

| 8 |

+

if not os.path.exists(os.path.join(local_dir, fname)):

|

| 9 |

+

hf_hub_download(repo_id="kjj0/fineweb100B-gpt2", filename=fname,

|

| 10 |

+

repo_type="dataset", local_dir=local_dir)

|

| 11 |

+

get("fineweb_val_%06d.bin" % 0)

|

| 12 |

+

num_chunks = 1030 # full fineweb100B. Each chunk is 100M tokens

|

| 13 |

+

if len(sys.argv) >= 2: # we can pass an argument to download less

|

| 14 |

+

num_chunks = int(sys.argv[1])

|

| 15 |

+

for i in range(1, num_chunks+1):

|

| 16 |

+

get("fineweb_train_%06d.bin" % i)

|

data/cached_fineweb10B.py

ADDED

|

@@ -0,0 +1,16 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import os

|

| 2 |

+

import sys

|

| 3 |

+

from huggingface_hub import hf_hub_download

|

| 4 |

+

# Download the GPT-2 tokens of Fineweb10B from huggingface. This

|

| 5 |

+

# saves about an hour of startup time compared to regenerating them.

|

| 6 |

+

def get(fname):

|

| 7 |

+

local_dir = os.path.join(os.path.dirname(__file__), 'fineweb10B')

|

| 8 |

+

if not os.path.exists(os.path.join(local_dir, fname)):

|

| 9 |

+

hf_hub_download(repo_id="kjj0/fineweb10B-gpt2", filename=fname,

|

| 10 |

+

repo_type="dataset", local_dir=local_dir)

|

| 11 |

+

get("fineweb_val_%06d.bin" % 0)

|

| 12 |

+

num_chunks = 103 # full fineweb10B. Each chunk is 100M tokens

|

| 13 |

+

if len(sys.argv) >= 2: # we can pass an argument to download less

|

| 14 |

+

num_chunks = int(sys.argv[1])

|

| 15 |

+

for i in range(1, num_chunks+1):

|

| 16 |

+

get("fineweb_train_%06d.bin" % i)

|

data/cached_finewebedu10B.py

ADDED

|

@@ -0,0 +1,16 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import os

|

| 2 |

+

import sys

|

| 3 |

+

from huggingface_hub import hf_hub_download

|

| 4 |

+

# Download the GPT-2 tokens of FinewebEDU10B from huggingface. This

|

| 5 |

+

# saves about an hour of startup time compared to regenerating them.

|

| 6 |

+

def get(fname):

|

| 7 |

+

local_dir = os.path.join(os.path.dirname(__file__), 'finewebedu10B')

|

| 8 |

+

if not os.path.exists(os.path.join(local_dir, fname)):

|

| 9 |

+

hf_hub_download(repo_id="kjj0/finewebedu10B-gpt2", filename=fname,

|

| 10 |

+

repo_type="dataset", local_dir=local_dir)

|

| 11 |

+

get("finewebedu_val_%06d.bin" % 0)

|

| 12 |

+

num_chunks = 99 # full FinewebEDU10B. Each chunk is 100M tokens

|

| 13 |

+

if len(sys.argv) >= 2: # we can pass an argument to download less

|

| 14 |

+

num_chunks = int(sys.argv[1])

|

| 15 |

+

for i in range(1, num_chunks+1):

|

| 16 |

+

get("finewebedu_train_%06d.bin" % i)

|

data/fineweb.py

ADDED

|

@@ -0,0 +1,126 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

"""

|

| 2 |

+

FineWeb dataset (for srs pretraining)

|

| 3 |

+

https://huggingface.co/datasets/HuggingFaceFW/fineweb

|

| 4 |

+

|

| 5 |

+

example doc to highlight the structure of the dataset:

|

| 6 |

+

{

|

| 7 |

+

"text": "Posted by mattsmith on 20th April 2012\nStraight from...",

|

| 8 |

+

"id": "<urn:uuid:d853d453-196e-4488-a411-efc2b26c40d2>",

|

| 9 |

+

"dump": "CC-MAIN-2013-20",

|

| 10 |

+

"url": "http://nleastchatter.com/philliesphandom/tag/freddy-galvis/",

|

| 11 |

+

"date": "2013-05-18T07:24:47Z",

|

| 12 |

+

"file_path": "s3://commoncrawl/long.../path.../file.gz",

|

| 13 |

+

"language": "en",

|

| 14 |

+

"language_score": 0.9185474514961243,

|

| 15 |

+

"token_count": 594

|

| 16 |

+

}

|

| 17 |

+

"""

|

| 18 |

+

import os

|

| 19 |

+

import argparse

|

| 20 |

+

import multiprocessing as mp

|

| 21 |

+

import numpy as np

|

| 22 |

+

import tiktoken

|

| 23 |

+

# from huggingface_hub import snapshot_download

|

| 24 |

+

from datasets import load_dataset

|

| 25 |

+

from tqdm import tqdm

|

| 26 |

+

import argparse

|

| 27 |

+

import numpy as np

|

| 28 |

+

def write_datafile(filename, toks):

|

| 29 |

+

"""

|

| 30 |

+

Saves token data as a .bin file, for reading in C.

|

| 31 |

+

- First comes a header with 256 int32s

|

| 32 |

+

- The tokens follow, each as a uint16

|

| 33 |

+

"""

|

| 34 |

+

assert len(toks) < 2**31, "token count too large" # ~2.1B tokens

|

| 35 |

+

# construct the header

|

| 36 |

+

header = np.zeros(256, dtype=np.int32)

|

| 37 |

+

header[0] = 20240520 # magic

|

| 38 |

+

header[1] = 1 # version

|

| 39 |

+

header[2] = len(toks) # number of tokens after the 256*4 bytes of header (each 2 bytes as uint16)

|

| 40 |

+

# construct the tokens numpy array, if not already

|

| 41 |

+

if not isinstance(toks, np.ndarray) or not toks.dtype == np.uint16:

|

| 42 |

+

# validate that no token exceeds a uint16

|

| 43 |

+

maxtok = 2**16

|

| 44 |

+

assert all(0 <= t < maxtok for t in toks), "token dictionary too large for uint16"

|

| 45 |

+

toks_np = np.array(toks, dtype=np.uint16)

|

| 46 |

+

else:

|

| 47 |

+

toks_np = toks

|

| 48 |

+

# write to file

|

| 49 |

+

print(f"writing {len(toks):,} tokens to {filename}")

|

| 50 |

+

with open(filename, "wb") as f:

|

| 51 |

+

f.write(header.tobytes())

|

| 52 |

+

f.write(toks_np.tobytes())

|

| 53 |

+

# ------------------------------------------

|

| 54 |

+

|

| 55 |

+

parser = argparse.ArgumentParser(description="FineWeb dataset preprocessing")

|

| 56 |

+

parser.add_argument("-v", "--version", type=str, default="10B", help="Which version of fineweb to use 10B|100B")

|

| 57 |

+

parser.add_argument("-s", "--shard_size", type=int, default=10**8, help="Size of each shard in tokens")

|

| 58 |

+

args = parser.parse_args()

|

| 59 |

+

|

| 60 |

+

# FineWeb has a few possible subsamples available

|

| 61 |

+

assert args.version in ["10B", "100B"], "version must be one of 10B, 100B"

|

| 62 |

+

if args.version == "10B":

|

| 63 |

+

local_dir = "fineweb10B"

|

| 64 |

+

remote_name = "sample-10BT"

|

| 65 |

+

elif args.version == "100B":

|

| 66 |

+

local_dir = "fineweb100B"

|

| 67 |

+

remote_name = "sample-100BT"

|

| 68 |

+

|

| 69 |

+

# create the cache the local directory if it doesn't exist yet

|

| 70 |

+

DATA_CACHE_DIR = os.path.join(os.path.dirname(__file__), local_dir)

|

| 71 |

+

os.makedirs(DATA_CACHE_DIR, exist_ok=True)

|

| 72 |

+

|

| 73 |

+

# download the dataset

|

| 74 |

+

fw = load_dataset("HuggingFaceFW/fineweb", name=remote_name, split="train")

|

| 75 |

+

|

| 76 |

+

# init the tokenizer

|

| 77 |

+

enc = tiktoken.get_encoding("gpt2")

|

| 78 |

+

eot = enc._special_tokens['<|endoftext|>'] # end of text token

|

| 79 |

+

def tokenize(doc):

|

| 80 |

+

# tokenizes a single document and returns a numpy array of uint16 tokens

|

| 81 |

+

tokens = [eot] # the special <|endoftext|> token delimits all documents

|

| 82 |

+

tokens.extend(enc.encode_ordinary(doc["text"]))

|

| 83 |

+

tokens_np = np.array(tokens)

|

| 84 |

+

assert (0 <= tokens_np).all() and (tokens_np < 2**16).all(), "token dictionary too large for uint16"

|

| 85 |

+

tokens_np_uint16 = tokens_np.astype(np.uint16)

|

| 86 |

+

return tokens_np_uint16

|

| 87 |

+

|

| 88 |

+

# tokenize all documents and write output shards, each of shard_size tokens (last shard has remainder)

|

| 89 |

+

nprocs = max(1, os.cpu_count() - 2) # don't hog the entire system

|

| 90 |

+

with mp.Pool(nprocs) as pool:

|

| 91 |

+

shard_index = 0

|

| 92 |

+

# preallocate buffer to hold current shard

|

| 93 |

+

all_tokens_np = np.empty((args.shard_size,), dtype=np.uint16)

|

| 94 |

+

token_count = 0

|

| 95 |

+

progress_bar = None

|

| 96 |

+

for tokens in pool.imap(tokenize, fw, chunksize=16):

|

| 97 |

+

|

| 98 |

+

# is there enough space in the current shard for the new tokens?

|

| 99 |

+

if token_count + len(tokens) < args.shard_size:

|

| 100 |

+

# simply append tokens to current shard

|

| 101 |

+

all_tokens_np[token_count:token_count+len(tokens)] = tokens

|

| 102 |

+

token_count += len(tokens)

|

| 103 |

+

# update progress bar

|

| 104 |

+

if progress_bar is None:

|

| 105 |

+

progress_bar = tqdm(total=args.shard_size, unit="tokens", desc=f"Shard {shard_index}")

|

| 106 |

+

progress_bar.update(len(tokens))

|

| 107 |

+

else:

|

| 108 |

+

# write the current shard and start a new one

|

| 109 |

+

split = "val" if shard_index == 0 else "train"

|

| 110 |

+

filename = os.path.join(DATA_CACHE_DIR, f"fineweb_{split}_{shard_index:06d}.bin")

|

| 111 |

+

# split the document into whatever fits in this shard; the remainder goes to next one

|

| 112 |

+

remainder = args.shard_size - token_count

|

| 113 |

+

progress_bar.update(remainder)

|

| 114 |

+

all_tokens_np[token_count:token_count+remainder] = tokens[:remainder]

|

| 115 |

+

write_datafile(filename, all_tokens_np)

|

| 116 |

+

shard_index += 1

|

| 117 |

+

progress_bar = None

|

| 118 |

+

# populate the next shard with the leftovers of the current doc

|

| 119 |

+

all_tokens_np[0:len(tokens)-remainder] = tokens[remainder:]

|

| 120 |

+

token_count = len(tokens)-remainder

|

| 121 |

+

|

| 122 |

+

# write any remaining tokens as the last shard

|

| 123 |

+

if token_count != 0:

|

| 124 |

+

split = "val" if shard_index == 0 else "train"

|

| 125 |

+

filename = os.path.join(DATA_CACHE_DIR, f"fineweb_{split}_{shard_index:06d}.bin")

|

| 126 |

+

write_datafile(filename, all_tokens_np[:token_count])

|

data/requirements.txt

ADDED

|

@@ -0,0 +1,2 @@

|

|

|

|

|

|

|

|

|

|

| 1 |

+

datasets

|

| 2 |

+

tiktoken

|

eval.sh

ADDED

|

@@ -0,0 +1 @@

|

|

|

|

|

|

|

| 1 |

+

uv run hellaswag.py logs DEBUG

|

eval_grace.slurm

ADDED

|

@@ -0,0 +1,46 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

#!/bin/bash

|

| 2 |

+

|

| 3 |

+

##NECESSARY JOB SPECIFICATIONS

|

| 4 |

+

#SBATCH --job-name=modded-nanogpt-eval # Set the job name to "get_activations"

|

| 5 |

+

#SBATCH --time=2:00:00 # Set the wall clock limit to 24 hours

|

| 6 |

+

#SBATCH --ntasks=1 # Total number of tasks (processes) across all nodes

|

| 7 |

+

#SBATCH --ntasks-per-node=1 # Number of tasks per node

|

| 8 |

+

#SBATCH --mem=16G # Request 16GB per node