| Hash | Date | Author | commit_message | IsMerge | Additions | Deletions | Total Changes | git_diff | Repository Name | Owner | Primary Language | Language | Stars | Forks | Description | Repository | type | Comment |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

b52a089f46c10c8184ccf9e276c6cbf3ec1977b2

| null |

boomski

|

Update us.m3u

| false

| 2

| 0

| 2

|

--- us.m3u

@@ -111,6 +111,8 @@ http://170.178.189.66:1935/live/Stream1/.m3u8

http://170.178.189.66:1935/live/Stream1/playlist.m3u8

#EXTINF:-1 tvg-id="" tvg-name="" tvg-logo="https://i.imgur.com/BgwAqlG.png" group-title="",AMGTV

https://api.locastnet.org/api/watch/stream/1094/34/-118/?token=eyJhbGciOiJIUzI1NiJ9.eyJzdWIiOiJkdWRlc2VrLnBldGVyQGdtYWlsLmNvbSIsInJvbGUiOjEsImV4cCI6MTU4OTEyNjI3OH0.UmdOQBwiO5JjceZ-WpWKYXZy2v6aooUi1808NTolbIo&fbclid=IwAR3EW3T_ZSm_BwKxzpTSlethc322oxJ_Z5JYgPOkD4SSB08yvxqmsbM_zTU

+#EXTINF:-1 tvg-id="" tvg-name="" tvg-logo="https://i.imgur.com/DQSHHTS.png" group-title="",America Teve

+http://api.new.livestream.com/accounts/5730046/events/2966225/live.m3u8

#EXTINF:-1 tvg-id="" tvg-name="" tvg-logo="https://i.imgur.com/HidIMbv.png" group-title="",AntennaTV

https://api.locastnet.org/api/watch/stream/1080/34/-118/?token=eyJhbGciOiJIUzI1NiJ9.eyJzdWIiOiJkdWRlc2VrLnBldGVyQGdtYWlsLmNvbSIsInJvbGUiOjEsImV4cCI6MTU4OTEyNjI3OH0.UmdOQBwiO5JjceZ-WpWKYXZy2v6aooUi1808NTolbIo&fbclid=IwAR3EW3T_ZSm_BwKxzpTSlethc322oxJ_Z5JYgPOkD4SSB08yvxqmsbM_zTU

#EXTINF:-1 tvg-id="" tvg-name="" tvg-logo="https://i.imgur.com/hSYez3V.png" group-title="",Atlanta Channel

|

iptv-org_iptv.json

| null | null | null | null | null | null |

iptv-org_iptv.json

|

NEW_FEAT

|

4, Added new channels

|

8e77fa5a0f855975febe3a6945736540d981d9f8

|

2024-03-08 19:36:40

|

Toshiaki Takeuchi

|

Reimplemented DALL-E support

| false

| 8

| 24

| 32

|

--- MatGPT.mlapp

Binary files a/MatGPT.mlapp and b/MatGPT.mlapp differ

--- contents/presets.csv

@@ -3,7 +3,7 @@ AI Assistant,You are a helpful assistant. Answer as concisely as possible. ,Wher

Read a web page,You are a helpful assistant that can read a small web page and analyze its content. The content of the page will be extracted by an external function and stored in the chat history. ,What is this page about? https://www.mathworks.com/help/matlab/text-files.html,gpt-3.5-turbo,1000,0,0,1,1

Read a local file,You are a helpful assistant that can read a small file and analyze its content. The content of the file will be extracted by an external function and stored in the chat history. ,Select a file using the paper clip icon on the left.,gpt-3.5-turbo,1000,0,0,1,1

Understand an image,You are a helpful assistant that can see an image and analyze its content.,What is in this image? https://www.mathworks.com/help/examples/matlab/win64/DisplayGrayscaleRGBIndexedOrBinaryImageExample_04.png,gpt-4-vision-preview,1000,1,0,0,0

-Generate an image,,Create a 3D avatar of a whimsical sushi on the beach. He is decorated with various sushi elements and is playfully interacting with the beach environment.,dall-e-3,Inf,0,0,0,0

+Generate an image,You are a helpful assistant that can generate an image.,Create a 3D avatar of a whimsical sushi on the beach. He is decorated with various sushi elements and is playfully interacting with the beach environment.,gpt-3.5-turbo,1000,1,0,0,0

English to MATLAB Code,You are a helpful assistant that generates MATLAB code. ,"Define two random vectors x and y, fit a linear model to the data, and plot both the data and fitted line.",gpt-3.5-turbo,1000,0,1,1,1

English to Simulink Model,"You are a helpful assistant that creates Simulink models.

You create the models by generating MATLAB code that adds all the necessary blocks and set their parameters.

--- helpers/MsgHelper.m

@@ -16,8 +16,7 @@

struct('name','gpt-4-0613','attributes',struct('contextwindow',8192,'cutoff','Sep 2021'),'legacy',false), ...

struct('name','gpt-4-1106-preview','attributes',struct('contextwindow',128000,'cutoff','Apr 2023'),'legacy',false), ...

struct('name','gpt-4-vision-preview','attributes',struct('contextwindow',128000,'cutoff','Apr 2023'),'legacy',false), ...

- struct('name','gpt-4-turbo-preview','attributes',struct('contextwindow',128000,'cutoff','Apr 2023'),'legacy',false), ...

- struct('name','dall-e-3','attributes',struct('contextwindow','n/a','cutoff','n/a'),'legacy',false), ...

+ struct('name','gpt-4-turbo-preview','attributes',struct('contextwindow',128000,'cutoff','Apr 2023'),'legacy',false) ...

];

contextwindow = models(arrayfun(@(x) string(x.name), models) == modelName).attributes.contextwindow;

cutoff = models(arrayfun(@(x) string(x.name), models) == modelName).attributes.cutoff;

@@ -66,7 +65,7 @@

args = "{""arg1"": 1 }";

funCall = struct("name", functionName, "arguments", args);

toolCall = struct("id", id, "type", "function", "function", funCall);

- toolCallPrompt = struct("role", "assistant", "content", [], "tool_calls", toolCall);

+ toolCallPrompt = struct("role", "assistant", "content", "", "tool_calls", toolCall);

messages = addResponseMessage(messages, toolCallPrompt);

% add content as the function result

messages = addToolMessage(messages,id,functionName,content);

@@ -143,5 +142,21 @@

imgTag = "<img width=512 src=" + urlEncoded + ">";

end

+ % function call to generate image

+ function [image, url, response] = generateImage(prompt)

+ mdl = openAIImages(ModelName="dall-e-3");

+ [images, response] = generate(mdl,string(prompt));

+ image = images{1};

+ url = response.Body.Data.data.url;

+ end

+

+ % function call to understand image

+ function [txt,message,response] = understandImage(prompt,image,max_tokens)

+ chat = openAIChat("You are an AI assistant","ModelName","gpt-4-vision-preview");

+ messages = openAIMessages;

+ messages = addUserMessageWithImages(messages,prompt,image);

+ [txt,message,response] = generate(chat,messages,MaxNumTokens=max_tokens);

+ end

+

end

end

\ No newline at end of file

--- helpers/llms-with-matlab/+llms/+stream/responseStreamer.m

@@ -45,8 +45,9 @@

catch ME

errID = 'llms:stream:responseStreamer:InvalidInput';

msg = "Input does not have the expected json format. " + str{i};

- ME = MException(errID,msg);

- throw(ME)

+ causeException = MException(errID,msg);

+ ME = addCause(ME,causeException);

+ rethrow(ME)

end

if ischar(json.choices.finish_reason) && ismember(json.choices.finish_reason,["stop","tool_calls"])

stop = true;

--- helpers/llms-with-matlab/openAIChat.m

@@ -311,8 +311,8 @@ function mustBeValidFunctionCall(this, functionCall)

if ~isempty(this.Tools)

toolChoice = "auto";

end

- elseif ~ismember(toolChoice,["auto","none"])

- % if toolChoice is not empty, then it must be "auto", "none" or in the format

+ elseif ToolChoice ~= "auto"

+ % if toolChoice is not empty, then it must be in the format

% {"type": "function", "function": {"name": "my_function"}}

toolChoice = struct("type","function","function",struct("name",toolChoice));

end

|

matgpt

|

toshiakit

|

MATLAB

|

MATLAB

| 218

| 33

|

MATLAB app to access ChatGPT API from OpenAI

|

toshiakit_matgpt

|

NEW_FEAT

|

Obvious

|

a0cc73263a1a8b3c6a01d6e8b9781da315d09b92

|

2024-12-29 20:51:56

|

Eser DENIZ

|

feat: default notification title (#451)

| false

| 3

| 1

| 4

|

--- src/Notification.php

@@ -12,9 +12,7 @@ class Notification

protected string $event = '';

- final public function __construct(protected Client $client) {

- $this->title = config('app.name');

- }

+ final public function __construct(protected Client $client) {}

public static function new()

{

|

laravel

|

nativephp

|

PHP

|

PHP

| 3,498

| 182

|

Laravel wrapper for the NativePHP framework

|

nativephp_laravel

|

CODE_IMPROVEMENT

|

Code change: added final modifier

|

3f523871c666bd59dbd992121f614d97480eceef

|

2025-03-20 05:52:06

|

netdatabot

|

[ci skip] Update changelog and version for nightly build: v2.3.0-3-nightly.

| false

| 10

| 3

| 13

|

--- CHANGELOG.md

@@ -1,14 +1,5 @@

# Changelog

-## [**Next release**](https://github.com/netdata/netdata/tree/HEAD)

-

-[Full Changelog](https://github.com/netdata/netdata/compare/v2.3.0...HEAD)

-

-**Merged pull requests:**

-

-- fix reliability calculation [\#19909](https://github.com/netdata/netdata/pull/19909) ([ktsaou](https://github.com/ktsaou))

-- new exit cause: shutdown timeout [\#19903](https://github.com/netdata/netdata/pull/19903) ([ktsaou](https://github.com/ktsaou))

-

## [v2.3.0](https://github.com/netdata/netdata/tree/v2.3.0) (2025-03-19)

[Full Changelog](https://github.com/netdata/netdata/compare/v2.2.6...v2.3.0)

@@ -486,6 +477,8 @@

- Revert "prevent memory corruption in dbengine" [\#19364](https://github.com/netdata/netdata/pull/19364) ([ktsaou](https://github.com/ktsaou))

- prevent memory corruption in dbengine [\#19363](https://github.com/netdata/netdata/pull/19363) ([ktsaou](https://github.com/ktsaou))

- metrics-cardinality function [\#19362](https://github.com/netdata/netdata/pull/19362) ([ktsaou](https://github.com/ktsaou))

+- avoid checking replication status all the time [\#19361](https://github.com/netdata/netdata/pull/19361) ([ktsaou](https://github.com/ktsaou))

+- respect flood protection configuration for daemon [\#19360](https://github.com/netdata/netdata/pull/19360) ([ktsaou](https://github.com/ktsaou))

## [v2.1.1](https://github.com/netdata/netdata/tree/v2.1.1) (2025-01-07)

--- packaging/version

@@ -1 +1 @@

-v2.3.0-3-nightly

+v2.3.0

|

netdata

|

netdata

|

C

|

C

| 73,681

| 6,023

|

X-Ray Vision for your infrastructure!

|

netdata_netdata

|

CONFIG_CHANGE

|

Version/release update

|

a57939a077ebaa7bbb1f14b3d8ff4bc6b44b289d

|

2023-06-06 19:12:15

|

dependabot[bot]

|

Build(deps-dev): Bump rollup from 3.23.0 to 3.23.1 (#38714) Bumps [rollup](https://github.com/rollup/rollup) from 3.23.0 to 3.23.1.

- [Release notes](https://github.com/rollup/rollup/releases)

- [Changelog](https://github.com/rollup/rollup/blob/master/CHANGELOG.md)

- [Commits](https://github.com/rollup/rollup/compare/v3.23.0...v3.23.1)

---

updated-dependencies:

- dependency-name: rollup

dependency-type: direct:development

update-type: version-update:semver-patch

...

Signed-off-by: dependabot[bot] <[email protected]>

Co-authored-by: dependabot[bot] <49699333+dependabot[bot]@users.noreply.github.com>

| false

| 8

| 8

| 16

|

--- package-lock.json

@@ -58,7 +58,7 @@

"npm-run-all2": "^6.0.5",

"postcss": "^8.4.24",

"postcss-cli": "^10.1.0",

- "rollup": "^3.23.1",

+ "rollup": "^3.23.0",

"rollup-plugin-istanbul": "^4.0.0",

"rtlcss": "^4.1.0",

"sass": "^1.62.1",

@@ -9090,9 +9090,9 @@

}

},

"node_modules/rollup": {

- "version": "3.23.1",

- "resolved": "https://registry.npmjs.org/rollup/-/rollup-3.23.1.tgz",

- "integrity": "sha512-ybRdFVHOoljGEFILHLd2g/qateqUdjE6YS41WXq4p3C/WwD3xtWxV4FYWETA1u9TeXQc5K8L8zHE5d/scOvrOQ==",

+ "version": "3.23.0",

+ "resolved": "https://registry.npmjs.org/rollup/-/rollup-3.23.0.tgz",

+ "integrity": "sha512-h31UlwEi7FHihLe1zbk+3Q7z1k/84rb9BSwmBSr/XjOCEaBJ2YyedQDuM0t/kfOS0IxM+vk1/zI9XxYj9V+NJQ==",

"dev": true,

"bin": {

"rollup": "dist/bin/rollup"

@@ -17370,9 +17370,9 @@

}

},

"rollup": {

- "version": "3.23.1",

- "resolved": "https://registry.npmjs.org/rollup/-/rollup-3.23.1.tgz",

- "integrity": "sha512-ybRdFVHOoljGEFILHLd2g/qateqUdjE6YS41WXq4p3C/WwD3xtWxV4FYWETA1u9TeXQc5K8L8zHE5d/scOvrOQ==",

+ "version": "3.23.0",

+ "resolved": "https://registry.npmjs.org/rollup/-/rollup-3.23.0.tgz",

+ "integrity": "sha512-h31UlwEi7FHihLe1zbk+3Q7z1k/84rb9BSwmBSr/XjOCEaBJ2YyedQDuM0t/kfOS0IxM+vk1/zI9XxYj9V+NJQ==",

"dev": true,

"requires": {

"fsevents": "~2.3.2"

--- package.json

@@ -142,7 +142,7 @@

"npm-run-all2": "^6.0.5",

"postcss": "^8.4.24",

"postcss-cli": "^10.1.0",

- "rollup": "^3.23.1",

+ "rollup": "^3.23.0",

"rollup-plugin-istanbul": "^4.0.0",

"rtlcss": "^4.1.0",

"sass": "^1.62.1",

|

bootstrap

|

twbs

|

JavaScript

|

JavaScript

| 171,693

| 79,045

|

The most popular HTML, CSS, and JavaScript framework for developing responsive, mobile first projects on the web.

|

twbs_bootstrap

|

CONFIG_CHANGE

|

version changes are done

|

4e230e81e753453d7be862f11ea0fff8290e2209

|

2023-08-31 07:20:31

|

Goh Chun Lin

|

Sign Manifesto

| false

| 5

| 0

| 5

|

--- index.html

@@ -4382,11 +4382,6 @@

<td>Individual</td>

<td>DevOps; SRE; Crypto Enthusiast; open-source community efforts</td>

</tr>

- <tr>

- <td><a href="https://github.com/goh-chunlin">Goh Chun Lin</td>

- <td>Individual</td>

- <td>Development; Documentation; Outreach; open-source community efforts</td>

- </tr>

</tbody>

</table>

</div>

|

manifesto

|

opentofu

|

HTML

|

HTML

| 36,134

| 1,083

|

The OpenTF Manifesto expresses concern over HashiCorp's switch of the Terraform license from open-source to the Business Source License (BSL) and calls for the tool's return to a truly open-source license.

|

opentofu_manifesto

|

NEW_FEAT

|

a new table row with links added in the code probably adding a new feature in the UI

|

5976310916458868b5cbd9d8c7cc7de5af418230

|

2024-12-02 06:06:16

|

Caleb White

|

worktree: refactor infer_backlink return The previous round[1] was merged a bit early before reviewer feedback could be applied. This correctly indents a code block and updates the `infer_backlink` function to return `-1` on failure and strbuf.len on success. [1]: https://lore.kernel.org/git/[email protected] Signed-off-by: Caleb White <[email protected]> Signed-off-by: Junio C Hamano <[email protected]>

| false

| 8

| 7

| 15

|

--- worktree.c

@@ -111,9 +111,9 @@ struct worktree *get_linked_worktree(const char *id,

strbuf_strip_suffix(&worktree_path, "/.git");

if (!is_absolute_path(worktree_path.buf)) {

- strbuf_strip_suffix(&path, "gitdir");

- strbuf_addbuf(&path, &worktree_path);

- strbuf_realpath_forgiving(&worktree_path, path.buf, 0);

+ strbuf_strip_suffix(&path, "gitdir");

+ strbuf_addbuf(&path, &worktree_path);

+ strbuf_realpath_forgiving(&worktree_path, path.buf, 0);

}

CALLOC_ARRAY(worktree, 1);

@@ -725,10 +725,8 @@ static int is_main_worktree_path(const char *path)

* won't know which <repo>/worktrees/<id>/gitdir to repair. However, we may

* be able to infer the gitdir by manually reading /path/to/worktree/.git,

* extracting the <id>, and checking if <repo>/worktrees/<id> exists.

- *

- * Returns -1 on failure and strbuf.len on success.

*/

-static ssize_t infer_backlink(const char *gitfile, struct strbuf *inferred)

+static int infer_backlink(const char *gitfile, struct strbuf *inferred)

{

struct strbuf actual = STRBUF_INIT;

const char *id;

@@ -749,11 +747,12 @@ static ssize_t infer_backlink(const char *gitfile, struct strbuf *inferred)

goto error;

strbuf_release(&actual);

- return inferred->len;

+ return 1;

+

error:

strbuf_release(&actual);

strbuf_reset(inferred); /* clear invalid path */

- return -1;

+ return 0;

}

/*

|

git

| null |

C

|

C

| null | null |

Version control

|

_git

|

CODE_IMPROVEMENT

|

obvious

|

5e0c28438b8aeba78cbf484c54a6140ccde1d4c9

|

2024-11-23 12:11:19

|

2dust

|

Refactor Localization for AmazTool

| false

| 504

| 98

| 602

|

--- v2rayN/AmazTool/AmazTool.csproj

@@ -10,18 +10,8 @@

</PropertyGroup>

<ItemGroup>

- <Compile Update="Resx\Resource.Designer.cs">

- <DesignTime>True</DesignTime>

- <AutoGen>True</AutoGen>

- <DependentUpon>Resource.resx</DependentUpon>

- </Compile>

- </ItemGroup>

-

- <ItemGroup>

- <EmbeddedResource Update="Resx\Resource.resx">

- <Generator>ResXFileCodeGenerator</Generator>

- <LastGenOutput>Resource.Designer.cs</LastGenOutput>

- </EmbeddedResource>

+ <EmbeddedResource Include="Assets\en-US.json" />

+ <EmbeddedResource Include="Assets\zh-CN.json" />

</ItemGroup>

</Project>

\ No newline at end of file

--- v2rayN/AmazTool/Assets/en-US.json

@@ -0,0 +1,14 @@

+{

+ "Restart_v2rayN": "Start v2rayN, please wait...",

+ "Guidelines": "Please run it from the main application.",

+ "Upgrade_File_Not_Found": "Upgrade failed, file not found.",

+ "In_Progress": "In progress, please wait...",

+ "Try_Terminate_Process": "Try to terminate the v2rayN process.",

+ "Failed_Terminate_Process": "Failed to terminate the v2rayN.Close it manually,or the upgrade may fail.",

+ "Start_Unzipping": "Start extracting the update package.",

+ "Success_Unzipping": "Successfully extracted the update package!",

+ "Failed_Unzipping": "Failed to extract the update package!",

+ "Failed_Upgrade": "Upgrade failed!",

+ "Success_Upgrade": "Upgrade success!",

+ "Information": "Information"

+}

\ No newline at end of file

--- v2rayN/AmazTool/Assets/zh-CN.json

@@ -0,0 +1,14 @@

+{

+ "Restart_v2rayN": "正在重启,请等待...",

+ "Guidelines": "请从主应用运行!",

+ "Upgrade_File_Not_Found": "升级失败,文件不存在!",

+ "In_Progress": "正在进行中,请等待...",

+ "Try_Terminate_Process": "尝试结束 v2rayN 进程...",

+ "Failed_Terminate_Process": "请手动关闭正在运行的v2rayN,否则可能升级失败。",

+ "Start_Unzipping": "开始解压缩更新包...",

+ "Success_Unzipping": "解压缩更新包成功!",

+ "Failed_Unzipping": "解压缩更新包失败!",

+ "Failed_Upgrade": "升级失败!",

+ "Success_Upgrade": "升级成功!",

+ "Information": "提示"

+}

\ No newline at end of file

--- v2rayN/AmazTool/LocalizationHelper.cs

@@ -0,0 +1,59 @@

+using System.Globalization;

+using System.Reflection;

+using System.Text.Json;

+

+namespace AmazTool

+{

+ public class LocalizationHelper

+ {

+ private static Dictionary<string, string> _languageResources = [];

+

+ static LocalizationHelper()

+ {

+ // 加载语言资源

+ LoadLanguageResources();

+ }

+

+ private static void LoadLanguageResources()

+ {

+ try

+ {

+ var currentLanguage = CultureInfo.CurrentCulture.Name;

+ if (currentLanguage != "zh-CN" && currentLanguage != "en-US")

+ {

+ currentLanguage = "en-US";

+ }

+

+ var resourceName = $"AmazTool.Assets.{currentLanguage}.json";

+ var assembly = Assembly.GetExecutingAssembly();

+

+ using var stream = assembly.GetManifestResourceStream(resourceName);

+ if (stream == null) return;

+

+ using StreamReader reader = new(stream);

+ var json = reader.ReadToEnd();

+ if (!string.IsNullOrEmpty(json))

+ {

+ _languageResources = JsonSerializer.Deserialize<Dictionary<string, string>>(json) ?? new Dictionary<string, string>();

+ }

+ }

+ catch (IOException ex)

+ {

+ Console.WriteLine($"Failed to read language resource file: {ex.Message}");

+ }

+ catch (JsonException ex)

+ {

+ Console.WriteLine($"Failed to parse JSON data: {ex.Message}");

+ }

+ catch (Exception ex)

+ {

+ Console.WriteLine($"Unexpected error occurred: {ex.Message}");

+ }

+ }

+

+ public static string GetLocalizedValue(string key)

+ {

+ return _languageResources.TryGetValue(key, out var translation) ? translation : key;

+ }

+ }

+}

\ No newline at end of file

--- v2rayN/AmazTool/Program.cs

@@ -10,7 +10,7 @@

{

if (args.Length == 0)

{

- Console.WriteLine(Resx.Resource.Guidelines);

+ Console.WriteLine(LocalizationHelper.GetLocalizedValue("Guidelines"));

Thread.Sleep(5000);

return;

}

--- v2rayN/AmazTool/Resx/Resource.Designer.cs

@@ -1,171 +0,0 @@

-//------------------------------------------------------------------------------

-// <auto-generated>

-// 此代码由工具生成。

-// 运行时版本:4.0.30319.42000

-//

-// 对此文件的更改可能会导致不正确的行为,并且如果

-// 重新生成代码,这些更改将会丢失。

-// </auto-generated>

-//------------------------------------------------------------------------------

-

-namespace AmazTool.Resx {

- using System;

-

-

- /// <summary>

- /// 一个强类型的资源类,用于查找本地化的字符串等。

- /// </summary>

- // 此类是由 StronglyTypedResourceBuilder

- // 类通过类似于 ResGen 或 Visual Studio 的工具自动生成的。

- // 若要添加或移除成员,请编辑 .ResX 文件,然后重新运行 ResGen

- // (以 /str 作为命令选项),或重新生成 VS 项目。

- [global::System.CodeDom.Compiler.GeneratedCodeAttribute("System.Resources.Tools.StronglyTypedResourceBuilder", "17.0.0.0")]

- [global::System.Diagnostics.DebuggerNonUserCodeAttribute()]

- [global::System.Runtime.CompilerServices.CompilerGeneratedAttribute()]

- internal class Resource {

-

- private static global::System.Resources.ResourceManager resourceMan;

-

- private static global::System.Globalization.CultureInfo resourceCulture;

-

- [global::System.Diagnostics.CodeAnalysis.SuppressMessageAttribute("Microsoft.Performance", "CA1811:AvoidUncalledPrivateCode")]

- internal Resource() {

- }

-

- /// <summary>

- /// 返回此类使用的缓存的 ResourceManager 实例。

- /// </summary>

- [global::System.ComponentModel.EditorBrowsableAttribute(global::System.ComponentModel.EditorBrowsableState.Advanced)]

- internal static global::System.Resources.ResourceManager ResourceManager {

- get {

- if (object.ReferenceEquals(resourceMan, null)) {

- global::System.Resources.ResourceManager temp = new global::System.Resources.ResourceManager("AmazTool.Resx.Resource", typeof(Resource).Assembly);

- resourceMan = temp;

- }

- return resourceMan;

- }

- }

-

- /// <summary>

- /// 重写当前线程的 CurrentUICulture 属性,对

- /// 使用此强类型资源类的所有资源查找执行重写。

- /// </summary>

- [global::System.ComponentModel.EditorBrowsableAttribute(global::System.ComponentModel.EditorBrowsableState.Advanced)]

- internal static global::System.Globalization.CultureInfo Culture {

- get {

- return resourceCulture;

- }

- set {

- resourceCulture = value;

- }

- }

-

- /// <summary>

- /// 查找类似 Failed to terminate the v2rayN.Close it manually,or the upgrade may fail. 的本地化字符串。

- /// </summary>

- internal static string FailedTerminateProcess {

- get {

- return ResourceManager.GetString("FailedTerminateProcess", resourceCulture);

- }

- }

-

- /// <summary>

- /// 查找类似 Failed to extract the update package. 的本地化字符串。

- /// </summary>

- internal static string FailedUnzipping {

- get {

- return ResourceManager.GetString("FailedUnzipping", resourceCulture);

- }

- }

-

- /// <summary>

- /// 查找类似 Upgrade failed. 的本地化字符串。

- /// </summary>

- internal static string FailedUpgrade {

- get {

- return ResourceManager.GetString("FailedUpgrade", resourceCulture);

- }

- }

-

- /// <summary>

- /// 查找类似 Please run it from the main application. 的本地化字符串。

- /// </summary>

- internal static string Guidelines {

- get {

- return ResourceManager.GetString("Guidelines", resourceCulture);

- }

- }

-

- /// <summary>

- /// 查找类似 Information 的本地化字符串。

- /// </summary>

- internal static string Information {

- get {

- return ResourceManager.GetString("Information", resourceCulture);

- }

- }

-

- /// <summary>

- /// 查找类似 In progress, please wait... 的本地化字符串。

- /// </summary>

- internal static string InProgress {

- get {

- return ResourceManager.GetString("InProgress", resourceCulture);

- }

- }

-

- /// <summary>

- /// 查找类似 Start v2rayN, please wait... 的本地化字符串。

- /// </summary>

- internal static string Restartv2rayN {

- get {

- return ResourceManager.GetString("Restartv2rayN", resourceCulture);

- }

- }

-

- /// <summary>

- /// 查找类似 Start extracting the update package... 的本地化字符串。

- /// </summary>

- internal static string StartUnzipping {

- get {

- return ResourceManager.GetString("StartUnzipping", resourceCulture);

- }

- }

-

- /// <summary>

- /// 查找类似 Successfully extracted the update package. 的本地化字符串。

- /// </summary>

- internal static string SuccessUnzipping {

- get {

- return ResourceManager.GetString("SuccessUnzipping", resourceCulture);

- }

- }

-

- /// <summary>

- /// 查找类似 Upgrade success. 的本地化字符串。

- /// </summary>

- internal static string SuccessUpgrade {

- get {

- return ResourceManager.GetString("SuccessUpgrade", resourceCulture);

- }

- }

-

- /// <summary>

- /// 查找类似 Try to terminate the v2rayN process... 的本地化字符串。

- /// </summary>

- internal static string TryTerminateProcess {

- get {

- return ResourceManager.GetString("TryTerminateProcess", resourceCulture);

- }

- }

-

- /// <summary>

- /// 查找类似 Upgrade failed, file not found. 的本地化字符串。

- /// </summary>

- internal static string UpgradeFileNotFound {

- get {

- return ResourceManager.GetString("UpgradeFileNotFound", resourceCulture);

- }

- }

- }

-}

--- v2rayN/AmazTool/Resx/Resource.resx

@@ -1,156 +0,0 @@

-<?xml version="1.0" encoding="utf-8"?>

-<root>

- <!--

- Microsoft ResX Schema

-

- Version 2.0

-

- The primary goals of this format is to allow a simple XML format

- that is mostly human readable. The generation and parsing of the

- various data types are done through the TypeConverter classes

- associated with the data types.

-

- Example:

-

- ... ado.net/XML headers & schema ...

- <resheader name="resmimetype">text/microsoft-resx</resheader>

- <resheader name="version">2.0</resheader>

- <resheader name="reader">System.Resources.ResXResourceReader, System.Windows.Forms, ...</resheader>

- <resheader name="writer">System.Resources.ResXResourceWriter, System.Windows.Forms, ...</resheader>

- <data name="Name1"><value>this is my long string</value><comment>this is a comment</comment></data>

- <data name="Color1" type="System.Drawing.Color, System.Drawing">Blue</data>

- <data name="Bitmap1" mimetype="application/x-microsoft.net.object.binary.base64">

- <value>[base64 mime encoded serialized .NET Framework object]</value>

- </data>

- <data name="Icon1" type="System.Drawing.Icon, System.Drawing" mimetype="application/x-microsoft.net.object.bytearray.base64">

- <value>[base64 mime encoded string representing a byte array form of the .NET Framework object]</value>

- <comment>This is a comment</comment>

- </data>

-

- There are any number of "resheader" rows that contain simple

- name/value pairs.

-

- Each data row contains a name, and value. The row also contains a

- type or mimetype. Type corresponds to a .NET class that support

- text/value conversion through the TypeConverter architecture.

- Classes that don't support this are serialized and stored with the

- mimetype set.

-

- The mimetype is used for serialized objects, and tells the

- ResXResourceReader how to depersist the object. This is currently not

- extensible. For a given mimetype the value must be set accordingly:

-

- Note - application/x-microsoft.net.object.binary.base64 is the format

- that the ResXResourceWriter will generate, however the reader can

- read any of the formats listed below.

-

- mimetype: application/x-microsoft.net.object.binary.base64

- value : The object must be serialized with

- : System.Runtime.Serialization.Formatters.Binary.BinaryFormatter

- : and then encoded with base64 encoding.

-

- mimetype: application/x-microsoft.net.object.soap.base64

- value : The object must be serialized with

- : System.Runtime.Serialization.Formatters.Soap.SoapFormatter

- : and then encoded with base64 encoding.

-

- mimetype: application/x-microsoft.net.object.bytearray.base64

- value : The object must be serialized into a byte array

- : using a System.ComponentModel.TypeConverter

- : and then encoded with base64 encoding.

- -->

- <xsd:schema id="root" xmlns="" xmlns:xsd="http://www.w3.org/2001/XMLSchema" xmlns:msdata="urn:schemas-microsoft-com:xml-msdata">

- <xsd:import namespace="http://www.w3.org/XML/1998/namespace" />

- <xsd:element name="root" msdata:IsDataSet="true">

- <xsd:complexType>

- <xsd:choice maxOccurs="unbounded">

- <xsd:element name="metadata">

- <xsd:complexType>

- <xsd:sequence>

- <xsd:element name="value" type="xsd:string" minOccurs="0" />

- </xsd:sequence>

- <xsd:attribute name="name" use="required" type="xsd:string" />

- <xsd:attribute name="type" type="xsd:string" />

- <xsd:attribute name="mimetype" type="xsd:string" />

- <xsd:attribute ref="xml:space" />

- </xsd:complexType>

- </xsd:element>

- <xsd:element name="assembly">

- <xsd:complexType>

- <xsd:attribute name="alias" type="xsd:string" />

- <xsd:attribute name="name" type="xsd:string" />

- </xsd:complexType>

- </xsd:element>

- <xsd:element name="data">

- <xsd:complexType>

- <xsd:sequence>

- <xsd:element name="value" type="xsd:string" minOccurs="0" msdata:Ordinal="1" />

- <xsd:element name="comment" type="xsd:string" minOccurs="0" msdata:Ordinal="2" />

- </xsd:sequence>

- <xsd:attribute name="name" type="xsd:string" use="required" msdata:Ordinal="1" />

- <xsd:attribute name="type" type="xsd:string" msdata:Ordinal="3" />

- <xsd:attribute name="mimetype" type="xsd:string" msdata:Ordinal="4" />

- <xsd:attribute ref="xml:space" />

- </xsd:complexType>

- </xsd:element>

- <xsd:element name="resheader">

- <xsd:complexType>

- <xsd:sequence>

- <xsd:element name="value" type="xsd:string" minOccurs="0" msdata:Ordinal="1" />

- </xsd:sequence>

- <xsd:attribute name="name" type="xsd:string" use="required" />

- </xsd:complexType>

- </xsd:element>

- </xsd:choice>

- </xsd:complexType>

- </xsd:element>

- </xsd:schema>

- <resheader name="resmimetype">

- <value>text/microsoft-resx</value>

- </resheader>

- <resheader name="version">

- <value>2.0</value>

- </resheader>

- <resheader name="reader">

- <value>System.Resources.ResXResourceReader, System.Windows.Forms, Version=4.0.0.0, Culture=neutral, PublicKeyToken=b77a5c561934e089</value>

- </resheader>

- <resheader name="writer">

- <value>System.Resources.ResXResourceWriter, System.Windows.Forms, Version=4.0.0.0, Culture=neutral, PublicKeyToken=b77a5c561934e089</value>

- </resheader>

- <data name="Restartv2rayN" xml:space="preserve">

- <value>Start v2rayN, please wait...</value>

- </data>

- <data name="Guidelines" xml:space="preserve">

- <value>Please run it from the main application.</value>

- </data>

- <data name="UpgradeFileNotFound" xml:space="preserve">

- <value>Upgrade failed, file not found.</value>

- </data>

- <data name="InProgress" xml:space="preserve">

- <value>In progress, please wait...</value>

- </data>

- <data name="TryTerminateProcess" xml:space="preserve">

- <value>Try to terminate the v2rayN process...</value>

- </data>

- <data name="FailedTerminateProcess" xml:space="preserve">

- <value>Failed to terminate the v2rayN.Close it manually,or the upgrade may fail.</value>

- </data>

- <data name="StartUnzipping" xml:space="preserve">

- <value>Start extracting the update package...</value>

- </data>

- <data name="SuccessUnzipping" xml:space="preserve">

- <value>Successfully extracted the update package.</value>

- </data>

- <data name="FailedUnzipping" xml:space="preserve">

- <value>Failed to extract the update package.</value>

- </data>

- <data name="FailedUpgrade" xml:space="preserve">

- <value>Upgrade failed.</value>

- </data>

- <data name="SuccessUpgrade" xml:space="preserve">

- <value>Upgrade success.</value>

- </data>

- <data name="Information" xml:space="preserve">

- <value>Information</value>

- </data>

-</root>

\ No newline at end of file

--- v2rayN/AmazTool/Resx/Resource.zh-Hans.resx

@@ -1,156 +0,0 @@

-<?xml version="1.0" encoding="utf-8"?>

-<root>

- <!--

- Microsoft ResX Schema

-

- Version 2.0

-

- The primary goals of this format is to allow a simple XML format

- that is mostly human readable. The generation and parsing of the

- various data types are done through the TypeConverter classes

- associated with the data types.

-

- Example:

-

- ... ado.net/XML headers & schema ...

- <resheader name="resmimetype">text/microsoft-resx</resheader>

- <resheader name="version">2.0</resheader>

- <resheader name="reader">System.Resources.ResXResourceReader, System.Windows.Forms, ...</resheader>

- <resheader name="writer">System.Resources.ResXResourceWriter, System.Windows.Forms, ...</resheader>

- <data name="Name1"><value>this is my long string</value><comment>this is a comment</comment></data>

- <data name="Color1" type="System.Drawing.Color, System.Drawing">Blue</data>

- <data name="Bitmap1" mimetype="application/x-microsoft.net.object.binary.base64">

- <value>[base64 mime encoded serialized .NET Framework object]</value>

- </data>

- <data name="Icon1" type="System.Drawing.Icon, System.Drawing" mimetype="application/x-microsoft.net.object.bytearray.base64">

- <value>[base64 mime encoded string representing a byte array form of the .NET Framework object]</value>

- <comment>This is a comment</comment>

- </data>

-

- There are any number of "resheader" rows that contain simple

- name/value pairs.

-

- Each data row contains a name, and value. The row also contains a

- type or mimetype. Type corresponds to a .NET class that support

- text/value conversion through the TypeConverter architecture.

- Classes that don't support this are serialized and stored with the

- mimetype set.

-

- The mimetype is used for serialized objects, and tells the

- ResXResourceReader how to depersist the object. This is currently not

- extensible. For a given mimetype the value must be set accordingly:

-

- Note - application/x-microsoft.net.object.binary.base64 is the format

- that the ResXResourceWriter will generate, however the reader can

- read any of the formats listed below.

-

- mimetype: application/x-microsoft.net.object.binary.base64

- value : The object must be serialized with

- : System.Runtime.Serialization.Formatters.Binary.BinaryFormatter

- : and then encoded with base64 encoding.

-

- mimetype: application/x-microsoft.net.object.soap.base64

- value : The object must be serialized with

- : System.Runtime.Serialization.Formatters.Soap.SoapFormatter

- : and then encoded with base64 encoding.

-

- mimetype: application/x-microsoft.net.object.bytearray.base64

- value : The object must be serialized into a byte array

- : using a System.ComponentModel.TypeConverter

- : and then encoded with base64 encoding.

- -->

- <xsd:schema id="root" xmlns="" xmlns:xsd="http://www.w3.org/2001/XMLSchema" xmlns:msdata="urn:schemas-microsoft-com:xml-msdata">

- <xsd:import namespace="http://www.w3.org/XML/1998/namespace" />

- <xsd:element name="root" msdata:IsDataSet="true">

- <xsd:complexType>

- <xsd:choice maxOccurs="unbounded">

- <xsd:element name="metadata">

- <xsd:complexType>

- <xsd:sequence>

- <xsd:element name="value" type="xsd:string" minOccurs="0" />

- </xsd:sequence>

- <xsd:attribute name="name" use="required" type="xsd:string" />

- <xsd:attribute name="type" type="xsd:string" />

- <xsd:attribute name="mimetype" type="xsd:string" />

- <xsd:attribute ref="xml:space" />

- </xsd:complexType>

- </xsd:element>

- <xsd:element name="assembly">

- <xsd:complexType>

- <xsd:attribute name="alias" type="xsd:string" />

- <xsd:attribute name="name" type="xsd:string" />

- </xsd:complexType>

- </xsd:element>

- <xsd:element name="data">

- <xsd:complexType>

- <xsd:sequence>

- <xsd:element name="value" type="xsd:string" minOccurs="0" msdata:Ordinal="1" />

- <xsd:element name="comment" type="xsd:string" minOccurs="0" msdata:Ordinal="2" />

- </xsd:sequence>

- <xsd:attribute name="name" type="xsd:string" use="required" msdata:Ordinal="1" />

- <xsd:attribute name="type" type="xsd:string" msdata:Ordinal="3" />

- <xsd:attribute name="mimetype" type="xsd:string" msdata:Ordinal="4" />

- <xsd:attribute ref="xml:space" />

- </xsd:complexType>

- </xsd:element>

- <xsd:element name="resheader">

- <xsd:complexType>

- <xsd:sequence>

- <xsd:element name="value" type="xsd:string" minOccurs="0" msdata:Ordinal="1" />

- </xsd:sequence>

- <xsd:attribute name="name" type="xsd:string" use="required" />

- </xsd:complexType>

- </xsd:element>

- </xsd:choice>

- </xsd:complexType>

- </xsd:element>

- </xsd:schema>

- <resheader name="resmimetype">

- <value>text/microsoft-resx</value>

- </resheader>

- <resheader name="version">

- <value>2.0</value>

- </resheader>

- <resheader name="reader">

- <value>System.Resources.ResXResourceReader, System.Windows.Forms, Version=4.0.0.0, Culture=neutral, PublicKeyToken=b77a5c561934e089</value>

- </resheader>

- <resheader name="writer">

- <value>System.Resources.ResXResourceWriter, System.Windows.Forms, Version=4.0.0.0, Culture=neutral, PublicKeyToken=b77a5c561934e089</value>

- </resheader>

- <data name="Restartv2rayN" xml:space="preserve">

- <value>正在重启,请等待...</value>

- </data>

- <data name="Guidelines" xml:space="preserve">

- <value>请从主应用运行。</value>

- </data>

- <data name="UpgradeFileNotFound" xml:space="preserve">

- <value>升级失败,文件不存在。</value>

- </data>

- <data name="InProgress" xml:space="preserve">

- <value>正在进行中,请等待...</value>

- </data>

- <data name="TryTerminateProcess" xml:space="preserve">

- <value>尝试结束 v2rayN 进程...</value>

- </data>

- <data name="FailedTerminateProcess" xml:space="preserve">

- <value>请手动关闭正在运行的v2rayN,否则可能升级失败。</value>

- </data>

- <data name="StartUnzipping" xml:space="preserve">

- <value>开始解压缩更新包...</value>

- </data>

- <data name="SuccessUnzipping" xml:space="preserve">

- <value>解压缩更新包成功。</value>

- </data>

- <data name="FailedUnzipping" xml:space="preserve">

- <value>解压缩更新包失败。</value>

- </data>

- <data name="FailedUpgrade" xml:space="preserve">

- <value>升级失败。</value>

- </data>

- <data name="SuccessUpgrade" xml:space="preserve">

- <value>升级成功。</value>

- </data>

- <data name="Information" xml:space="preserve">

- <value>提示</value>

- </data>

-</root>

\ No newline at end of file

--- v2rayN/AmazTool/UpgradeApp.cs

@@ -8,17 +8,17 @@ namespace AmazTool

{

public static void Upgrade(string fileName)

{

- Console.WriteLine($"{Resx.Resource.StartUnzipping}\n{fileName}");

+ Console.WriteLine($"{LocalizationHelper.GetLocalizedValue("Start_Unzipping")}\n{fileName}");

Waiting(9);

if (!File.Exists(fileName))

{

- Console.WriteLine(Resx.Resource.UpgradeFileNotFound);

+ Console.WriteLine(LocalizationHelper.GetLocalizedValue("Upgrade_File_Not_Found"));

return;

}

- Console.WriteLine(Resx.Resource.TryTerminateProcess);

+ Console.WriteLine(LocalizationHelper.GetLocalizedValue("Try_Terminate_Process"));

try

{

var existing = Process.GetProcessesByName(V2rayN);

@@ -35,10 +35,10 @@ namespace AmazTool

catch (Exception ex)

{

// Access may be denied without admin right. The user may not be an administrator.

- Console.WriteLine(Resx.Resource.FailedTerminateProcess + ex.StackTrace);

+ Console.WriteLine(LocalizationHelper.GetLocalizedValue("Failed_Terminate_Process") + ex.StackTrace);

}

- Console.WriteLine(Resx.Resource.StartUnzipping);

+ Console.WriteLine(LocalizationHelper.GetLocalizedValue("Start_Unzipping"));

StringBuilder sb = new();

try

{

@@ -81,16 +81,16 @@ namespace AmazTool

}

catch (Exception ex)

{

- Console.WriteLine(Resx.Resource.FailedUpgrade + ex.StackTrace);

+ Console.WriteLine(LocalizationHelper.GetLocalizedValue("Failed_Upgrade") + ex.StackTrace);

//return;

}

if (sb.Length > 0)

{

- Console.WriteLine(Resx.Resource.FailedUpgrade + sb.ToString());

+ Console.WriteLine(LocalizationHelper.GetLocalizedValue("Failed_Upgrade") + sb.ToString());

//return;

}

- Console.WriteLine(Resx.Resource.Restartv2rayN);

+ Console.WriteLine(LocalizationHelper.GetLocalizedValue("Restart_v2rayN"));

Waiting(9);

Process process = new()

{

|

v2rayn

|

2dust

|

C#

|

C#

| 75,986

| 12,289

|

A GUI client for Windows, Linux and macOS, support Xray and sing-box and others

|

2dust_v2rayn

|

CODE_IMPROVEMENT

|

simplify decoder draining logic

|

95ecd740aeffc8341172a93a5dc4f78ab9a9fd78

|

2024-08-28 13:25:36

|

Edward Hsing

|

Update README.md

| false

| 1

| 0

| 1

|

--- README.md

@@ -1,7 +1,6 @@

# US.KG – A FREE NAME FOR EVERYONE

[Registry Website (https://nic.us.kg/)](https://nic.us.kg/)

#### Your request is typically processed within 15 minutes after submission.

-#### Please DO NOT download any untrusted binary files from "issues" and run them, they are viruses! We will not ask you to download any files

## Domain names no longer cost

Now, regardless of your project, whether you’re an individual or an organization, you can easily register and own your own *.US.KG domain name, 100% completely free. You can host your website with any third-party DNS service you like, such as Cloudflare, FreeDNS by afraid, hostry…

|

freedomain

|

digitalplatdev

|

HTML

|

HTML

| 41,142

| 933

|

DigitalPlat FreeDomain: Free Domain For Everyone

|

digitalplatdev_freedomain

|

DOC_CHANGE

|

changes in readme

|

0f6d0a44eac8accfbef6c8940a83ea0c19c92d21

|

2023-12-30 22:43:27

|

Kingkor Roy Tirtho

|

chore: fix not closing player

| false

| 2

| 3

| 5

|

--- lib/components/player/player.dart

@@ -95,8 +95,9 @@ class PlayerView extends HookConsumerWidget {

final topPadding = MediaQueryData.fromView(View.of(context)).padding.top;

return PopScope(

- canPop: false,

- onPopInvoked: (didPop) async {

+ canPop: panelController.isPanelOpen,

+ onPopInvoked: (canPop) async {

+ if (!canPop) return;

panelController.close();

},

child: IconTheme(

|

spotube

|

krtirtho

|

Dart

|

Dart

| 35,895

| 1,491

|

🎧 Open source Spotify client that doesn't require Premium nor uses Electron! Available for both desktop & mobile!

|

krtirtho_spotube

|

BUG_FIX

|

not closing player is fixed

|

e35ab35930a837561e3604baaaeff6bc26b49863

|

2025-02-27 19:09:28

|

Niklas Mischkulnig

|

Turbopack: prevent panic in swc issue emitter (#76595)

| false

| 46

| 18

| 64

|

--- turbopack/crates/turbopack-core/src/issue/analyze.rs

@@ -23,7 +23,7 @@ pub struct AnalyzeIssue {

impl AnalyzeIssue {

#[turbo_tasks::function]

pub fn new(

- severity: IssueSeverity,

+ severity: ResolvedVc<IssueSeverity>,

source_ident: ResolvedVc<AssetIdent>,

title: ResolvedVc<RcStr>,

message: ResolvedVc<StyledString>,

@@ -31,7 +31,7 @@ impl AnalyzeIssue {

source: Option<IssueSource>,

) -> Vc<Self> {

Self {

- severity: severity.resolved_cell(),

+ severity,

source_ident,

title,

message,

--- turbopack/crates/turbopack-core/src/issue/mod.rs

@@ -30,7 +30,7 @@ use crate::{

};

#[turbo_tasks::value(shared)]

-#[derive(PartialOrd, Ord, Copy, Clone, Hash, Debug, DeterministicHash, TaskInput)]

+#[derive(PartialOrd, Ord, Copy, Clone, Hash, Debug, DeterministicHash)]

#[serde(rename_all = "camelCase")]

pub enum IssueSeverity {

Bug,

--- turbopack/crates/turbopack-ecmascript/src/references/esm/export.rs

@@ -413,7 +413,7 @@ pub async fn expand_star_exports(

async fn emit_star_exports_issue(source_ident: Vc<AssetIdent>, message: RcStr) -> Result<()> {

AnalyzeIssue::new(

- IssueSeverity::Warning,

+ IssueSeverity::Warning.cell(),

source_ident,

Vc::cell("unexpected export *".into()),

StyledString::Text(message).cell(),

--- turbopack/crates/turbopack-ecmascript/src/references/mod.rs

@@ -939,7 +939,7 @@ pub(crate) async fn analyse_ecmascript_module_internal(

analysis.set_async_module(async_module);

} else if let Some(span) = top_level_await_span {

AnalyzeIssue::new(

- IssueSeverity::Error,

+ IssueSeverity::Error.cell(),

source.ident(),

Vc::cell("unexpected top level await".into()),

StyledString::Text("top level await is only supported in ESM modules.".into())

--- turbopack/crates/turbopack-swc-utils/src/emitter.rs

@@ -27,46 +27,19 @@ impl IssueCollector {

};

for issue in issues {

- AnalyzeIssue::new(

- issue.severity,

- issue.source.ident(),

- Vc::cell(issue.title),

- issue.message.cell(),

- issue.code,

- issue.issue_source,

- )

- .to_resolved()

- .await?

- .emit();

+ issue.to_resolved().await?.emit();

}

Ok(())

}

pub fn last_emitted_issue(&self) -> Option<Vc<AnalyzeIssue>> {

let inner = self.inner.lock();

- inner.emitted_issues.last().map(|issue| {

- AnalyzeIssue::new(

- issue.severity,

- issue.source.ident(),

- Vc::cell(issue.title.clone()),

- issue.message.clone().cell(),

- issue.code.clone(),

- issue.issue_source.clone(),

- )

- })

+ inner.emitted_issues.last().copied()

}

}

struct IssueCollectorInner {

- emitted_issues: Vec<PlainAnalyzeIssue>,

-}

-struct PlainAnalyzeIssue {

- severity: IssueSeverity,

- source: ResolvedVc<Box<dyn Source>>,

- title: RcStr,

- message: StyledString,

- code: Option<RcStr>,

- issue_source: Option<IssueSource>,

+ emitted_issues: Vec<Vc<AnalyzeIssue>>,

}

pub struct IssueEmitter {

@@ -115,7 +88,7 @@ impl Emitter for IssueEmitter {

.as_ref()

.is_some_and(|d| matches!(d, DiagnosticId::Lint(_)));

- let severity = if is_lint {

+ let severity = (if is_lint {

IssueSeverity::Suggestion

} else {

match level {

@@ -128,7 +101,8 @@ impl Emitter for IssueEmitter {

Level::Cancelled => IssueSeverity::Error,

Level::FailureNote => IssueSeverity::Note,

}

- };

+ })

+ .resolved_cell();

let title;

if let Some(t) = self.title.as_ref() {

@@ -144,16 +118,14 @@ impl Emitter for IssueEmitter {

});

// TODO add other primary and secondary spans with labels as sub_issues

- // This can be invoked by swc on different threads, so we cannot call any turbo-tasks or

- // create cells here.

- let issue = PlainAnalyzeIssue {

- severity,

- source: self.source,

- title,

- message: StyledString::Text(message.into()),

+ let issue = AnalyzeIssue::new(

+ *severity,

+ self.source.ident(),

+ Vc::cell(title),

+ StyledString::Text(message.into()).cell(),

code,

- issue_source: source,

- };

+ source,

+ );

let mut inner = self.inner.lock();

inner.emitted_issues.push(issue);

|

next.js

|

vercel

|

JavaScript

|

JavaScript

| 129,891

| 27,821

|

The React Framework

|

vercel_next.js

|

CONFIG_CHANGE

|

Obvious

|

991609379c1651e8ad19f9bf9d89dc7895d86627

|

2023-05-03 10:23:12

|

Guide

|

[docs update]简单完善

| false

| 83

| 68

| 151

|

--- README.md

@@ -33,6 +33,7 @@

- [项目介绍](./docs/javaguide/intro.md)

- [贡献指南](./docs/javaguide/contribution-guideline.md)

- [常见问题](./docs/javaguide/faq.md)

+- [项目待办](./docs/javaguide/todo.md)

## Java

--- docs/.vuepress/navbar.ts

@@ -4,7 +4,7 @@ export default navbar([

{ text: "面试指南", icon: "java", link: "/home.md" },

{

text: "知识星球",

- icon: "planet",

+ icon: "code",

link: "/about-the-author/zhishixingqiu-two-years.md",

},

{ text: "开源项目", icon: "github", link: "/open-source-project/" },

@@ -23,7 +23,12 @@ export default navbar([

text: "更新历史",

icon: "history",

link: "/timeline/",

- }

+ },

+ {

+ text: "旧版入口(不推荐)",

+ icon: "java",

+ link: "https://snailclimb.gitee.io/javaguide/#/",

+ },

],

},

]);

--- docs/.vuepress/sidebar/about-the-author.ts

@@ -3,7 +3,7 @@ import { arraySidebar } from "vuepress-theme-hope";

export const aboutTheAuthor = arraySidebar([

{

text: "个人经历",

- icon: "experience",

+ icon: "zuozhe",

collapsible: false,

children: [

"internet-addiction-teenager",

--- docs/.vuepress/sidebar/index.ts

@@ -11,6 +11,7 @@ export default sidebar({

"/books/": books,

"/about-the-author/": aboutTheAuthor,

"/high-quality-technical-articles/": highQualityTechnicalArticles,

+ "/javaguide/": ["intro", "history", "contribution-guideline", "faq", "todo"],

"/zhuanlan/": [

"java-mian-shi-zhi-bei",

"handwritten-rpc-framework",

@@ -18,17 +19,6 @@ export default sidebar({

],

// 必须放在最后面

"/": [

- {

- text: "必看",

- icon: "star",

- collapsible: true,

- prefix: "javaguide/",

- children: [

- "intro",

- "contribution-guideline",

- "faq",

- ],

- },

{

text: "面试准备",

icon: "interview",

@@ -374,7 +364,7 @@ export default sidebar({

{

text: "常用框架",

prefix: "system-design/framework/",

- icon: "component",

+ icon: "framework",

collapsible: true,

children: [

{

@@ -403,7 +393,7 @@ export default sidebar({

},

{

text: "系统设计",

- icon: "design",

+ icon: "xitongsheji",

prefix: "system-design/",

collapsible: true,

children: [

@@ -453,7 +443,6 @@ export default sidebar({

text: "理论&算法&协议",

icon: "suanfaku",

prefix: "protocol/",

- collapsible: true,

children: [

"cap-and-base-theorem",

"paxos-algorithm",

@@ -461,8 +450,13 @@ export default sidebar({

"gossip-protocl",

],

},

+ "api-gateway",

+ "distributed-id",

+ "distributed-lock",

+ "distributed-transaction",

+ "distributed-configuration-center",

{

- text: "RPC详解",

+ text: "RPC(远程调用)详解",

prefix: "rpc/",

icon: "network",

collapsible: true,

@@ -479,11 +473,6 @@ export default sidebar({

"zookeeper/zookeeper-in-action",

],

},

- "api-gateway",

- "distributed-id",

- "distributed-lock",

- "distributed-transaction",

- "distributed-configuration-center",

],

},

{

--- docs/.vuepress/sidebar/open-source-project.ts

@@ -14,7 +14,7 @@ export const openSourceProject = arraySidebar([

{

text: "系统设计",

link: "system-design",

- icon: "design",

+ icon: "xitongsheji",

},

{

text: "工具类库",

--- docs/.vuepress/theme.ts

@@ -10,7 +10,7 @@ export default hopeTheme({

logo: "/logo.png",

hostname: "https://javaguide.cn/",

- iconAssets: "//at.alicdn.com/t/c/font_2922463_kweia6fbo9.css",

+ iconAssets: "//at.alicdn.com/t/c/font_2922463_9aayheyb3v7.css",

author: {

name: "Guide",

--- docs/about-the-author/zhishixingqiu-two-years.md

@@ -127,7 +127,7 @@ star: 2

-**方式二(推荐)** :添加我的个人微信(**javaguide1024**)领取一个 **30** 元的星球专属优惠券(一定要备注“优惠卷”)。

+**方式二(推荐)** :添加我的个人微信(**guidege666**)领取一个 **30** 元的星球专属优惠券(一定要备注“优惠卷”)。

**一定要备注“优惠卷”**,不然通过不了。

--- docs/cs-basics/network/other-network-questions2.md

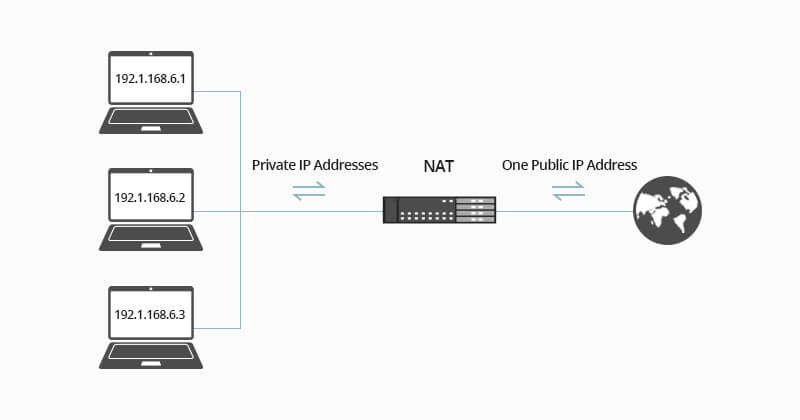

@@ -137,8 +137,6 @@ NAT 不光可以缓解 IPv4 地址资源短缺的问题,还可以隐藏内部

-相关阅读:[NAT 协议详解(网络层)](./nat.md)。

-

## ARP

### 什么是 Mac 地址?

--- docs/high-performance/cdn.md

@@ -1,7 +1,7 @@

---

title: CDN(内容分发网络)详解

category: 高性能

-icon: cdn

+icon: "cdn"

head:

- - meta

- name: keywords

--- docs/high-performance/load-balancing.md

@@ -1,7 +1,7 @@

---

title: 负载均衡详解

category: 高性能

-icon: fuzaijunheng

+icon: "fuzaijunheng"

head:

- - meta

- name: keywords

--- docs/high-performance/read-and-write-separation-and-library-subtable.md

@@ -1,7 +1,7 @@

---

title: 读写分离和分库分表详解

category: 高性能

-icon: mysql

+icon: "mysql"

head:

- - meta

- name: keywords

--- docs/high-performance/sql-optimization.md

@@ -1,7 +1,7 @@

---

-title: 常见SQL优化手段总结(付费)

+title: 常见 SQL 优化手段总结(付费)

category: 高性能

-icon: SQL

+icon: "mysql"

head:

- - meta

- name: keywords

--- docs/home.md

@@ -6,7 +6,7 @@ title: JavaGuide(Java学习&&面试指南)

::: tip 友情提示

- **面试专版** :准备 Java 面试的小伙伴可以考虑面试专版:**[《Java 面试指北 》](./zhuanlan/java-mian-shi-zhi-bei.md)** (质量很高,专为面试打造,配合 JavaGuide 食用)。

-- **知识星球** :专属面试小册/一对一交流/简历修改/专属求职指南,欢迎加入 **[JavaGuide 知识星球](./about-the-author/zhishixingqiu-two-years.md)**(点击链接即可查看星球的详细介绍,一定确定自己真的需要再加入)。

+- **知识星球** :专属面试小册/一对一交流/简历修改/专属求职指南,欢迎加入 **[JavaGuide 知识星球](./about-the-author/zhishixingqiu-two-years.md)**(点击链接即可查看星球的详细介绍,一定一定一定确定自己真的需要再加入,一定一定要看完详细介绍之后再加我)。

- **转载须知** :以下所有文章如非文首说明为转载皆为 JavaGuide 原创,转载在文首注明出处,如发现恶意抄袭/搬运,会动用法律武器维护自己的权益。让我们一起维护一个良好的技术创作环境!

:::

@@ -26,6 +26,13 @@ title: JavaGuide(Java学习&&面试指南)

[](https://javaguide.cn/about-the-author/zhishixingqiu-two-years.html)

+## 项目相关

+

+- [项目介绍](./javaguide/intro.md)

+- [贡献指南](./javaguide/contribution-guideline.md)

+- [常见问题](./javaguide/faq.md)

+- [项目代办](./javaguide/todo.md)

+

## Java

### 基础

@@ -140,7 +147,7 @@ JVM 这部分内容主要参考 [JVM 虚拟机规范-Java8](https://docs.oracle.

- [TCP 三次握手和四次挥手(传输层)](./cs-basics/network/tcp-connection-and-disconnection.md)

- [TCP 传输可靠性保障(传输层)](./cs-basics/network/tcp-reliability-guarantee.md)

- [ARP 协议详解(网络层)](./cs-basics/network/arp.md)

-- [NAT 协议详解(网络层)](./cs-basics/network/nat.md)

+- [NAT 协议详解(网络层)](./docs/cs-basics/network/nat.md)

- [网络攻击常见手段总结(安全)](./cs-basics/network/network-attack-means.md)

### 数据结构

--- docs/interview-preparation/interview-experience.md

@@ -1,7 +1,6 @@

---

title: 优质面经汇总(付费)

category: 知识星球

-icon: experience

---

古人云:“**他山之石,可以攻玉**” 。善于学习借鉴别人的面试的成功经验或者失败的教训,可以让自己少走许多弯路。

--- docs/interview-preparation/key-points-of-interview.md

@@ -1,7 +1,6 @@

---

-title: Java面试重点总结(重要)

+title: Java面试重点总结

category: 面试准备

-icon: star

---

::: tip 友情提示

--- docs/interview-preparation/project-experience-guide.md

@@ -1,7 +1,6 @@

---

title: 项目经验指南

category: 面试准备

-icon: project

---

::: tip 友情提示

--- docs/interview-preparation/resume-guide.md

@@ -1,7 +1,6 @@

---

-title: 程序员简历编写指南(重要)

+title: 程序员简历编写指南

category: 面试准备

-icon: jianli

---

::: tip 友情提示

--- docs/interview-preparation/self-test-of-common-interview-questions.md

@@ -1,7 +1,6 @@

---

title: 常见面试题自测(付费)

category: 知识星球

-icon: security-fill

---

面试之前,强烈建议大家多拿常见的面试题来进行自测,检查一下自己的掌握情况,这是一种非常实用的备战技术面试的小技巧。

--- docs/interview-preparation/teach-you-how-to-prepare-for-the-interview-hand-in-hand.md

@@ -1,7 +1,6 @@

---

-title: 手把手教你如何准备Java面试(重要)

+title: 手把手教你如何准备Java面试

category: 知识星球

-icon: path

---

::: tip 友情提示

--- docs/javaguide/contribution-guideline.md

@@ -1,7 +1,6 @@

---

title: 贡献指南

category: 走近项目

-icon: guide

---

欢迎参与 JavaGuide 的维护工作,这是一件非常有意义的事情。详细信息请看:[JavaGuide 贡献指南](https://zhuanlan.zhihu.com/p/464832264) 。

--- docs/javaguide/faq.md

@@ -1,13 +1,8 @@

---

title: 常见问题

category: 走近项目

-icon: help

---

-## JavaGuide 是否支持 RSS?

-

-必须支持!推荐 RSS 订阅本网站获取最新更新。

-

## JavaGuide 有没有 PDF 版本?

由于 JavaGuide 内容在持续完善,所以并没有一个完全与之同步的 PDF 版本提供。如果你想要 PDF 版本的话,可以考虑 **《JavaGuide 面试突击版》** ,这是对 JavaGuide 内容的浓缩总结。

@@ -39,7 +34,7 @@ JavaGuide 这个项目诞生一年左右就有出版社的老师联系我了,

- JavaGuide 的很多内容我还不是很满意,也一直在维护中,细心的小伙伴看我的提交记录就明白了。

- 开源版本更容易维护和修改,也能让更多人更方便地参与到项目的建设中,这也是我最初做这个项目的初衷。

- 我觉得出书是一件神圣的事情,自认能力还不够。

-- 个人精力有限,不光有本职工作,还弄了一个[知识星球](https://javaguide.cn/about-the-author/zhishixingqiu-two-years.html)赚点外快,还要维护完善 JavaGuide。

+- 个人精力有限,不光有本职工作,还弄了一个[知识星球](http://localhost:8080/about-the-author/zhishixingqiu-two-years.html)赚点外快,还要维护完善 JavaGuide。

- ......

这几年一直在默默完善,真心希望 JavaGuide 越来越好,帮助到更多朋友!也欢迎大家参与进来!

--- docs/javaguide/intro.md

@@ -1,10 +1,9 @@

---

title: 项目介绍

category: 走近项目

-icon: about

---

-我是 19 年大学毕业的,在大三准备面试的时候,我开源了 JavaGuide 。我把自己准备面试过程中的一些总结都毫不保留地通过 JavaGuide 分享了出来。

+在大三准备面试的时候,我开源了 JavaGuide 。我把自己准备面试过程中的一些总结都毫不保留地通过 JavaGuide 分享了出来。

开源 JavaGuide 初始想法源于自己的个人那一段比较迷茫的学习经历。主要目的是为了通过这个开源平台来帮助一些在学习 Java 或者面试过程中遇到问题的小伙伴。

@@ -13,23 +12,7 @@ icon: about

相比于其他通过 JavaGuide 学到东西或者说助力获得 offer 的朋友来说 , JavaGuide 对我的意义更加重大。不夸张的说,有时候真的感觉像是自己的孩子一点一点长大一样,我一直用心呵护着它。虽然,我花了很长时间来维护它,但是,我觉得非常值得!非常有意义!

-希望大家对面试不要抱有侥幸的心理,打铁还需自身硬! 我希望这个文档是为你学习 Java 指明方向,而不仅仅是用来应付面试用的。

-

-另外,JavaGuide 不可能把面试中的所有内容都给涵盖住,尤其是阿里、美团这种挖的比较深入的面试。你可以根据你的目标公司进行针对性的深入学习,多看一些目标公司的面经进行查漏补缺,没事就自测一下,多多思考总结。

-

-加油!奥利给!

-

-## 学习建议

-

-JavaGuide 整体目录规划已经非常清晰了,你可以从头开始学习,也可以根据自身情况来选择性地学习。

-

-## 知识星球

-

-对于准备面试的同学来说,强烈推荐我创建的一个纯粹的[Java 面试知识星球](../about-the-author/zhishixingqiu-two-years.md),干货非常多,学习氛围也很不错!

-

-下面是星球提供的部分服务(点击下方图片即可获取知识星球的详细介绍):

-

-[](../about-the-author/zhishixingqiu-two-years.md)

+希望大家对面试不要抱有侥幸的心理,打铁还需自身硬! 我希望这个文档是为你学习 Java 指明方向,而不是用来应付面试用的。加油!奥利给!

## 项目说明

@@ -40,3 +23,5 @@ JavaGuide 整体目录规划已经非常清晰了,你可以从头开始学习

## 贡献者

[你可以点此链接查看 JavaGuide 的所有贡献者。](https://github.com/Snailclimb/JavaGuide/graphs/contributors) 感谢你们让 JavaGuide 变得更好!如果你们来到武汉一定要找我,我请你们吃饭玩耍。

+

+欢迎参与 [JavaGuide 的维护工作](https://zhuanlan.zhihu.com/p/464832264),这是一件非常有意义的事情。

--- docs/javaguide/todo.md

@@ -0,0 +1,13 @@

+---

+title: 项目待办

+category: 走近项目

+---

+

+- [x] 数据结构内容完善

+- [x] Java 基础内容完善

+- [x] 大篇幅文章拆分

+- [ ] JVM 内容更新完善

+- [ ] 计算机网络知识点完善

+- [ ] 分布式常见理论和算法总结完善

+

+欢迎参与 JavaGuide 的维护工作,这是一件非常有意义的事情。详细信息请看:[JavaGuide 贡献指南](./contribution-guideline.md) 。

--- docs/open-source-project/readme.md

@@ -16,7 +16,7 @@ category: 开源项目

另外,我的公众号还会定期分享优质开源项目,每月一期,每一期我都会精选 5 个高质量的 Java 开源项目。

-目前已经更新到了第 19 期:

+目前已经更新到了第 16 期:

1. [一款基于 Spring Boot + Vue 的一站式开源持续测试平台](http://mp.weixin.qq.com/s?__biz=Mzg2OTA0Njk0OA==&mid=2247515383&idx=1&sn=ba7244020c05d966b483d8c302d54e85&chksm=cea1f33cf9d67a2a111bcf6cadc3cc1c44828ba2302cd3e13bbd88349e43d4254808e6434133&scene=21#wechat_redirect)。

2. [用 Java 写个沙盒塔防游戏!已上架 Steam,Apple Store](https://mp.weixin.qq.com/s?__biz=Mzg2OTA0Njk0OA==&mid=2247515981&idx=1&sn=e4b9c06af65f739bdcdf76bdc35d59f6&chksm=cea1f086f9d679908bd6604b1c42d67580160d9789951f3707ad2f5de4d97aa72121d8fe777e&token=435278690&lang=zh_CN&scene=21#wechat_redirect)

@@ -35,8 +35,6 @@ category: 开源项目

15. [31.2k!这是我见过最强的后台管理系统 !!](https://mp.weixin.qq.com/s/esaivn2z_66CcrRJlDYLEA)

16. [14.3k star,这是我见过最强的第三方登录工具库!!](https://mp.weixin.qq.com/s/6-TnCHUMEIFWQVl-pIWBOA)

17. [3.2k!这是我见过最强的消息推送平台!!](https://mp.weixin.qq.com/s/heag76H4UwZmr8oBY_2gcw)

-18. [好家伙,又一本技术书籍开源了!!](https://mp.weixin.qq.com/s/w-JuBlcqCeAZR0xUFWzvHQ)

-19. [开箱即用的 ChatGPT Java SDK!支持 GPT3.5、 GPT4 API](https://mp.weixin.qq.com/s/WhI2K1VF0h_57TEVGCwuCA)

推荐你在我的公众号“**JavaGuide**”回复“**开源**”在线阅读[「优质开源项目推荐」](https://mp.weixin.qq.com/mp/appmsgalbum?__biz=Mzg2OTA0Njk0OA==&action=getalbum&album_id=1345382825083895808&scene=173&from_msgid=2247516459&from_itemidx=1&count=3&nolastread=1#wechat_redirect)系列。

--- docs/readme.md

@@ -21,6 +21,7 @@ footer: |-

## 关于网站

- [项目介绍](./javaguide/intro.md)

+- [网站历史](./javaguide/history.md)

- [贡献指南](./javaguide/contribution-guideline.md)

- [常见问题](./javaguide/faq.md)

@@ -31,13 +32,13 @@ footer: |-

- [我的知识星球快 3 岁了!](./about-the-author/zhishixingqiu-two-years.md)

- [坚持写技术博客六年了](./about-the-author/writing-technology-blog-six-years.md)

-## 知识星球

+## PDF

-对于准备面试的同学来说,强烈推荐我创建的一个纯粹的[Java 面试知识星球](../about-the-author/zhishixingqiu-two-years.md),干货非常多,学习氛围也很不错!

-

-下面是星球提供的部分服务(点击下方图片即可获取知识星球的详细介绍):

-

-[](../about-the-author/zhishixingqiu-two-years.md)

+- [《JavaGuide 面试突击版》](https://mp.weixin.qq.com/s?__biz=Mzg2OTA0Njk0OA==&mid=100029614&idx=1&sn=62993c5cf10265cb7018db7f1ec67250&chksm=4ea1fb6579d67273499b7243641d4ef372decd08047bfbb6dfb5843ef81c7ccba209086cf345#rd)

+- [《消息队列常见知识点&面试题总结》](https://t.1yb.co/Fy0u)

+- [《Java 工程师进阶知识完全扫盲》](https://t.1yb.co/GXLF)

+- [《分布式相关面试题汇总》](https://t.1yb.co/GXLF)

+- [《图解计算机基础》](https://mp.weixin.qq.com/s?__biz=Mzg2OTA0Njk0OA==&mid=100021725&idx=1&sn=2db9664ca25363139a81691043e9fd8f&chksm=4ea19a1679d61300d8990f7e43bfc7f476577a81b712cf0f9c6f6552a8b219bc081efddb5c54#rd)

## 公众号

--- docs/snippets/planet.snippet.md

@@ -1,10 +1,10 @@

[《Java 面试指北》](https://javaguide.cn/zhuanlan/java-mian-shi-zhi-bei.html)(点击链接即可查看详细介绍)的部分内容展示如下,你可以将其看作是 [JavaGuide](https://javaguide.cn/#/) 的补充完善,两者可以配合使用。

-

+

## 星球介绍

-为了帮助更多同学准备 Java 面试以及学习 Java ,我创建了一个纯粹的[ Java 面试知识星球](https://javaguide.cn/about-the-author/zhishixingqiu-two-years.html)。虽然收费只有培训班/训练营的百分之一,但是知识星球里的内容质量更高,提供的服务也更全面,非常适合准备 Java 面试和学习 Java 的同学。

+为了帮助更多同学准备 Java 面试以及学习 Java ,我创建了一个纯粹的[知识星球](https://javaguide.cn/about-the-author/zhishixingqiu-two-years.html)。虽然收费只有培训班/训练营的百分之一,但是知识星球里的内容质量更高,提供的服务也更全面。

**欢迎准备 Java 面试以及学习 Java 的同学加入我的 [知识星球](https://javaguide.cn/about-the-author/zhishixingqiu-two-years.html),干货非常多,学习氛围也很不错!收费虽然是白菜价,但星球里的内容或许比你参加上万的培训班质量还要高。**

@@ -22,7 +22,7 @@

-**方式二(推荐)** :添加我的个人微信(**javaguide1024**)领取一个 **30** 元的星球专属优惠券(一定要备注“优惠卷”)。

+**方式二(推荐)** :添加我的个人微信(**guidege666**)领取一个 **30** 元的星球专属优惠券(一定要备注“优惠卷”)。

**一定要备注“优惠卷”**,不然通过不了。

@@ -30,7 +30,7 @@

**无任何套路,无任何潜在收费项。用心做内容,不割韭菜!**

-进入星球之后,记得查看 **[星球使用指南](https://t.zsxq.com/0d18KSarv)** (一定要看!) 。

+进入星球之后,记得查看[星球使用指南](https://t.zsxq.com/0d18KSarv)(一定要看!) 。

随着时间推移,星球积累的干货资源越来越多,我花在星球上的时间也越来越多,星球的价格会逐步向上调整,想要加入的同学一定要尽早。

|

javaguide

|

snailclimb

|

Java

|

Java

| 148,495

| 45,728

|

「Java学习+面试指南」一份涵盖大部分 Java 程序员所需要掌握的核心知识。准备 Java 面试,首选 JavaGuide!

|

snailclimb_javaguide

|

DOC_CHANGE

|

Obvious

|

c3c2ad1454a5df39b10596692934081797da206b

| null |

Martin Probst

|

fix: improve type of TreeNode.children.

| false

| 1

| 1

| 0

|

--- element_injector.ts

@@ -150,7 +150,7 @@ export class TreeNode<T extends TreeNode<any>> {

get parent() { return this._parent; }

// TODO(rado): replace with a function call, does too much work for a getter.

- get children(): TreeNode<any>[] {

+ get children(): T[] {

var res = [];

var child = this._head;

while (child != null) {

|

angular_angular.json

| null | null | null | null | null | null |

angular_angular.json

|

BUG_FIX

|

5, fix written in commit msg

|

1c50612559a78dce9c108f7e7b816d1b84540fe4

|

2023-09-10 21:09:16

|

Kingkor Roy Tirtho

|

fix: limit cover image upload to allowed 256kb size

| false

| 78

| 30

| 108

|

--- lib/components/playlist/playlist_create_dialog.dart

@@ -1,5 +1,4 @@

import 'dart:convert';

-import 'dart:io';

import 'package:collection/collection.dart';

import 'package:flutter/material.dart';

@@ -155,85 +154,39 @@ class PlaylistCreateDialog extends HookConsumerWidget {

child: ListView(

shrinkWrap: true,

children: [

- FormField<XFile?>(

- initialValue: image.value,

- onSaved: (newValue) {

- image.value = newValue;

- },

- validator: (value) {

- if (value == null) return null;

- final file = File(value.path);

-

- if (file.lengthSync() > 256000) {

- return "Image size should be less than 256kb";

- }

- return null;

- },

- builder: (field) {

- return Center(

- child: Stack(

- children: [

- UniversalImage(

- path: field.value?.path ??

- TypeConversionUtils.image_X_UrlString(

- updatingPlaylist?.images,

- placeholder:

- ImagePlaceholder.collection,

- ),

- height: 200,

+ Center(

+ child: Stack(

+ children: [

+ UniversalImage(

+ path: image.value?.path ??

+ TypeConversionUtils.image_X_UrlString(

+ updatingPlaylist?.images,

+ placeholder: ImagePlaceholder.collection,

),

- Positioned(

- bottom: 20,

- right: 20,

- child: IconButton.filled(

- icon: const Icon(SpotubeIcons.edit),

- style: IconButton.styleFrom(

- backgroundColor:

- theme.colorScheme.surface,

- foregroundColor:

- theme.colorScheme.primary,

- elevation: 2,

- shadowColor: theme.colorScheme.onSurface,

- ),

- onPressed: () async {

- final imageFile = await ImagePicker()

- .pickImage(

- source: ImageSource.gallery);

+ height: 200,

+ ),

+ Positioned(

+ bottom: 20,

+ right: 20,

+ child: IconButton.filled(

+ icon: const Icon(SpotubeIcons.edit),

+ style: IconButton.styleFrom(

+ backgroundColor: theme.colorScheme.surface,

+ foregroundColor: theme.colorScheme.primary,

+ elevation: 2,

+ shadowColor: theme.colorScheme.onSurface,

+ ),

+ onPressed: () async {

+ final imageFile = await ImagePicker()

+ .pickImage(source: ImageSource.gallery);

- if (imageFile != null) {

- field.didChange(imageFile);

- field.validate();

- field.save();

- }

- },

- ),

- ),

- if (field.hasError)

- Positioned(

- bottom: 20,

- left: 20,

- child: Container(

- padding: const EdgeInsets.symmetric(

- horizontal: 8,

- vertical: 4,

- ),

- decoration: BoxDecoration(

- color: theme.colorScheme.error,

- borderRadius: BorderRadius.circular(4),

- ),

- child: Text(

- field.errorText ?? "",

- style: theme.textTheme.bodyMedium!

- .copyWith(

- color: theme.colorScheme.onError,

- ),

- ),

- ),

- ),

- ],

+ image.value = imageFile ?? image.value;

+ },

),

- );

- }),

+ ),

+ ],

+ ),

+ ),

const SizedBox(height: 10),

TextFormField(

controller: playlistName,

@@ -250,7 +203,6 @@ class PlaylistCreateDialog extends HookConsumerWidget {

hintText: context.l10n.description,

),

keyboardType: TextInputType.multiline,

- validator: ValidationBuilder().required().build(),

maxLines: 5,

),

const SizedBox(height: 10),

|

spotube

|

krtirtho

|

Dart

|

Dart

| 35,895

| 1,491

|

🎧 Open source Spotify client that doesn't require Premium nor uses Electron! Available for both desktop & mobile!

|

krtirtho_spotube

|

BUG_FIX

|

this commit fixes/polishes an earlier feature

|

b676bf3d4ccce96ce33a4b54b6236e0ae9b4526f

| null |

Tom Robinson

|

Remove unused babel-loader from babel-preset-react-app (#6780)

| false

| 0

| 1

| -1

|

--- package.json

@@ -32,7 +32,6 @@

"@babel/preset-react": "7.0.0",

"@babel/preset-typescript": "7.3.3",

"@babel/runtime": "7.4.3",

- "babel-loader": "8.0.5",

"babel-plugin-dynamic-import-node": "2.2.0",

"babel-plugin-macros": "2.5.1",

"babel-plugin-transform-react-remove-prop-types": "0.4.24"

|

facebook_create-react-app.json

| null | null | null | null | null | null |

facebook_create-react-app.json

|

CODE_IMPROVEMENT

|

4, removed redundant code

|

d9280bdfa9c67dcaf1909e6408ab43d209f2f800

|

2023-08-31 20:10:30

|

李国冬

|

fix-2023-08-31T22:40+08:00

| false

| 190

| 14

| 204

|

--- README.md

@@ -64,7 +64,7 @@

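### LLM Fine-Tuning in Practice

-Below we share **Parameter-Efficient Fine-Tuning of Large Models in Practice**; the series has 6 articles.

+Below we share the **Parameter-Efficient Fine-Tuning of Large Models in Practice** article series; the series has 6 articles.

| Tutorial | Code | Framework |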

| ----------------------------- | ----------------------------- | ----------------------------- |

--- docs/llm-base/ai-algo/README.md

@@ -26,12 +26,10 @@

- Usually seq_length equals max_position_embeddings.

- Number of key_value heads: This is the number of key_value heads that should be used to implement Grouped Query Attention. If

`num_key_value_heads=num_attention_heads`, the model will use Multi Head Attention (MHA), if

- `num_key_value_heads=1` the model will use Multi Query Attention (MQA) otherwise GQA is used. When

+ `num_key_value_heads=1 the model will use Multi Query Attention (MQA) otherwise GQA is used. When

converting a multi-head checkpoint to a GQA checkpoint, each group key and value head should be constructed

by meanpooling all the original heads within that group.

-

-

## LLaMA

| Model | LLaMA-7B | LLaMA-2-7B | LLaMA-13B | LLaMA-2-13B | LLaMA-30B | LLaMA-65B | LLaMA-2-70B |

@@ -39,7 +37,7 @@

| Vocabulary size (vocab_size) | 32000 | 32000 | 32000 | 32000 | 32000 | 32000 | 32000 |

| Transformer layers (n_layer, num_layers, num_hidden_layers) | 32 | 32 | 40 | 40 | 60 | 80 | 80 |

| Attention heads (num_attention_heads, n_head) | 32 | 32 | 40 | 40 | 52 | 64 | 64 |

-| key_value heads (num_key_value_heads) | N/A | 32 | N/A | 40 | N/A | N/A | 8 |

+| key_value heads (num_key_value_heads) | N/A | N/A | N/A | 40 | N/A | N/A | 8 |

| Hidden size (hidden_size) | 4096 | 4096 | 5120 | 5120 | 6656 | 8192 | 8192 |

| Feed-forward network hidden size (ffn_hidden_size, intermediate_size, n_inner) | 11008 | 11008 | 13824 | 13824 | 17920 | 22016 | 28672 |

| seq_length, n_ctx | 2048(max_position_embeddings) | 2048(max_position_embeddings) | 2048 | N/A | 2048 | | N/A |

--- docs/llm-base/ai-algo/transformer.md

@@ -12,7 +12,7 @@

-- Harvard annotated-transformer: https://github.com/harvardnlp/annotated-transformer/blob/master/AnnotatedTransformer.ipynb

+

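@@ -41,14 +41,6 @@ Besides the word Embedding, the Transformer also needs a position Embedding to represent

The position Embedding is denoted PE, and its dimension matches that of the word Embedding. PE can either be learned through training or computed with a fixed formula; the Transformer uses the latter.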

-- Overview: The Implemented Transformer: https://medium.com/@hunter-j-phillips/overview-the-implemented-transformer-eafd87fe9589

-- Multi-Head Attention: https://medium.com/@hunter-j-phillips/multi-head-attention-7924371d477a

-- Layer Normalization: https://medium.com/@hunter-j-phillips/layer-normalization-e9ae93eb3c9c

-- Positional Encoding: https://medium.com/@hunter-j-phillips/positional-encoding-7a93db4109e6

-

-

-

-

--- docs/llm-base/ai-algo/transformer/multi-head-attention.webp

Binary files a/docs/llm-base/ai-algo/transformer/multi-head-attention.webp and /dev/null differ

--- docs/llm-base/ai-algo/transformer/模型架构.md

@@ -3,6 +3,9 @@

+

+

+

## Absolute Positional Encoding

--- docs/llm-base/ai-hardware/README.md

@@ -20,7 +20,3 @@

-

-

-- NVIDIA GPUDirect: https://developer.nvidia.com/gpudirect

-

--- docs/llm-base/distribution-parallelism/multidimensional-hybrid-parallel/BloombergGPT模型超参数.png

Binary files "a/docs/llm-base/distribution-parallelism/multidimensional-hybrid-parallel/BloombergGPT\346\250\241\345\236\213\350\266\205\345\217\202\346\225\260.png" and /dev/null differ

--- docs/llm-base/distribution-parallelism/multidimensional-hybrid-parallel/README.md

@@ -1,5 +1,6 @@

- https://huggingface.co/docs/transformers/perf_train_gpu_many

-- https://huggingface.co/transformers/v4.12.5/parallelism.html

+

+

@@ -9,18 +10,12 @@

-| Model | DP | TP | PP | ZeRO Stage | FSDP(ZeRO Stage 3) | GPUs |

+| | DP | TP | PP | ZeRO Stage | FSDP(ZeRO Stage 3) | GPUs |

| ------------ | --- | --- | --- | ---------- | ------------------ | ----------------------- |

| Bloom-176B | 8 | 4 | 12 | ZeRO-1 | - | 384 × A100 80GB |

| CodeGeeX-13B | 192 | 8 | - | ZeRO-2 | - | 1,536 × Ascend 910 32GB |

| GLM-130B | 24 | 4 | 8 | ZeRO-1 | - | 768 × A100 40G |

-| OPT-175B | - | 8 | - | - | ✅ | 992 × 80GB A100 |

-| Megatron-Turing NLG(530B) | 16 | 8 | 35 | - | - | 4480 × A100 80G |

-| GPT-NeoX-20B | 12 | 2 | 4 |ZeRO-1 | - | 96 × A100 40G |

-

-

-

-

+| OPT-175B | - | 8 | - | - | | 992 × 80GB A100 |