Upload folder using huggingface_hub

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .gitignore +22 -0

- .gitmodules +6 -0

- README.md +153 -0

- arguments/__init__.py +118 -0

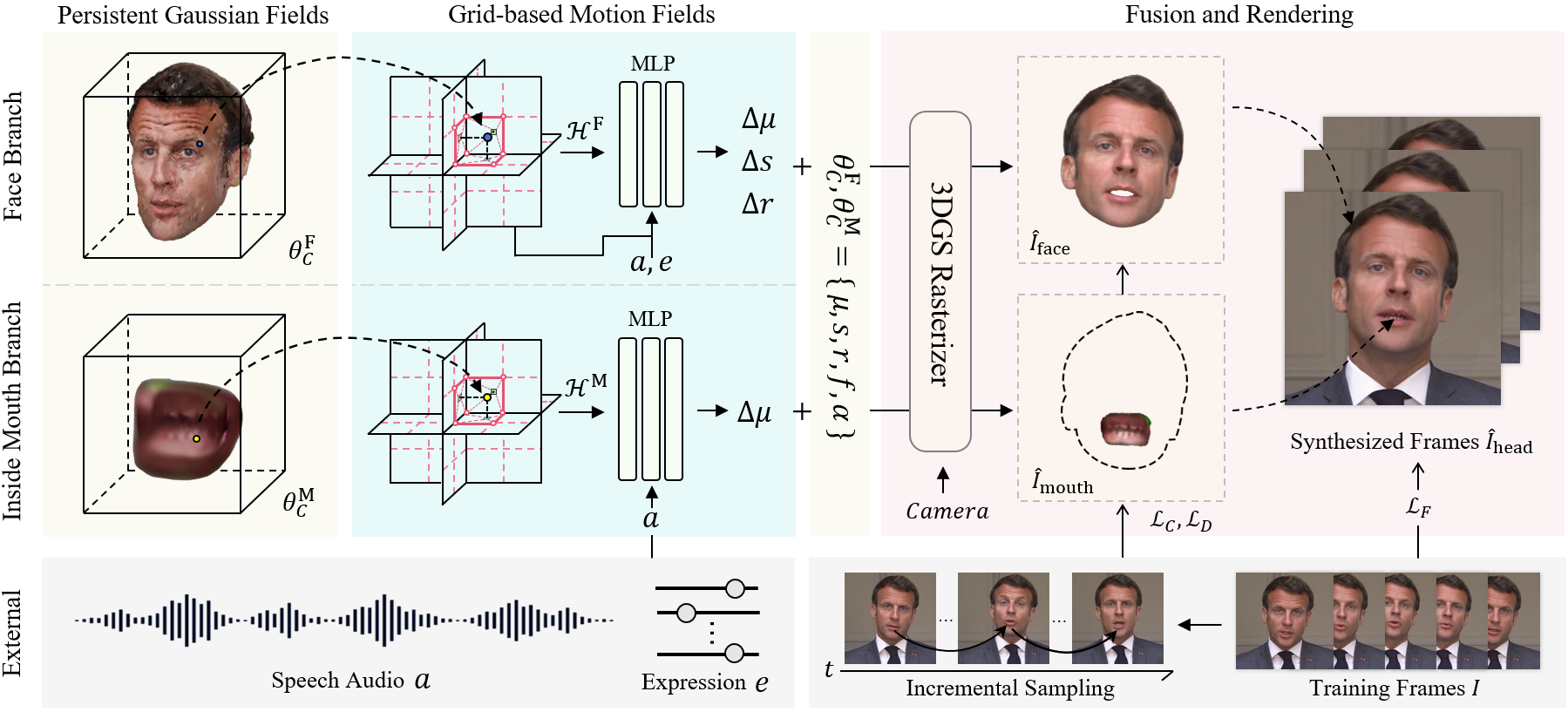

- assets/main.png +0 -0

- data/.gitkeep +0 -0

- data_utils/deepspeech_features/README.md +20 -0

- data_utils/deepspeech_features/deepspeech_features.py +274 -0

- data_utils/deepspeech_features/deepspeech_store.py +172 -0

- data_utils/deepspeech_features/extract_ds_features.py +130 -0

- data_utils/deepspeech_features/extract_wav.py +87 -0

- data_utils/deepspeech_features/fea_win.py +11 -0

- data_utils/easyportrait/create_teeth_mask.py +34 -0

- data_utils/easyportrait/local_configs/__base__/datasets/easyportrait_1024x1024.py +59 -0

- data_utils/easyportrait/local_configs/__base__/datasets/easyportrait_384x384.py +59 -0

- data_utils/easyportrait/local_configs/__base__/datasets/easyportrait_512x512.py +59 -0

- data_utils/easyportrait/local_configs/__base__/default_runtime.py +14 -0

- data_utils/easyportrait/local_configs/__base__/models/bisenetv2.py +80 -0

- data_utils/easyportrait/local_configs/__base__/models/fcn_resnet50.py +45 -0

- data_utils/easyportrait/local_configs/__base__/models/fpn_resnet50.py +36 -0

- data_utils/easyportrait/local_configs/__base__/models/lraspp.py +25 -0

- data_utils/easyportrait/local_configs/__base__/models/segformer.py +34 -0

- data_utils/easyportrait/local_configs/__base__/schedules/schedule_10k_adamw.py +11 -0

- data_utils/easyportrait/local_configs/__base__/schedules/schedule_160k_adamw.py +9 -0

- data_utils/easyportrait/local_configs/__base__/schedules/schedule_20k_adamw.py +11 -0

- data_utils/easyportrait/local_configs/__base__/schedules/schedule_40k_adamw.py +9 -0

- data_utils/easyportrait/local_configs/__base__/schedules/schedule_80k_adamw.py +9 -0

- data_utils/easyportrait/local_configs/easyportrait_experiments_v2/bisenet-fp/bisenetv2-fp.py +221 -0

- data_utils/easyportrait/local_configs/easyportrait_experiments_v2/bisenet-ps/bisenetv2-ps.py +218 -0

- data_utils/easyportrait/local_configs/easyportrait_experiments_v2/danet-fp/danet-fp.py +174 -0

- data_utils/easyportrait/local_configs/easyportrait_experiments_v2/danet-ps/danet-ps.py +171 -0

- data_utils/easyportrait/local_configs/easyportrait_experiments_v2/deeplab-fp/deeplabv3-fp.py +174 -0

- data_utils/easyportrait/local_configs/easyportrait_experiments_v2/deeplab-ps/deeplabv3-ps.py +171 -0

- data_utils/easyportrait/local_configs/easyportrait_experiments_v2/fastscnn-fp/fastscnn-fp.py +165 -0

- data_utils/easyportrait/local_configs/easyportrait_experiments_v2/fastscnn-ps/fastscnn-ps.py +162 -0

- data_utils/easyportrait/local_configs/easyportrait_experiments_v2/fcn-fp/fcn-fp.py +187 -0

- data_utils/easyportrait/local_configs/easyportrait_experiments_v2/fcn-ps/fcn-ps.py +184 -0

- data_utils/easyportrait/local_configs/easyportrait_experiments_v2/fpn-fp/fpn-fp.py +182 -0

- data_utils/easyportrait/local_configs/easyportrait_experiments_v2/fpn-ps/fpn-ps.py +179 -0

- data_utils/easyportrait/local_configs/easyportrait_experiments_v2/segformer-fp/segformer-fp.py +182 -0

- data_utils/easyportrait/local_configs/easyportrait_experiments_v2/segformer-ps/segformer-ps.py +179 -0

- data_utils/easyportrait/mmseg/.mim/configs +0 -0

- data_utils/easyportrait/mmseg/.mim/tools +0 -0

- data_utils/easyportrait/mmseg/__init__.py +62 -0

- data_utils/easyportrait/mmseg/apis/__init__.py +11 -0

- data_utils/easyportrait/mmseg/apis/inference.py +145 -0

- data_utils/easyportrait/mmseg/apis/test.py +233 -0

- data_utils/easyportrait/mmseg/apis/train.py +194 -0

- data_utils/easyportrait/mmseg/core/__init__.py +12 -0

- data_utils/easyportrait/mmseg/core/builder.py +33 -0

.gitignore

ADDED

|

@@ -0,0 +1,22 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

__pycache__/

|

| 2 |

+

build/

|

| 3 |

+

*.egg-info/

|

| 4 |

+

*.so

|

| 5 |

+

*.mp4

|

| 6 |

+

*.pth

|

| 7 |

+

|

| 8 |

+

data_utils/face_tracking/3DMM/*

|

| 9 |

+

data_utils/face_parsing/79999_iter.pth

|

| 10 |

+

|

| 11 |

+

*.pyc

|

| 12 |

+

.vscode

|

| 13 |

+

output*

|

| 14 |

+

build

|

| 15 |

+

gridencoder/gridencoder.egg-info

|

| 16 |

+

diff_rasterization/diff_rast.egg-info

|

| 17 |

+

diff_rasterization/dist

|

| 18 |

+

tensorboard_3d

|

| 19 |

+

screenshots

|

| 20 |

+

|

| 21 |

+

data/*

|

| 22 |

+

!*.gitkeep

|

.gitmodules

ADDED

|

@@ -0,0 +1,6 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

[submodule "submodules/simple-knn"]

|

| 2 |

+

path = submodules/simple-knn

|

| 3 |

+

url = https://gitlab.inria.fr/bkerbl/simple-knn.git

|

| 4 |

+

[submodule "submodules/diff-gaussian-rasterization"]

|

| 5 |

+

path = submodules/diff-gaussian-rasterization

|

| 6 |

+

url = https://github.com/ashawkey/diff-gaussian-rasterization.git

|

README.md

ADDED

|

@@ -0,0 +1,153 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# TalkingGaussian: Structure-Persistent 3D Talking Head Synthesis via Gaussian Splatting

|

| 2 |

+

|

| 3 |

+

This is the official repository for our ECCV 2024 paper **TalkingGaussian: Structure-Persistent 3D Talking Head Synthesis via Gaussian Splatting**.

|

| 4 |

+

|

| 5 |

+

[Paper](https://arxiv.org/abs/2404.15264) | [Project](https://fictionarry.github.io/TalkingGaussian/) | [Video](https://youtu.be/c5VG7HkDs8I)

|

| 6 |

+

|

| 7 |

+

|

| 8 |

+

|

| 9 |

+

|

| 10 |

+

## Installation

|

| 11 |

+

|

| 12 |

+

Tested on Ubuntu 18.04, CUDA 11.3, PyTorch 1.12.1

|

| 13 |

+

|

| 14 |

+

```

|

| 15 |

+

git clone [email protected]:Fictionarry/TalkingGaussian.git --recursive

|

| 16 |

+

|

| 17 |

+

conda env create --file environment.yml

|

| 18 |

+

conda activate talking_gaussian

|

| 19 |

+

pip install "git+https://github.com/facebookresearch/pytorch3d.git"

|

| 20 |

+

pip install tensorflow-gpu==2.8.0

|

| 21 |

+

```

|

| 22 |

+

|

| 23 |

+

If encounter installation problem from the `diff-gaussian-rasterization` or `gridencoder`, please refer to [gaussian-splatting](https://github.com/graphdeco-inria/gaussian-splatting) and [torch-ngp](https://github.com/ashawkey/torch-ngp).

|

| 24 |

+

|

| 25 |

+

### Preparation

|

| 26 |

+

|

| 27 |

+

- Prepare face-parsing model and the 3DMM model for head pose estimation.

|

| 28 |

+

|

| 29 |

+

```bash

|

| 30 |

+

bash scripts/prepare.sh

|

| 31 |

+

```

|

| 32 |

+

|

| 33 |

+

- Download 3DMM model from [Basel Face Model 2009](https://faces.dmi.unibas.ch/bfm/main.php?nav=1-1-0&id=details):

|

| 34 |

+

|

| 35 |

+

```bash

|

| 36 |

+

# 1. copy 01_MorphableModel.mat to data_util/face_tracking/3DMM/

|

| 37 |

+

# 2. run following

|

| 38 |

+

cd data_utils/face_tracking

|

| 39 |

+

python convert_BFM.py

|

| 40 |

+

```

|

| 41 |

+

|

| 42 |

+

- Prepare the environment for [EasyPortrait](https://github.com/hukenovs/easyportrait):

|

| 43 |

+

|

| 44 |

+

```bash

|

| 45 |

+

# prepare mmcv

|

| 46 |

+

conda activate talking_gaussian

|

| 47 |

+

pip install -U openmim

|

| 48 |

+

mim install mmcv-full==1.7.1

|

| 49 |

+

|

| 50 |

+

# download model weight

|

| 51 |

+

cd data_utils/easyportrait

|

| 52 |

+

wget "https://n-ws-620xz-pd11.s3pd11.sbercloud.ru/b-ws-620xz-pd11-jux/easyportrait/experiments/models/fpn-fp-512.pth"

|

| 53 |

+

```

|

| 54 |

+

|

| 55 |

+

## Usage

|

| 56 |

+

|

| 57 |

+

### Important Notice

|

| 58 |

+

|

| 59 |

+

- This code is provided for research purposes only. The author makes no warranties, express or implied, as to the accuracy, completeness, or fitness for a particular purpose of the code. Use this code at your own risk.

|

| 60 |

+

|

| 61 |

+

- The author explicitly prohibits the use of this code for any malicious or illegal activities. By using this code, you agree to comply with all applicable laws and regulations, and you agree not to use it to harm others or to perform any actions that would be considered unethical or illegal.

|

| 62 |

+

|

| 63 |

+

- The author will not be responsible for any damages, losses, or issues that arise from the use of this code.

|

| 64 |

+

|

| 65 |

+

- Users are encouraged to use this code responsibly and ethically.

|

| 66 |

+

|

| 67 |

+

### Video Dataset

|

| 68 |

+

[Here](https://drive.google.com/drive/folders/1E_8W805lioIznqbkvTQHWWi5IFXUG7Er?usp=drive_link) we provide two video clips used in our experiments, which are captured from YouTube. Please respect the original content creators' rights and comply with YouTube’s copyright policies in the usage.

|

| 69 |

+

|

| 70 |

+

Other used videos can be found from [GeneFace](https://github.com/yerfor/GeneFace) and [AD-NeRF](https://github.com/YudongGuo/AD-NeRF).

|

| 71 |

+

|

| 72 |

+

|

| 73 |

+

### Pre-processing Training Video

|

| 74 |

+

|

| 75 |

+

* Put training video under `data/<ID>/<ID>.mp4`.

|

| 76 |

+

|

| 77 |

+

The video **must be 25FPS, with all frames containing the talking person**.

|

| 78 |

+

The resolution should be about 512x512, and duration about 1-5 min.

|

| 79 |

+

|

| 80 |

+

* Run script to process the video.

|

| 81 |

+

|

| 82 |

+

```bash

|

| 83 |

+

python data_utils/process.py data/<ID>/<ID>.mp4

|

| 84 |

+

```

|

| 85 |

+

|

| 86 |

+

* Obtain Action Units

|

| 87 |

+

|

| 88 |

+

Run `FeatureExtraction` in [OpenFace](https://github.com/TadasBaltrusaitis/OpenFace), rename and move the output CSV file to `data/<ID>/au.csv`.

|

| 89 |

+

|

| 90 |

+

* Generate tooth masks

|

| 91 |

+

|

| 92 |

+

```bash

|

| 93 |

+

export PYTHONPATH=./data_utils/easyportrait

|

| 94 |

+

python ./data_utils/easyportrait/create_teeth_mask.py ./data/<ID>

|

| 95 |

+

```

|

| 96 |

+

|

| 97 |

+

### Audio Pre-process

|

| 98 |

+

|

| 99 |

+

In our paper, we use DeepSpeech features for evaluation.

|

| 100 |

+

|

| 101 |

+

* DeepSpeech

|

| 102 |

+

|

| 103 |

+

```bash

|

| 104 |

+

python data_utils/deepspeech_features/extract_ds_features.py --input data/<name>.wav # saved to data/<name>.npy

|

| 105 |

+

```

|

| 106 |

+

|

| 107 |

+

- HuBERT

|

| 108 |

+

|

| 109 |

+

Similar to ER-NeRF, HuBERT is also available. Recommended for situations if the audio is not in English.

|

| 110 |

+

|

| 111 |

+

Specify `--audio_extractor hubert` when training and testing.

|

| 112 |

+

|

| 113 |

+

```

|

| 114 |

+

python data_utils/hubert.py --wav data/<name>.wav # save to data/<name>_hu.npy

|

| 115 |

+

```

|

| 116 |

+

|

| 117 |

+

### Train

|

| 118 |

+

|

| 119 |

+

```bash

|

| 120 |

+

# If resources are sufficient, partially parallel is available to speed up the training. See the script.

|

| 121 |

+

bash scripts/train_xx.sh data/<ID> output/<project_name> <GPU_ID>

|

| 122 |

+

```

|

| 123 |

+

|

| 124 |

+

### Test

|

| 125 |

+

|

| 126 |

+

```bash

|

| 127 |

+

# saved to output/<project_name>/test/ours_None/renders

|

| 128 |

+

python synthesize_fuse.py -S data/<ID> -M output/<project_name> --eval

|

| 129 |

+

```

|

| 130 |

+

|

| 131 |

+

### Inference with target audio

|

| 132 |

+

|

| 133 |

+

```bash

|

| 134 |

+

python synthesize_fuse.py -S data/<ID> -M output/<project_name> --use_train --audio <preprocessed_audio_feature>.npy

|

| 135 |

+

```

|

| 136 |

+

|

| 137 |

+

## Citation

|

| 138 |

+

|

| 139 |

+

Consider citing as below if you find this repository helpful to your project:

|

| 140 |

+

|

| 141 |

+

```

|

| 142 |

+

@article{li2024talkinggaussian,

|

| 143 |

+

title={TalkingGaussian: Structure-Persistent 3D Talking Head Synthesis via Gaussian Splatting},

|

| 144 |

+

author={Jiahe Li and Jiawei Zhang and Xiao Bai and Jin Zheng and Xin Ning and Jun Zhou and Lin Gu},

|

| 145 |

+

journal={arXiv preprint arXiv:2404.15264},

|

| 146 |

+

year={2024}

|

| 147 |

+

}

|

| 148 |

+

```

|

| 149 |

+

|

| 150 |

+

|

| 151 |

+

## Acknowledgement

|

| 152 |

+

|

| 153 |

+

This code is developed on [gaussian-splatting](https://github.com/graphdeco-inria/gaussian-splatting) with [simple-knn](https://gitlab.inria.fr/bkerbl/simple-knn), and a modified [diff-gaussian-rasterization](https://github.com/ashawkey/diff-gaussian-rasterization). Partial codes are from [RAD-NeRF](https://github.com/ashawkey/RAD-NeRF), [DFRF](https://github.com/sstzal/DFRF), [GeneFace](https://github.com/yerfor/GeneFace), and [AD-NeRF](https://github.com/YudongGuo/AD-NeRF). Teeth mask is from [EasyPortrait](https://github.com/hukenovs/easyportrait). Thanks for these great projects!

|

arguments/__init__.py

ADDED

|

@@ -0,0 +1,118 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

#

|

| 2 |

+

# Copyright (C) 2023, Inria

|

| 3 |

+

# GRAPHDECO research group, https://team.inria.fr/graphdeco

|

| 4 |

+

# All rights reserved.

|

| 5 |

+

#

|

| 6 |

+

# This software is free for non-commercial, research and evaluation use

|

| 7 |

+

# under the terms of the LICENSE.md file.

|

| 8 |

+

#

|

| 9 |

+

# For inquiries contact [email protected]

|

| 10 |

+

#

|

| 11 |

+

|

| 12 |

+

from argparse import ArgumentParser, Namespace

|

| 13 |

+

import sys

|

| 14 |

+

import os

|

| 15 |

+

|

| 16 |

+

class GroupParams:

|

| 17 |

+

pass

|

| 18 |

+

|

| 19 |

+

class ParamGroup:

|

| 20 |

+

def __init__(self, parser: ArgumentParser, name : str, fill_none = False):

|

| 21 |

+

group = parser.add_argument_group(name)

|

| 22 |

+

for key, value in vars(self).items():

|

| 23 |

+

shorthand = False

|

| 24 |

+

if key.startswith("_"):

|

| 25 |

+

shorthand = True

|

| 26 |

+

key = key[1:]

|

| 27 |

+

t = type(value)

|

| 28 |

+

value = value if not fill_none else None

|

| 29 |

+

if shorthand:

|

| 30 |

+

if t == bool:

|

| 31 |

+

group.add_argument("--" + key, ("-" + key[0:1]), ("-" + key[0:1].upper()), default=value, action="store_true")

|

| 32 |

+

else:

|

| 33 |

+

group.add_argument("--" + key, ("-" + key[0:1]), ("-" + key[0:1].upper()), default=value, type=t)

|

| 34 |

+

else:

|

| 35 |

+

if t == bool:

|

| 36 |

+

group.add_argument("--" + key, default=value, action="store_true")

|

| 37 |

+

else:

|

| 38 |

+

group.add_argument("--" + key, default=value, type=t)

|

| 39 |

+

|

| 40 |

+

def extract(self, args):

|

| 41 |

+

group = GroupParams()

|

| 42 |

+

for arg in vars(args).items():

|

| 43 |

+

if arg[0] in vars(self) or ("_" + arg[0]) in vars(self):

|

| 44 |

+

setattr(group, arg[0], arg[1])

|

| 45 |

+

return group

|

| 46 |

+

|

| 47 |

+

class ModelParams(ParamGroup):

|

| 48 |

+

def __init__(self, parser, sentinel=False):

|

| 49 |

+

self.sh_degree = 2

|

| 50 |

+

self._source_path = ""

|

| 51 |

+

self._model_path = ""

|

| 52 |

+

self._images = "images"

|

| 53 |

+

self._resolution = -1

|

| 54 |

+

self._white_background = False

|

| 55 |

+

self.data_device = "cpu"

|

| 56 |

+

self.eval = False

|

| 57 |

+

self.audio = ""

|

| 58 |

+

self.init_num = 10_000

|

| 59 |

+

self.audio_extractor = "deepspeech"

|

| 60 |

+

super().__init__(parser, "Loading Parameters", sentinel)

|

| 61 |

+

|

| 62 |

+

def extract(self, args):

|

| 63 |

+

g = super().extract(args)

|

| 64 |

+

g.source_path = os.path.abspath(g.source_path)

|

| 65 |

+

|

| 66 |

+

return g

|

| 67 |

+

|

| 68 |

+

class PipelineParams(ParamGroup):

|

| 69 |

+

def __init__(self, parser):

|

| 70 |

+

self.convert_SHs_python = False

|

| 71 |

+

self.compute_cov3D_python = False

|

| 72 |

+

self.debug = False

|

| 73 |

+

super().__init__(parser, "Pipeline Parameters")

|

| 74 |

+

|

| 75 |

+

class OptimizationParams(ParamGroup):

|

| 76 |

+

def __init__(self, parser):

|

| 77 |

+

self.iterations = 50_000

|

| 78 |

+

self.position_lr_init = 0.00016

|

| 79 |

+

self.position_lr_final = 0.0000016

|

| 80 |

+

self.position_lr_delay_mult = 0.01

|

| 81 |

+

self.position_lr_max_steps = 45_000

|

| 82 |

+

self.feature_lr = 0.0025

|

| 83 |

+

self.opacity_lr = 0.05

|

| 84 |

+

self.scaling_lr = 0.003

|

| 85 |

+

self.rotation_lr = 0.001

|

| 86 |

+

self.percent_dense = 0.005

|

| 87 |

+

self.lambda_dssim = 0.2

|

| 88 |

+

self.densification_interval = 100

|

| 89 |

+

self.opacity_reset_interval = 3000

|

| 90 |

+

self.densify_from_iter = 500

|

| 91 |

+

|

| 92 |

+

|

| 93 |

+

self.densify_until_iter = 45_000

|

| 94 |

+

self.densify_grad_threshold = 0.0002

|

| 95 |

+

self.random_background = False

|

| 96 |

+

super().__init__(parser, "Optimization Parameters")

|

| 97 |

+

|

| 98 |

+

def get_combined_args(parser : ArgumentParser):

|

| 99 |

+

cmdlne_string = sys.argv[1:]

|

| 100 |

+

cfgfile_string = "Namespace()"

|

| 101 |

+

args_cmdline = parser.parse_args(cmdlne_string)

|

| 102 |

+

|

| 103 |

+

try:

|

| 104 |

+

cfgfilepath = os.path.join(args_cmdline.model_path, "cfg_args")

|

| 105 |

+

print("Looking for config file in", cfgfilepath)

|

| 106 |

+

with open(cfgfilepath) as cfg_file:

|

| 107 |

+

print("Config file found: {}".format(cfgfilepath))

|

| 108 |

+

cfgfile_string = cfg_file.read()

|

| 109 |

+

except TypeError:

|

| 110 |

+

print("Config file not found at")

|

| 111 |

+

pass

|

| 112 |

+

args_cfgfile = eval(cfgfile_string)

|

| 113 |

+

|

| 114 |

+

merged_dict = vars(args_cfgfile).copy()

|

| 115 |

+

for k,v in vars(args_cmdline).items():

|

| 116 |

+

if v != None:

|

| 117 |

+

merged_dict[k] = v

|

| 118 |

+

return Namespace(**merged_dict)

|

assets/main.png

ADDED

|

data/.gitkeep

ADDED

|

File without changes

|

data_utils/deepspeech_features/README.md

ADDED

|

@@ -0,0 +1,20 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Routines for DeepSpeech features processing

|

| 2 |

+

Several routines for [DeepSpeech](https://github.com/mozilla/DeepSpeech) features processing, like speech features generation for [VOCA](https://github.com/TimoBolkart/voca) model.

|

| 3 |

+

|

| 4 |

+

## Installation

|

| 5 |

+

|

| 6 |

+

```

|

| 7 |

+

pip3 install -r requirements.txt

|

| 8 |

+

```

|

| 9 |

+

|

| 10 |

+

## Usage

|

| 11 |

+

|

| 12 |

+

Generate wav files:

|

| 13 |

+

```

|

| 14 |

+

python3 extract_wav.py --in-video=<you_data_dir>

|

| 15 |

+

```

|

| 16 |

+

|

| 17 |

+

Generate files with DeepSpeech features:

|

| 18 |

+

```

|

| 19 |

+

python3 extract_ds_features.py --input=<you_data_dir>

|

| 20 |

+

```

|

data_utils/deepspeech_features/deepspeech_features.py

ADDED

|

@@ -0,0 +1,274 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

"""

|

| 2 |

+

DeepSpeech features processing routines.

|

| 3 |

+

NB: Based on VOCA code. See the corresponding license restrictions.

|

| 4 |

+

"""

|

| 5 |

+

|

| 6 |

+

__all__ = ['conv_audios_to_deepspeech']

|

| 7 |

+

|

| 8 |

+

import numpy as np

|

| 9 |

+

import warnings

|

| 10 |

+

import resampy

|

| 11 |

+

from scipy.io import wavfile

|

| 12 |

+

from python_speech_features import mfcc

|

| 13 |

+

import tensorflow.compat.v1 as tf

|

| 14 |

+

tf.disable_v2_behavior()

|

| 15 |

+

|

| 16 |

+

def conv_audios_to_deepspeech(audios,

|

| 17 |

+

out_files,

|

| 18 |

+

num_frames_info,

|

| 19 |

+

deepspeech_pb_path,

|

| 20 |

+

audio_window_size=1,

|

| 21 |

+

audio_window_stride=1):

|

| 22 |

+

"""

|

| 23 |

+

Convert list of audio files into files with DeepSpeech features.

|

| 24 |

+

|

| 25 |

+

Parameters

|

| 26 |

+

----------

|

| 27 |

+

audios : list of str or list of None

|

| 28 |

+

Paths to input audio files.

|

| 29 |

+

out_files : list of str

|

| 30 |

+

Paths to output files with DeepSpeech features.

|

| 31 |

+

num_frames_info : list of int

|

| 32 |

+

List of numbers of frames.

|

| 33 |

+

deepspeech_pb_path : str

|

| 34 |

+

Path to DeepSpeech 0.1.0 frozen model.

|

| 35 |

+

audio_window_size : int, default 16

|

| 36 |

+

Audio window size.

|

| 37 |

+

audio_window_stride : int, default 1

|

| 38 |

+

Audio window stride.

|

| 39 |

+

"""

|

| 40 |

+

graph, logits_ph, input_node_ph, input_lengths_ph = prepare_deepspeech_net(

|

| 41 |

+

deepspeech_pb_path)

|

| 42 |

+

|

| 43 |

+

with tf.compat.v1.Session(graph=graph) as sess:

|

| 44 |

+

for audio_file_path, out_file_path, num_frames in zip(audios, out_files, num_frames_info):

|

| 45 |

+

print(audio_file_path)

|

| 46 |

+

print(out_file_path)

|

| 47 |

+

audio_sample_rate, audio = wavfile.read(audio_file_path)

|

| 48 |

+

if audio.ndim != 1:

|

| 49 |

+

warnings.warn(

|

| 50 |

+

"Audio has multiple channels, the first channel is used")

|

| 51 |

+

audio = audio[:, 0]

|

| 52 |

+

ds_features = pure_conv_audio_to_deepspeech(

|

| 53 |

+

audio=audio,

|

| 54 |

+

audio_sample_rate=audio_sample_rate,

|

| 55 |

+

audio_window_size=audio_window_size,

|

| 56 |

+

audio_window_stride=audio_window_stride,

|

| 57 |

+

num_frames=num_frames,

|

| 58 |

+

net_fn=lambda x: sess.run(

|

| 59 |

+

logits_ph,

|

| 60 |

+

feed_dict={

|

| 61 |

+

input_node_ph: x[np.newaxis, ...],

|

| 62 |

+

input_lengths_ph: [x.shape[0]]}))

|

| 63 |

+

|

| 64 |

+

net_output = ds_features.reshape(-1, 29)

|

| 65 |

+

win_size = 16

|

| 66 |

+

zero_pad = np.zeros((int(win_size / 2), net_output.shape[1]))

|

| 67 |

+

net_output = np.concatenate(

|

| 68 |

+

(zero_pad, net_output, zero_pad), axis=0)

|

| 69 |

+

windows = []

|

| 70 |

+

for window_index in range(0, net_output.shape[0] - win_size, 2):

|

| 71 |

+

windows.append(

|

| 72 |

+

net_output[window_index:window_index + win_size])

|

| 73 |

+

print(np.array(windows).shape)

|

| 74 |

+

np.save(out_file_path, np.array(windows))

|

| 75 |

+

|

| 76 |

+

|

| 77 |

+

def prepare_deepspeech_net(deepspeech_pb_path):

|

| 78 |

+

"""

|

| 79 |

+

Load and prepare DeepSpeech network.

|

| 80 |

+

|

| 81 |

+

Parameters

|

| 82 |

+

----------

|

| 83 |

+

deepspeech_pb_path : str

|

| 84 |

+

Path to DeepSpeech 0.1.0 frozen model.

|

| 85 |

+

|

| 86 |

+

Returns

|

| 87 |

+

-------

|

| 88 |

+

graph : obj

|

| 89 |

+

ThensorFlow graph.

|

| 90 |

+

logits_ph : obj

|

| 91 |

+

ThensorFlow placeholder for `logits`.

|

| 92 |

+

input_node_ph : obj

|

| 93 |

+

ThensorFlow placeholder for `input_node`.

|

| 94 |

+

input_lengths_ph : obj

|

| 95 |

+

ThensorFlow placeholder for `input_lengths`.

|

| 96 |

+

"""

|

| 97 |

+

# Load graph and place_holders:

|

| 98 |

+

with tf.io.gfile.GFile(deepspeech_pb_path, "rb") as f:

|

| 99 |

+

graph_def = tf.compat.v1.GraphDef()

|

| 100 |

+

graph_def.ParseFromString(f.read())

|

| 101 |

+

|

| 102 |

+

graph = tf.compat.v1.get_default_graph()

|

| 103 |

+

tf.import_graph_def(graph_def, name="deepspeech")

|

| 104 |

+

logits_ph = graph.get_tensor_by_name("deepspeech/logits:0")

|

| 105 |

+

input_node_ph = graph.get_tensor_by_name("deepspeech/input_node:0")

|

| 106 |

+

input_lengths_ph = graph.get_tensor_by_name("deepspeech/input_lengths:0")

|

| 107 |

+

|

| 108 |

+

return graph, logits_ph, input_node_ph, input_lengths_ph

|

| 109 |

+

|

| 110 |

+

|

| 111 |

+

def pure_conv_audio_to_deepspeech(audio,

|

| 112 |

+

audio_sample_rate,

|

| 113 |

+

audio_window_size,

|

| 114 |

+

audio_window_stride,

|

| 115 |

+

num_frames,

|

| 116 |

+

net_fn):

|

| 117 |

+

"""

|

| 118 |

+

Core routine for converting audion into DeepSpeech features.

|

| 119 |

+

|

| 120 |

+

Parameters

|

| 121 |

+

----------

|

| 122 |

+

audio : np.array

|

| 123 |

+

Audio data.

|

| 124 |

+

audio_sample_rate : int

|

| 125 |

+

Audio sample rate.

|

| 126 |

+

audio_window_size : int

|

| 127 |

+

Audio window size.

|

| 128 |

+

audio_window_stride : int

|

| 129 |

+

Audio window stride.

|

| 130 |

+

num_frames : int or None

|

| 131 |

+

Numbers of frames.

|

| 132 |

+

net_fn : func

|

| 133 |

+

Function for DeepSpeech model call.

|

| 134 |

+

|

| 135 |

+

Returns

|

| 136 |

+

-------

|

| 137 |

+

np.array

|

| 138 |

+

DeepSpeech features.

|

| 139 |

+

"""

|

| 140 |

+

target_sample_rate = 16000

|

| 141 |

+

if audio_sample_rate != target_sample_rate:

|

| 142 |

+

resampled_audio = resampy.resample(

|

| 143 |

+

x=audio.astype(np.float),

|

| 144 |

+

sr_orig=audio_sample_rate,

|

| 145 |

+

sr_new=target_sample_rate)

|

| 146 |

+

else:

|

| 147 |

+

resampled_audio = audio.astype(np.float32)

|

| 148 |

+

input_vector = conv_audio_to_deepspeech_input_vector(

|

| 149 |

+

audio=resampled_audio.astype(np.int16),

|

| 150 |

+

sample_rate=target_sample_rate,

|

| 151 |

+

num_cepstrum=26,

|

| 152 |

+

num_context=9)

|

| 153 |

+

|

| 154 |

+

network_output = net_fn(input_vector)

|

| 155 |

+

# print(network_output.shape)

|

| 156 |

+

|

| 157 |

+

deepspeech_fps = 50

|

| 158 |

+

video_fps = 50 # Change this option if video fps is different

|

| 159 |

+

audio_len_s = float(audio.shape[0]) / audio_sample_rate

|

| 160 |

+

if num_frames is None:

|

| 161 |

+

num_frames = int(round(audio_len_s * video_fps))

|

| 162 |

+

else:

|

| 163 |

+

video_fps = num_frames / audio_len_s

|

| 164 |

+

network_output = interpolate_features(

|

| 165 |

+

features=network_output[:, 0],

|

| 166 |

+

input_rate=deepspeech_fps,

|

| 167 |

+

output_rate=video_fps,

|

| 168 |

+

output_len=num_frames)

|

| 169 |

+

|

| 170 |

+

# Make windows:

|

| 171 |

+

zero_pad = np.zeros((int(audio_window_size / 2), network_output.shape[1]))

|

| 172 |

+

network_output = np.concatenate(

|

| 173 |

+

(zero_pad, network_output, zero_pad), axis=0)

|

| 174 |

+

windows = []

|

| 175 |

+

for window_index in range(0, network_output.shape[0] - audio_window_size, audio_window_stride):

|

| 176 |

+

windows.append(

|

| 177 |

+

network_output[window_index:window_index + audio_window_size])

|

| 178 |

+

|

| 179 |

+

return np.array(windows)

|

| 180 |

+

|

| 181 |

+

|

| 182 |

+

def conv_audio_to_deepspeech_input_vector(audio,

|

| 183 |

+

sample_rate,

|

| 184 |

+

num_cepstrum,

|

| 185 |

+

num_context):

|

| 186 |

+

"""

|

| 187 |

+

Convert audio raw data into DeepSpeech input vector.

|

| 188 |

+

|

| 189 |

+

Parameters

|

| 190 |

+

----------

|

| 191 |

+

audio : np.array

|

| 192 |

+

Audio data.

|

| 193 |

+

audio_sample_rate : int

|

| 194 |

+

Audio sample rate.

|

| 195 |

+

num_cepstrum : int

|

| 196 |

+

Number of cepstrum.

|

| 197 |

+

num_context : int

|

| 198 |

+

Number of context.

|

| 199 |

+

|

| 200 |

+

Returns

|

| 201 |

+

-------

|

| 202 |

+

np.array

|

| 203 |

+

DeepSpeech input vector.

|

| 204 |

+

"""

|

| 205 |

+

# Get mfcc coefficients:

|

| 206 |

+

features = mfcc(

|

| 207 |

+

signal=audio,

|

| 208 |

+

samplerate=sample_rate,

|

| 209 |

+

numcep=num_cepstrum)

|

| 210 |

+

|

| 211 |

+

# We only keep every second feature (BiRNN stride = 2):

|

| 212 |

+

features = features[::2]

|

| 213 |

+

|

| 214 |

+

# One stride per time step in the input:

|

| 215 |

+

num_strides = len(features)

|

| 216 |

+

|

| 217 |

+

# Add empty initial and final contexts:

|

| 218 |

+

empty_context = np.zeros((num_context, num_cepstrum), dtype=features.dtype)

|

| 219 |

+

features = np.concatenate((empty_context, features, empty_context))

|

| 220 |

+

|

| 221 |

+

# Create a view into the array with overlapping strides of size

|

| 222 |

+

# numcontext (past) + 1 (present) + numcontext (future):

|

| 223 |

+

window_size = 2 * num_context + 1

|

| 224 |

+

train_inputs = np.lib.stride_tricks.as_strided(

|

| 225 |

+

features,

|

| 226 |

+

shape=(num_strides, window_size, num_cepstrum),

|

| 227 |

+

strides=(features.strides[0],

|

| 228 |

+

features.strides[0], features.strides[1]),

|

| 229 |

+

writeable=False)

|

| 230 |

+

|

| 231 |

+

# Flatten the second and third dimensions:

|

| 232 |

+

train_inputs = np.reshape(train_inputs, [num_strides, -1])

|

| 233 |

+

|

| 234 |

+

train_inputs = np.copy(train_inputs)

|

| 235 |

+

train_inputs = (train_inputs - np.mean(train_inputs)) / \

|

| 236 |

+

np.std(train_inputs)

|

| 237 |

+

|

| 238 |

+

return train_inputs

|

| 239 |

+

|

| 240 |

+

|

| 241 |

+

def interpolate_features(features,

|

| 242 |

+

input_rate,

|

| 243 |

+

output_rate,

|

| 244 |

+

output_len):

|

| 245 |

+

"""

|

| 246 |

+

Interpolate DeepSpeech features.

|

| 247 |

+

|

| 248 |

+

Parameters

|

| 249 |

+

----------

|

| 250 |

+

features : np.array

|

| 251 |

+

DeepSpeech features.

|

| 252 |

+

input_rate : int

|

| 253 |

+

input rate (FPS).

|

| 254 |

+

output_rate : int

|

| 255 |

+

Output rate (FPS).

|

| 256 |

+

output_len : int

|

| 257 |

+

Output data length.

|

| 258 |

+

|

| 259 |

+

Returns

|

| 260 |

+

-------

|

| 261 |

+

np.array

|

| 262 |

+

Interpolated data.

|

| 263 |

+

"""

|

| 264 |

+

input_len = features.shape[0]

|

| 265 |

+

num_features = features.shape[1]

|

| 266 |

+

input_timestamps = np.arange(input_len) / float(input_rate)

|

| 267 |

+

output_timestamps = np.arange(output_len) / float(output_rate)

|

| 268 |

+

output_features = np.zeros((output_len, num_features))

|

| 269 |

+

for feature_idx in range(num_features):

|

| 270 |

+

output_features[:, feature_idx] = np.interp(

|

| 271 |

+

x=output_timestamps,

|

| 272 |

+

xp=input_timestamps,

|

| 273 |

+

fp=features[:, feature_idx])

|

| 274 |

+

return output_features

|

data_utils/deepspeech_features/deepspeech_store.py

ADDED

|

@@ -0,0 +1,172 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

"""

|

| 2 |

+

Routines for loading DeepSpeech model.

|

| 3 |

+

"""

|

| 4 |

+

|

| 5 |

+

__all__ = ['get_deepspeech_model_file']

|

| 6 |

+

|

| 7 |

+

import os

|

| 8 |

+

import zipfile

|

| 9 |

+

import logging

|

| 10 |

+

import hashlib

|

| 11 |

+

|

| 12 |

+

|

| 13 |

+

deepspeech_features_repo_url = 'https://github.com/osmr/deepspeech_features'

|

| 14 |

+

|

| 15 |

+

|

| 16 |

+

def get_deepspeech_model_file(local_model_store_dir_path=os.path.join("~", ".tensorflow", "models")):

|

| 17 |

+

"""

|

| 18 |

+

Return location for the pretrained on local file system. This function will download from online model zoo when

|

| 19 |

+

model cannot be found or has mismatch. The root directory will be created if it doesn't exist.

|

| 20 |

+

|

| 21 |

+

Parameters

|

| 22 |

+

----------

|

| 23 |

+

local_model_store_dir_path : str, default $TENSORFLOW_HOME/models

|

| 24 |

+

Location for keeping the model parameters.

|

| 25 |

+

|

| 26 |

+

Returns

|

| 27 |

+

-------

|

| 28 |

+

file_path

|

| 29 |

+

Path to the requested pretrained model file.

|

| 30 |

+

"""

|

| 31 |

+

sha1_hash = "b90017e816572ddce84f5843f1fa21e6a377975e"

|

| 32 |

+

file_name = "deepspeech-0_1_0-b90017e8.pb"

|

| 33 |

+

local_model_store_dir_path = os.path.expanduser(local_model_store_dir_path)

|

| 34 |

+

file_path = os.path.join(local_model_store_dir_path, file_name)

|

| 35 |

+

if os.path.exists(file_path):

|

| 36 |

+

if _check_sha1(file_path, sha1_hash):

|

| 37 |

+

return file_path

|

| 38 |

+

else:

|

| 39 |

+

logging.warning("Mismatch in the content of model file detected. Downloading again.")

|

| 40 |

+

else:

|

| 41 |

+

logging.info("Model file not found. Downloading to {}.".format(file_path))

|

| 42 |

+

|

| 43 |

+

if not os.path.exists(local_model_store_dir_path):

|

| 44 |

+

os.makedirs(local_model_store_dir_path)

|

| 45 |

+

|

| 46 |

+

zip_file_path = file_path + ".zip"

|

| 47 |

+

_download(

|

| 48 |

+

url="{repo_url}/releases/download/{repo_release_tag}/{file_name}.zip".format(

|

| 49 |

+

repo_url=deepspeech_features_repo_url,

|

| 50 |

+

repo_release_tag="v0.0.1",

|

| 51 |

+

file_name=file_name),

|

| 52 |

+

path=zip_file_path,

|

| 53 |

+

overwrite=True)

|

| 54 |

+

with zipfile.ZipFile(zip_file_path) as zf:

|

| 55 |

+

zf.extractall(local_model_store_dir_path)

|

| 56 |

+

os.remove(zip_file_path)

|

| 57 |

+

|

| 58 |

+

if _check_sha1(file_path, sha1_hash):

|

| 59 |

+

return file_path

|

| 60 |

+

else:

|

| 61 |

+

raise ValueError("Downloaded file has different hash. Please try again.")

|

| 62 |

+

|

| 63 |

+

|

| 64 |

+

def _download(url, path=None, overwrite=False, sha1_hash=None, retries=5, verify_ssl=True):

|

| 65 |

+

"""

|

| 66 |

+

Download an given URL

|

| 67 |

+

|

| 68 |

+

Parameters

|

| 69 |

+

----------

|

| 70 |

+

url : str

|

| 71 |

+

URL to download

|

| 72 |

+

path : str, optional

|

| 73 |

+

Destination path to store downloaded file. By default stores to the

|

| 74 |

+

current directory with same name as in url.

|

| 75 |

+

overwrite : bool, optional

|

| 76 |

+

Whether to overwrite destination file if already exists.

|

| 77 |

+

sha1_hash : str, optional

|

| 78 |

+

Expected sha1 hash in hexadecimal digits. Will ignore existing file when hash is specified

|

| 79 |

+

but doesn't match.

|

| 80 |

+

retries : integer, default 5

|

| 81 |

+

The number of times to attempt the download in case of failure or non 200 return codes

|

| 82 |

+

verify_ssl : bool, default True

|

| 83 |

+

Verify SSL certificates.

|

| 84 |

+

|

| 85 |

+

Returns

|

| 86 |

+

-------

|

| 87 |

+

str

|

| 88 |

+

The file path of the downloaded file.

|

| 89 |

+

"""

|

| 90 |

+

import warnings

|

| 91 |

+

try:

|

| 92 |

+

import requests

|

| 93 |

+

except ImportError:

|

| 94 |

+

class requests_failed_to_import(object):

|

| 95 |

+

pass

|

| 96 |

+

requests = requests_failed_to_import

|

| 97 |

+

|

| 98 |

+

if path is None:

|

| 99 |

+

fname = url.split("/")[-1]

|

| 100 |

+

# Empty filenames are invalid

|

| 101 |

+

assert fname, "Can't construct file-name from this URL. Please set the `path` option manually."

|

| 102 |

+

else:

|

| 103 |

+

path = os.path.expanduser(path)

|

| 104 |

+

if os.path.isdir(path):

|

| 105 |

+

fname = os.path.join(path, url.split("/")[-1])

|

| 106 |

+

else:

|

| 107 |

+

fname = path

|

| 108 |

+

assert retries >= 0, "Number of retries should be at least 0"

|

| 109 |

+

|

| 110 |

+

if not verify_ssl:

|

| 111 |

+

warnings.warn(

|

| 112 |

+

"Unverified HTTPS request is being made (verify_ssl=False). "

|

| 113 |

+

"Adding certificate verification is strongly advised.")

|

| 114 |

+

|

| 115 |

+

if overwrite or not os.path.exists(fname) or (sha1_hash and not _check_sha1(fname, sha1_hash)):

|

| 116 |

+

dirname = os.path.dirname(os.path.abspath(os.path.expanduser(fname)))

|

| 117 |

+

if not os.path.exists(dirname):

|

| 118 |

+

os.makedirs(dirname)

|

| 119 |

+

while retries + 1 > 0:

|

| 120 |

+

# Disable pyling too broad Exception

|

| 121 |

+

# pylint: disable=W0703

|

| 122 |

+

try:

|

| 123 |

+

print("Downloading {} from {}...".format(fname, url))

|

| 124 |

+

r = requests.get(url, stream=True, verify=verify_ssl)

|

| 125 |

+

if r.status_code != 200:

|

| 126 |

+

raise RuntimeError("Failed downloading url {}".format(url))

|

| 127 |

+

with open(fname, "wb") as f:

|

| 128 |

+

for chunk in r.iter_content(chunk_size=1024):

|

| 129 |

+

if chunk: # filter out keep-alive new chunks

|

| 130 |

+

f.write(chunk)

|

| 131 |

+

if sha1_hash and not _check_sha1(fname, sha1_hash):

|

| 132 |

+

raise UserWarning("File {} is downloaded but the content hash does not match."

|

| 133 |

+

" The repo may be outdated or download may be incomplete. "

|

| 134 |

+

"If the `repo_url` is overridden, consider switching to "

|

| 135 |

+

"the default repo.".format(fname))

|

| 136 |

+

break

|

| 137 |

+

except Exception as e:

|

| 138 |

+

retries -= 1

|

| 139 |

+

if retries <= 0:

|

| 140 |

+

raise e

|

| 141 |

+

else:

|

| 142 |

+

print("download failed, retrying, {} attempt{} left"

|

| 143 |

+

.format(retries, "s" if retries > 1 else ""))

|

| 144 |

+

|

| 145 |

+

return fname

|

| 146 |

+

|

| 147 |

+

|

| 148 |

+

def _check_sha1(filename, sha1_hash):

|

| 149 |

+

"""

|

| 150 |

+

Check whether the sha1 hash of the file content matches the expected hash.

|

| 151 |

+

|

| 152 |

+

Parameters

|

| 153 |

+

----------

|

| 154 |

+

filename : str

|

| 155 |

+

Path to the file.

|

| 156 |

+

sha1_hash : str

|

| 157 |

+

Expected sha1 hash in hexadecimal digits.

|

| 158 |

+

|

| 159 |

+

Returns

|

| 160 |

+

-------

|

| 161 |

+

bool

|

| 162 |

+

Whether the file content matches the expected hash.

|

| 163 |

+

"""

|

| 164 |

+

sha1 = hashlib.sha1()

|

| 165 |

+

with open(filename, "rb") as f:

|

| 166 |

+

while True:

|

| 167 |

+

data = f.read(1048576)

|

| 168 |

+

if not data:

|

| 169 |

+

break

|

| 170 |

+

sha1.update(data)

|

| 171 |

+

|

| 172 |

+

return sha1.hexdigest() == sha1_hash

|

data_utils/deepspeech_features/extract_ds_features.py

ADDED

|

@@ -0,0 +1,130 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

"""

|

| 2 |

+

Script for extracting DeepSpeech features from audio file.

|

| 3 |

+

"""

|

| 4 |

+

|

| 5 |

+

import os

|

| 6 |

+

import argparse

|

| 7 |

+

import numpy as np

|

| 8 |

+

import pandas as pd

|

| 9 |

+

from deepspeech_store import get_deepspeech_model_file

|

| 10 |

+

from deepspeech_features import conv_audios_to_deepspeech

|

| 11 |

+

|

| 12 |

+

|

| 13 |

+

def parse_args():

|

| 14 |

+

"""

|

| 15 |

+

Create python script parameters.

|

| 16 |

+

Returns

|

| 17 |

+

-------

|

| 18 |

+

ArgumentParser

|

| 19 |

+

Resulted args.

|

| 20 |

+

"""

|

| 21 |

+

parser = argparse.ArgumentParser(

|

| 22 |

+