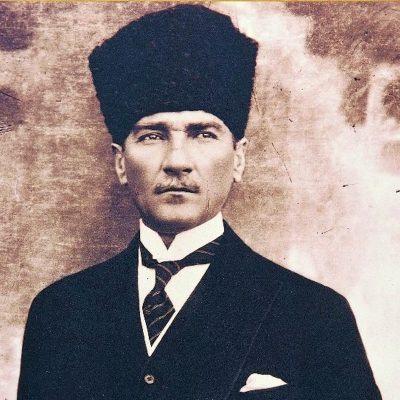

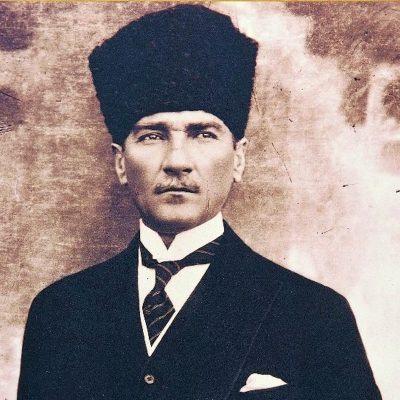

Who is in this image?

The image shows Mustafa Kemal Atatürk, the founder and first President of the Republic of Turkey.

# 🚀 Next 4B (s330)

### *Türkiye’s First Vision-Language Model — Efficient, Multimodal, and Reasoning-Focused*

[](https://opensource.org/licenses/MIT)

[]()

[](https://huggingface.co/Lamapi/next-4b)

---

## 📖 Overview

**Next 4B** is a **4-billion parameter multimodal Vision-Language Model (VLM)** based on **Gemma 3**, fine-tuned to handle **both text and images** efficiently. It is **Türkiye’s first open-source vision-language model**, designed for:

* Understanding and generating **text and image descriptions**.

* Efficient reasoning and context-aware multimodal outputs.

* Turkish support with multilingual capabilities.

* Low-resource deployment using **8-bit quantization** for consumer-grade GPUs.

This model is ideal for **researchers, developers, and organizations** who need a **high-performance multimodal AI** capable of **visual understanding, reasoning, and creative generation**.

---

# Our Next 1B and Next 4B models are leading to all of the tiny models in benchmarks.

# 🚀 Next 4B (s330)

### *Türkiye’s First Vision-Language Model — Efficient, Multimodal, and Reasoning-Focused*

[](https://opensource.org/licenses/MIT)

[]()

[](https://huggingface.co/Lamapi/next-4b)

---

## 📖 Overview

**Next 4B** is a **4-billion parameter multimodal Vision-Language Model (VLM)** based on **Gemma 3**, fine-tuned to handle **both text and images** efficiently. It is **Türkiye’s first open-source vision-language model**, designed for:

* Understanding and generating **text and image descriptions**.

* Efficient reasoning and context-aware multimodal outputs.

* Turkish support with multilingual capabilities.

* Low-resource deployment using **8-bit quantization** for consumer-grade GPUs.

This model is ideal for **researchers, developers, and organizations** who need a **high-performance multimodal AI** capable of **visual understanding, reasoning, and creative generation**.

---

# Our Next 1B and Next 4B models are leading to all of the tiny models in benchmarks.

| Model | MMLU (5-shot) % | MMLU-Pro % | GSM8K % | MATH % |

|---|---|---|---|---|

| Next 4B preview | 84.6 | 66.9 | 82.7 | 70.5 |

| Next 1B | 87.3 | 69.2 | 90.5 | 70.1 |

| Qwen 3 0.6B | 52.81 | 37.6 | 60.7 | 20.5 |

| Llama 3.2 1B | 49.3 | 44.4 | 11.9 | 30.6 |

| Model | MMLU (5-shot) % | MMLU-Pro % | GSM8K % | MATH % |

|---|---|---|---|---|

| Next 14B (Thinking) | 94.6 | 93.2 | 98.8 | 92.7 |

| Next 12B | 92.7 | 84.4 | 95.3 | 87.2 |

| GPT-5 | 92.5 | 87.0 | 98.4 | 96.0 |

| Claude Opus 4.1 (Thinking) | ~92.0 | 87.8 | 84.7 | 95.4 |