This model contains the full source of the original "Qwen3-VL-30B-A3B-Thinking" coupled with the Brainstorm 20x adapter (by DavidAU), with augmented GGUFs using a MAX 16-bit output tensor.

This is a MIXTURE of EXPERTS model ("MOE") with 128 experts, 8 activated.
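To make "128 experts, 8 activated" concrete, here is a minimal sketch of generic top-k expert routing for a single token. It is an illustration only: the function name `route_token`, the random router scores, and the choice to softmax over the selected experts are assumptions, not the actual Qwen3-VL router code.

```python
import math
import random

NUM_EXPERTS = 128   # total experts, per the model card
ACTIVE_EXPERTS = 8  # experts activated per token, per the model card


def route_token(router_logits, k=ACTIVE_EXPERTS):
    """Pick the k highest-scoring experts and softmax-normalize their weights.

    Generic top-k gating; the real model's router may normalize differently.
    """
    # Indices of the k largest router scores, best first.
    top = sorted(range(len(router_logits)),
                 key=lambda i: router_logits[i], reverse=True)[:k]
    # Numerically stable softmax over the selected scores only.
    peak = max(router_logits[i] for i in top)
    exps = [math.exp(router_logits[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


# Hypothetical router scores for one token (random stand-ins).
random.seed(0)
logits = [random.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]

selected = route_token(logits)
print(f"{len(selected)} of {NUM_EXPERTS} experts used for this token")
```

Calling `route_token(logits, k=4)` or `k=6` mimics running with fewer active experts: fewer experts do work per token, and the mixing weights are renormalized over the smaller set.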

FULL example generation with vision below.

This is the full image/text/multi-modal model, AND it is fully tested.

Brainstorm 20x will augment text generation as well as "image" description, and in many cases it also raises the raw benchmarks of the model (which are already very impressive, see below).

Brainstorm also augments "thinking".

Adding the Brainstorm adapter has increased the model from 30B to 42B parameters (now with 67 layers and 807 tensors).

GGUFs are further enhanced with the NEO IMATRIX dataset, and the MAX output tensor (which makes up 10-20% of model output quality) is set at 16 bits.

Note that Q8_0 will only have the MAX output tensor at 16 bits, as Imatrix has no effect on this quant.

IMPORTANT: Looping?

This model tends to loop sometimes. If this happens, set temp to "1" (or higher) and rep pen to "1.1". The original model has the same issue.

If you have the option, set "presence penalty" to 2.0 [as per Qwen's own suggestions].
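As a rough sketch, the anti-looping values above could be collected into a request body for a local OpenAI-style chat endpoint. The key names `repeat_penalty` and `presence_penalty`, the helper `anti_loop_settings`, and the model identifier `local-model` are assumptions for illustration; check the names your app or server actually accepts.

```python
import json


def anti_loop_settings(has_presence_penalty=True):
    """Sampler values suggested above for when the model starts looping."""
    settings = {
        "temperature": 1.0,     # temp "1" (or higher)
        "repeat_penalty": 1.1,  # rep pen "1.1" (key name is an assumption)
    }
    if has_presence_penalty:
        # Only if your app exposes this option.
        settings["presence_penalty"] = 2.0
    return settings


payload = {
    "model": "local-model",  # hypothetical identifier
    "messages": [
        {"role": "user", "content": "Tell me a horror story."},
    ],
    **anti_loop_settings(),
}

print(json.dumps(payload, indent=2))
```

Nothing is sent anywhere here; the block only builds and prints the settings so they can be copied into whichever client you use.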

NOTE:

Only TWO quants are uploaded at this time, plus the mmproj at Q8_0.

These are Q4_K_S, and a second one, "OT16", with the output tensor at 16 bits.

The 16-bit Output Tensor ("OT") increases overall model quality.

Testing conducted at 4, 6 and 8 active experts.

---

Example Generation (LM Studio):

Temp 0.7, rep pen 1.05, quant Q4_K_S (NOT the "OT16" version), 8 experts, using VISION mmproj Q8_0.

Q4_K_S is a low to mid quality quant; expect better results with higher quants.

---

Tell me a horror story using this image as a base for the story.

[[[thinking start]]]

Got it, let's create a horror story based on this eerie gate image. First, analyze the elements: dark stone gates, flickering torches, misty tunnel, lone figure walking towards you. The mood is foreboding. Need a protagonist, backstory, suspense.

Start with setting: maybe an abandoned estate, the gates are part of a cursed place. Protagonist could be someone seeking revenge or answers, drawn in by curiosity. Build tension with sensory details—sounds of dripping water, cold air, shadows moving.

Let's outline:

- Introduce character: Eleanor, who inherited her great-grandmother's estate, heard legends about the "Whispering Gate."

- The gate is said to only open for those with blood ties, but she got a key somehow.

- Entering, mist swirls, torches flicker unnaturally. The figure in the tunnel—maybe a ghost or entity.

- Flashback: her grandmother warned her never to cross the gate, about the "Hollow Ones" that live beyond.

- Climax: the figure gets closer, voice echoes, reveals a terrible truth (family curse, ancestral sin).

- Ending: Eleanor is trapped, becomes part of the eternal procession.

Now craft prose with vivid descriptions, horror elements (psychological and supernatural).

Check flow: hook readers, build suspense, twist at the end.

Write in first person for immersion. Use sensory details—sound of metal gates creaking, smell of damp stone, the chill in the air.

[[[thinking end]]]

Title: The Whispering Gate

The iron gates groaned as I shoved them open, their rusted hinges screaming like a dying thing. Torchlight flickered weakly in the damp stone sconces flanking the entrance, casting jagged shadows that danced across the crumbling stone pillars. Mist curled through the archway ahead, thick and cold enough to choke on, swallowing the torches’ flames into ghostly blue embers.

I shouldn’t have come.

The letter from my grandmother had been a cryptic warning, ink smudged by tears: “The gate only opens for blood, Eleanor. And when it does… never look back.” But I was desperate. My mother’s illness—her body wasting away as if drained of life itself—had led me to this cursed ruin. The townsfolk whispered about the Hollow Ones, creatures said to lurk in the tunnel beyond the gates, feeding on those who dared cross the stone threshold.

Blood ties, I muttered, clutching the cold iron key she’d left behind. The brass felt slick with sweat as I slid it into the lock. It clicked—once, then again, like a heartbeat. The gates swung open with a sigh that echoed in my bones.

Then I saw him.

A lone figure stood at the far end of the tunnel, silhouetted by a ghostly light filtering from some unseen source above. Tall, gaunt, his posture rigid. He didn’t move. Didn’t breathe. Just… waited.

“Hello?” My voice cracked, swallowed by the mist.

No answer. Only the drip of water from the archway’s ceiling, each drop hitting stone like a countdown. I stepped forward, boots crunching over shattered bricks. The torches flickered—wrong, they shouldn’t flicker this fast, unless…

A hand touched my shoulder.

I spun. Nothing there. But the mist thickened, curling around me like fingers. The figure in the tunnel—was it always there?

Then came the whisper:

“You promised.”

The voice wasn’t human. It slithered through the stones, hollow and layered with echoes. I knew that voice. It was Mother’s.

The mist parted. In its place stood a skeletal thing, its skin stretched tight over bone, eyes like voids. It held a lantern—its flame flickering sickly orange—and behind it, a endless procession of figures, their faces blurred but their hands outstretched, grasping at the air.

My grandmother had warned me: the gate doesn’t let you leave. She’d meant it literally. The Hollow Ones weren’t in the tunnel—they were the tunnel. The stone, the mist, the torches—they were all part of them. And they’d waited for blood.

“You promised,” the thing repeated, its mouth stretching unnaturally wide. “You promised you’d bring her.”

My mother’s voice, now weak and broken: “It’s not the sickness, Eleanor. It’s the gate.”

I’d tried to leave, but the gates had slammed shut behind me. The stone pillars were now a cage, each breath of mist carrying whispers of forgotten names—my ancestors, all who’d crossed the gate before me, their souls trapped in the endless procession.

The skeletal figure stepped closer. Its lantern’s light revealed the truth: the tunnel wasn’t stone, but bones. The torches? They weren’t fire—they were souls. And the lone figure in the mist? It was waiting for me to join the procession.

I stumbled backward, but the gate refused to open. The ironwork seemed to writhe, transforming into teeth as I screamed. The mist swallowed me whole, and then—

Silence.

I am standing now where he once stood, the lantern’s flame flickering in my grasp. Somewhere beyond the mist, a new light glows. Another soul will hear the whisper: “You promised.”

The gate swings shut behind them.

And I reach out to welcome the next one home.

---

IMPORTANT => GGUF QUANTS:

You need both the GGUF quant(s) AND the special "mmproj" GGUF quant (ONE of: Q8, F16, BF16 or F32) to use all functions with this model.

Save these to the SAME folder.

In LM Studio, the app will auto-detect the "mmproj" file, and new options will be available.

For KoboldCpp, you have to load the GGUF and the "mmproj" file separately [these are two separate sections in the loader].
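A quick way to confirm that a folder holds both pieces is sketched below. It assumes the vision projector file has "mmproj" somewhere in its file name; the helper names `find_model_files` and `ready_for_vision` are made up for this example.

```python
from pathlib import Path


def find_model_files(folder):
    """Split the .gguf files in a folder into main quants and mmproj files.

    Assumes the projector file name contains "mmproj".
    """
    ggufs = sorted(Path(folder).glob("*.gguf"))
    mmproj = [p for p in ggufs if "mmproj" in p.name.lower()]
    quants = [p for p in ggufs if "mmproj" not in p.name.lower()]
    return quants, mmproj


def ready_for_vision(folder):
    """True only when at least one quant AND one mmproj file are present."""
    quants, mmproj = find_model_files(folder)
    return bool(quants) and bool(mmproj)


# Check the current directory; point this at your model folder instead.
print("vision ready:", ready_for_vision("."))
```

If this prints `False` for your model folder, either the main quant or the "mmproj" file is missing from it (or the projector file is named differently than assumed).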

---

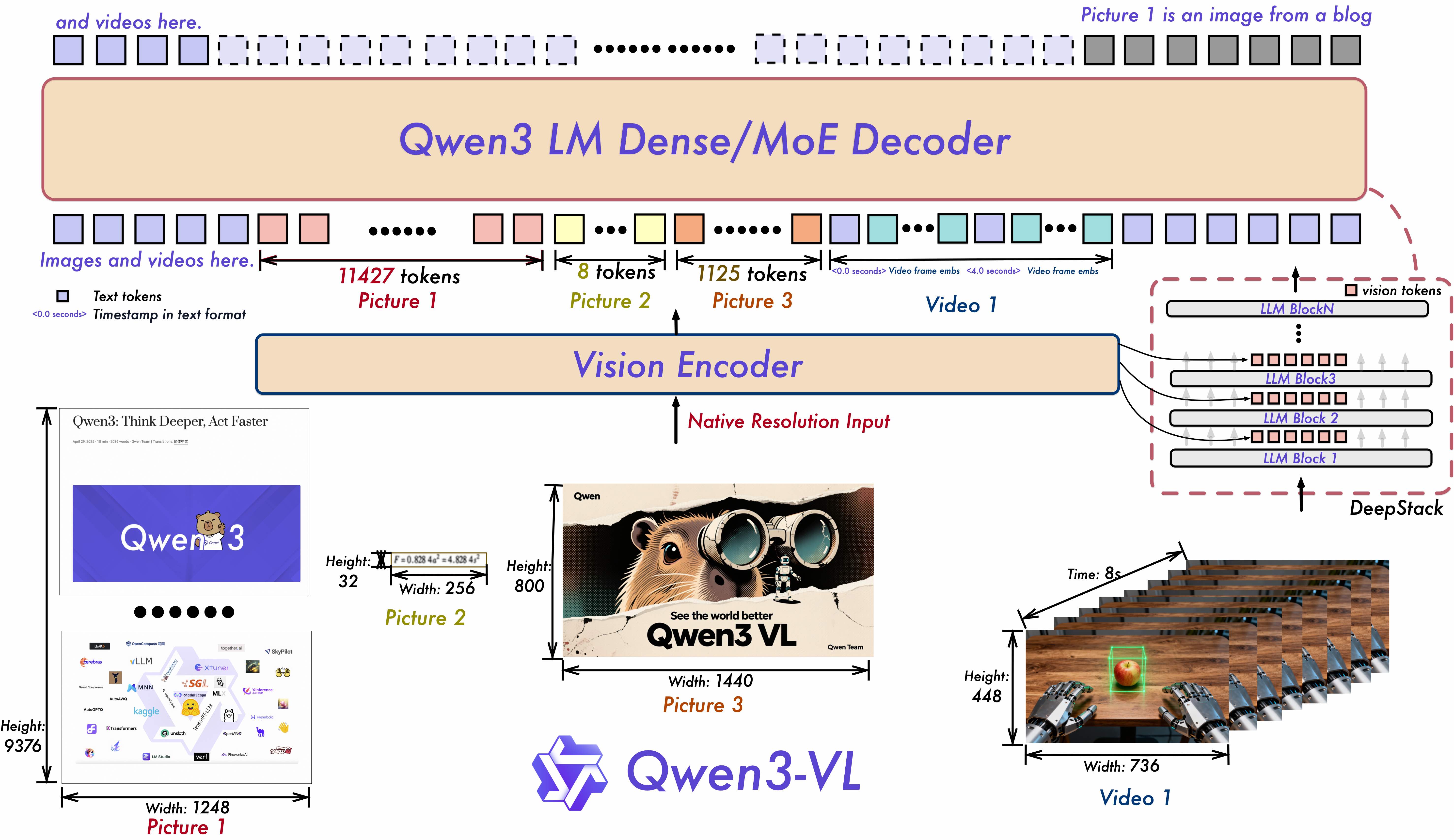

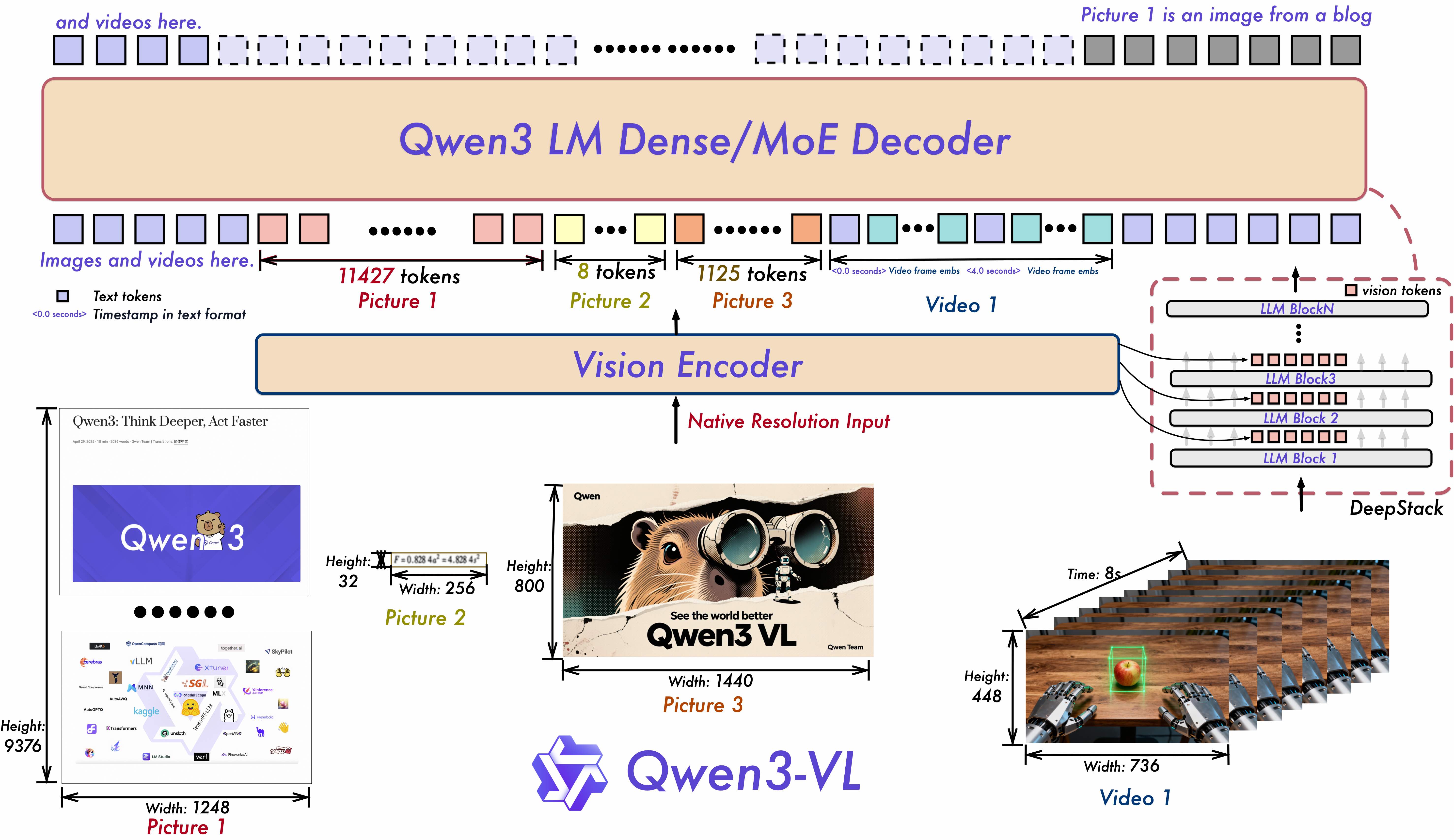

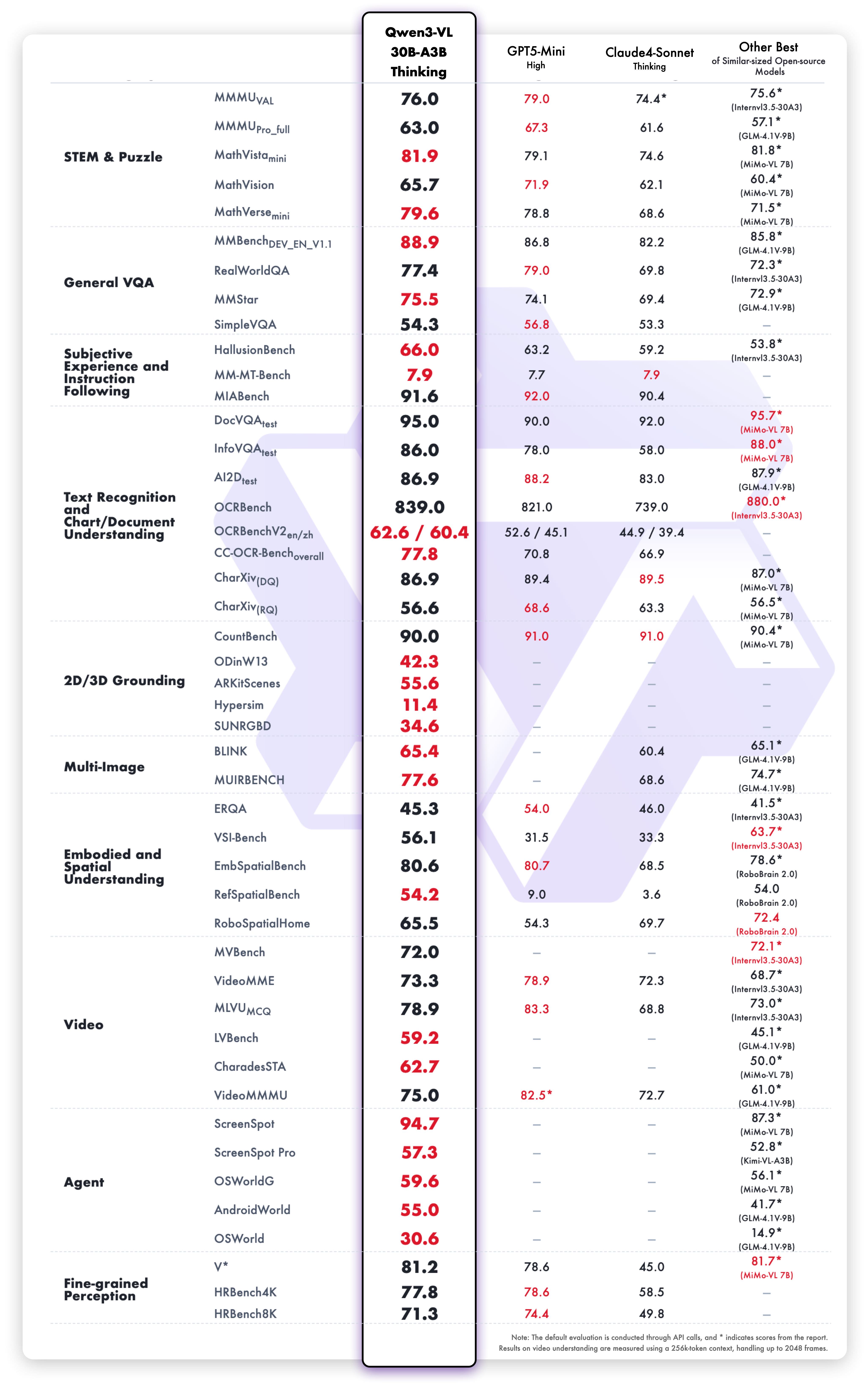

1. **Interleaved-MRoPE**: Full‑frequency allocation over time, width, and height via robust positional embeddings, enhancing long‑horizon video reasoning.

2. **DeepStack**: Fuses multi‑level ViT features to capture fine‑grained details and sharpen image–text alignment.

3. **Text–Timestamp Alignment:** Moves beyond T‑RoPE to precise, timestamp‑grounded event localization for stronger video temporal modeling.

This is the weight repository for Qwen3-VL-30B-A3B-Thinking.

---

## Model Performance

**Multimodal performance**

**Pure text performance**

## Quickstart

Below, we provide simple examples to show how to use Qwen3-VL with 🤖 ModelScope and 🤗 Transformers.

The code of Qwen3-VL is included in the latest Hugging Face transformers, and we advise you to build from source with the command:

```
pip install git+https://github.com/huggingface/transformers
# pip install transformers==4.57.0 # currently, V4.57.0 is not released
```

### Using 🤗 Transformers to Chat

Here is a code snippet showing how to use the chat model with `transformers`:

```python
from transformers import Qwen3VLMoeForConditionalGeneration, AutoProcessor

# default: Load the model on the available device(s)
model = Qwen3VLMoeForConditionalGeneration.from_pretrained(
    "Qwen/Qwen3-VL-30B-A3B-Thinking", dtype="auto", device_map="auto"
)

# We recommend enabling flash_attention_2 for better acceleration and memory saving,
# especially in multi-image and video scenarios.
# import torch
# model = Qwen3VLMoeForConditionalGeneration.from_pretrained(
#     "Qwen/Qwen3-VL-30B-A3B-Thinking",
#     dtype=torch.bfloat16,
#     attn_implementation="flash_attention_2",
#     device_map="auto",
# )

processor = AutoProcessor.from_pretrained("Qwen/Qwen3-VL-30B-A3B-Thinking")

messages = [
    {
        "role": "user",
        "content": [
            {
                "type": "image",
                "image": "https://qianwen-res.oss-cn-beijing.aliyuncs.com/Qwen-VL/assets/demo.jpeg",
            },
            {"type": "text", "text": "Describe this image."},
        ],
    }
]

# Preparation for inference
inputs = processor.apply_chat_template(
    messages,
    tokenize=True,
    add_generation_prompt=True,
    return_dict=True,
    return_tensors="pt",
)
# Move the inputs to the same device as the model
inputs = inputs.to(model.device)

# Inference: Generation of the output
generated_ids = model.generate(**inputs, max_new_tokens=128)
# Drop the prompt tokens so only the newly generated tokens are decoded
generated_ids_trimmed = [
    out_ids[len(in_ids) :] for in_ids, out_ids in zip(inputs.input_ids, generated_ids)
]
output_text = processor.batch_decode(
    generated_ids_trimmed, skip_special_tokens=True, clean_up_tokenization_spaces=False
)
print(output_text)
```

---