NVIDIA Nemotron Nano 12B v2 - GGUF Q4_K_M (7GB)

This repository provides a 4-bit quantized GGUF build of NVIDIA Nemotron Nano 12B v2 using Q4_K_M. Quantization reduces the on-disk size from roughly 23GB for the original full-precision weights to approximately 7GB while preserving core capabilities.

Upstream base model: nvidia/NVIDIA-Nemotron-Nano-12B-v2

SHA256: 82ea4805d2f9f37e3c67b06768141ff58e43fb0dcd3983a82e9c2f481eb7fea8
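A download can be checked against the checksum above with a short script. This is a minimal sketch that assumes the SHA256 refers to `model-q4.gguf` and that the file sits in the current directory; neither is stated explicitly in this README.

```python
import hashlib
from pathlib import Path

# Checksum published in this README (assumed to cover model-q4.gguf).
EXPECTED_SHA256 = "82ea4805d2f9f37e3c67b06768141ff58e43fb0dcd3983a82e9c2f481eb7fea8"


def sha256_of_file(path, chunk_size=1024 * 1024):
    """Hash a file in chunks so a multi-gigabyte model never sits fully in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


if __name__ == "__main__":
    model_path = Path("model-q4.gguf")  # assumed download location
    if model_path.exists():
        actual = sha256_of_file(model_path)
        print("OK" if actual == EXPECTED_SHA256 else f"MISMATCH: {actual}")
    else:
        print(f"{model_path} not found")
```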

What's included

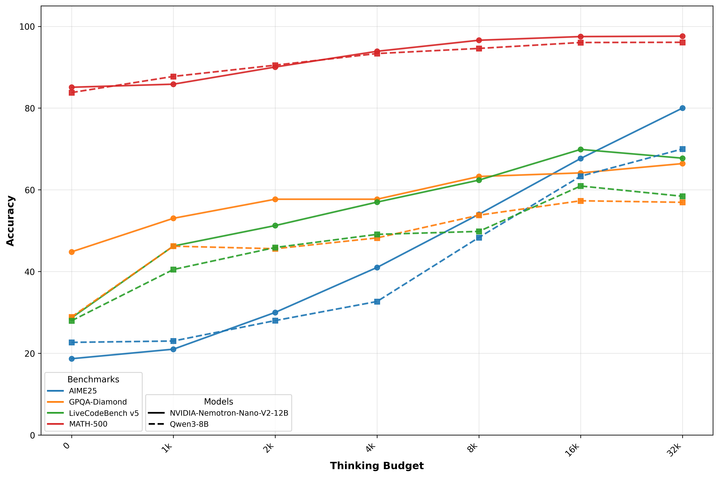

- model-q4.gguf (7.0GB)
- tokenizer.json
- tokenizer_config.json
- special_tokens_map.json
- config.json
- generation_config.json
- configuration_nemotron_h.py
- modeling_nemotron_h.py
- nemotron_toolcall_parser_no_streaming.py
- bias.md, explainability.md, privacy.md, safety.md
- acc-vs-budget.png
- README.md

Capabilities

- ✓ Tool calling support via preserved special tokens and helper parser script

- ✓ Thinking mode tokens for structured reasoning

- ✓ Long-context up to 128k window

- ✓ Multilingual general-purpose LLM behavior

Note: GGUF inference backends may vary in their native support for tool-calling integrations; use the included parser or your own orchestration as needed.
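If your backend returns raw text, "your own orchestration" can be as simple as extracting the tool-call payload yourself. The sketch below assumes the model wraps calls in `<TOOLCALL>...</TOOLCALL>` tags around a JSON object or list with `name` and `arguments` keys. Both the tag and the schema are assumptions here; confirm them against the included `nemotron_toolcall_parser_no_streaming.py` before relying on this. The function name `extract_tool_calls` is illustrative.

```python
import json
import re

# Assumed wrapper tags; verify against nemotron_toolcall_parser_no_streaming.py.
TOOLCALL_PATTERN = re.compile(r"<TOOLCALL>(.*?)</TOOLCALL>", re.DOTALL)


def extract_tool_calls(text):
    """Return a list of {"name", "arguments"} dicts found in raw model output."""
    calls = []
    for payload in TOOLCALL_PATTERN.findall(text):
        try:
            parsed = json.loads(payload)
        except json.JSONDecodeError:
            continue  # skip malformed payloads instead of failing the whole turn
        if isinstance(parsed, dict):
            parsed = [parsed]  # accept a single call as well as a list of calls
        if not isinstance(parsed, list):
            continue
        for item in parsed:
            if isinstance(item, dict) and "name" in item:
                calls.append(
                    {"name": item["name"], "arguments": item.get("arguments", {})}
                )
    return calls
```

Each returned entry can then be dispatched to your own function registry, with the result fed back to the model as a follow-up message.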

Hardware notes

- Disk space: 8GB free recommended for the quantized file and metadata

- CPU inference: 16GB RAM recommended for 4k contexts; 32GB suggested for comfortable operation. For 128k contexts, memory usage grows significantly and 64 to 128GB system RAM may be required

- GPU offload: 8 to 16GB VRAM can accelerate decoding with llama.cpp -ngl offloading; very long contexts may require 24 to 48GB VRAM or hybrid CPU plus GPU offload

- Throughput: Depends on backend, threads, and offload settings
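To see why memory grows with context length, it can help to estimate the KV cache separately from the weights. The sketch below uses the standard formula for attention layers (keys plus values, per layer, per KV head, per position). The architecture numbers in the example are hypothetical placeholders, not this model's real values; read the actual ones from `config.json`. In a hybrid architecture, only the attention layers would be counted here.

```python
def kv_cache_bytes(n_ctx, n_attn_layers, n_kv_heads, head_dim, bytes_per_value=2):
    """Estimate KV cache size for the attention layers of a model.

    The leading factor of 2 accounts for storing both keys and values.
    bytes_per_value=2 corresponds to a 16-bit cache.
    """
    return 2 * n_attn_layers * n_kv_heads * head_dim * n_ctx * bytes_per_value


if __name__ == "__main__":
    # Hypothetical placeholder architecture; replace with values from config.json.
    placeholder = {"n_attn_layers": 6, "n_kv_heads": 8, "head_dim": 128}
    for n_ctx in (4096, 131072):
        size_mib = kv_cache_bytes(n_ctx, **placeholder) / (1024 ** 2)
        print(f"n_ctx={n_ctx}: about {size_mib:.0f} MiB of KV cache")
```

The estimate scales linearly with `n_ctx`, so it covers only the context-dependent part of memory use; weights, compute buffers, and backend overhead come on top.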

Usage

llama.cpp

Build llama.cpp, then run:

Generate:

./llama-cli -m model-q4.gguf -p "Hello, Nemotron." -n 128 -t 8 -c 4096 -ngl 35

Server:

./llama-server -m model-q4.gguf -c 4096 -ngl 35

For very long contexts, increase -c accordingly and ensure sufficient RAM or VRAM for KV cache.
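Once `llama-server` is running, it can be queried over its OpenAI-compatible HTTP API. The following is a minimal standard-library sketch, assuming the server listens on its default address `http://localhost:8080`; the helper names `build_chat_request` and `chat` are illustrative.

```python
import json
import urllib.request


def build_chat_request(prompt, base_url="http://localhost:8080", max_tokens=128):
    """Build a POST request for the server's /v1/chat/completions endpoint."""
    payload = {
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt, **kwargs):
    """Send the prompt to a running llama-server and return the reply text."""
    request = build_chat_request(prompt, **kwargs)
    with urllib.request.urlopen(request, timeout=120) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With the server started as shown above, `print(chat("Hello, Nemotron."))` should print the model's reply.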

Python via llama-cpp-python

pip install llama-cpp-python

from llama_cpp import Llama

llm = Llama(model_path="model-q4.gguf", n_ctx=4096, n_threads=8)

out = llm("Write a short greeting.", max_tokens=128)

print(out["choices"][0]["text"])

Ollama

Create a Modelfile referencing this repo, then create and run:

Modelfile:

FROM hf.co/Avarok/nvidia-nemotron-nano-12b-v2-q4_k_m

PARAMETER num_ctx 4096

Commands:

ollama create nemotron-nano-12b-q4km -f Modelfile

ollama run nemotron-nano-12b-q4km

Note: Ollama versions and syntax may evolve; consult Ollama docs if the Modelfile interface changes.

License and attribution

- Base model: NVIDIA Nemotron Nano 12B v2

- License: This GGUF quantized derivative is subject to the original model's license and terms. See the upstream model card and license. By using this repository you agree to comply with NVIDIA's licensing for Nemotron models

- Attribution: If you use this model, please attribute both NVIDIA for the base model and this repository for the quantized packaging

Reproducibility

This artifact was produced by converting the upstream weights to GGUF and quantizing with Q4_K_M. An equivalent quantization command with llama.cpp tools is:

llama-quantize input.gguf model-q4.gguf Q4_K_M

Exact commands may differ based on the conversion workflow for the upstream model version.
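For orientation, the two-step workflow (convert, then quantize) might be scripted as below. This is a sketch under assumptions: the exact conversion used for this artifact is not documented here, the local model directory is hypothetical, and the `bf16` intermediate type is a guess. `convert_hf_to_gguf.py` is the conversion script shipped with llama.cpp.

```python
import shlex


def build_conversion_commands(
    hf_model_dir,
    intermediate="input.gguf",
    output="model-q4.gguf",
    quant_type="Q4_K_M",
):
    """Return the convert and quantize commands as argument lists."""
    convert = [
        "python", "convert_hf_to_gguf.py", hf_model_dir,
        "--outfile", intermediate,
        "--outtype", "bf16",  # assumed intermediate precision
    ]
    quantize = ["llama-quantize", intermediate, output, quant_type]
    return [convert, quantize]


if __name__ == "__main__":
    # Hypothetical local checkout of the upstream weights.
    for command in build_conversion_commands("./NVIDIA-Nemotron-Nano-12B-v2"):
        print(shlex.join(command))
```

The script only prints the commands; to execute them, pass each list to `subprocess.run(command, check=True)` from inside a llama.cpp checkout.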

Safety

Review the included bias, privacy, and safety documents. As with all LLMs, outputs may be inaccurate or unsafe without proper safeguards and human oversight.

